October 8, 2025

by Shreya Mattoo / October 8, 2025

As a marketing professional, I am best friends with data. At the absolute core of my job, you will find visual customer data. When I set foot in the B2B industry, it took me a good number of business days to understand how an ETL tool extracts and transforms raw business data and loads it into a data warehouse or data lake, which simplifies data management for teams.

Data engineers, CTOs, and data scientists consider the best ETL tools to handle APIs, data processing, and data warehousing for smooth data management.

Because of my growing curiosity to analyze raw data and turn it into a meaningful customer journey, I set out to review the 6 best ETL tools for data transfer and replication for external use.

If you are already weighing which ETL tools handle data securely and offer cost-efficient pricing, this detailed review guide is for you.

These are the best ETL tools in their category, according to G2 Grid Reports. I’ve also added their monthly pricing to make comparisons easier for you.

The global ETL software market size was valued at USD 6.5 billion in 2023 and is poised to grow from USD 7.34 billion in 2024 to USD 19.37 billion by 2032, growing at a CAGR of 12.9% during the forecast period.
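For readers who want to check the growth figure themselves, here is a minimal sketch of the compound annual growth rate (CAGR) arithmetic. It uses only the numbers quoted above; the function name `cagr` is my own and is purely illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in USD billions: 7.34 in 2024 growing to 19.37 by 2032.
rate_2024_to_2032 = cagr(7.34, 19.37, years=2032 - 2024)
print(f"{rate_2024_to_2032:.1%}")  # prints 12.9%
```

The result matches the stated 12.9% over the eight-year forecast period.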

Even though I operate in the marketing sector, I am a former developer who knows a thing or two about how to crunch data and sum variables in a clean and structured way using a relational database management system (RDBMS) and data warehousing.

Although my experience as a data specialist is dated, my marketing role made me revisit data workflows and management techniques. I understood that once raw data files enter a company's tech stack, say CRM or ERP, they need to be readily available for standard business processes without any outliers or invalid values.

Evidently, the ETL tools that I reviewed excelled at transferring, managing, and replicating data to optimize performance.

Whether you wish to regroup and reengineer your raw data into a digestible format, integrate large databases with ML workflows, or optimize performance and scalability, this list of ETL tools will help you with that.

I started with G2’s Grid® Report for ETL tools to identify platforms consistently rated high for user satisfaction and market presence. This helped me identify solutions consistently trusted by data engineers, developers, and analysts for their reliability and performance.

Using AI-assisted analysis, I examined G2 review data to surface recurring feedback on performance, scalability, schema management, and usability. I also researched vendor documentation to ensure accuracy in reporting key features, integrations, and pricing details.

In cases where I couldn't personally evaluate a tool due to limited access, I consulted a professional with hands-on experience and validated their insights using verified G2 reviews. The screenshots featured in this article may mix those captured during evaluation and those obtained from the vendor's G2 page.

ETL tools' prime purpose is to help both technical and non-technical users store, organize, and retrieve data without much coding effort. According to my review, these ETL tools not only offer API connectors to transfer raw CRM or ERP data but also eliminate invalid data, cleanse data pipelines, and offer seamless integration with ML tools for data analysis.

A well-equipped ETL tool should also integrate with cloud storage or on-prem platforms so that data can be stored in cloud data warehouses or on-prem databases. Capabilities like microservices support, serverless handling, and low latency also factored into this list, since they are hallmarks of a well-equipped ETL tool in 2025.
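To make the extract, cleanse, and load steps described above more concrete, here is a minimal sketch using only Python's standard library. It is not how any of the reviewed tools work internally. The sample CRM rows, the column names, and the in-memory SQLite database standing in for a warehouse are all assumptions for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical raw CRM export; column names and rows are made up for illustration.
RAW_CSV = """customer_id,email,lifetime_value
1,ana@example.com,1200.50
2,,300.00
3,li@example.com,not_a_number
4,sam@example.com,89.99
"""


def extract(raw_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: drop rows with a missing email or a non-numeric value."""
    clean = []
    for row in rows:
        if not row["email"]:
            continue
        try:
            value = float(row["lifetime_value"])
        except ValueError:
            continue
        clean.append((int(row["customer_id"]), row["email"], value))
    return clean


def load(rows, conn):
    """Load: write cleaned rows into a SQLite table standing in for a warehouse."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, lifetime_value REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()
print(loaded)  # only rows 1 and 4 survive the cleansing step
```

Commercial ETL platforms wrap the same three stages in connectors, scheduling, and monitoring, which is where most of the differences between the tools below show up.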

After reviewing ETL tools, I got a better hang of how raw data is extracted and transformed for external use, and of the data pipeline automation processes that secure and protect that data in a safe cloud environment for enterprise use.

Out of 40+ tools I scouted and learned about, these 6 ETL tools stood out in terms of latency, high security, API support, and AI and ML support.

The list below contains genuine reviews from the ETL tools category page. To be included in this category, software must:

*This data was pulled from G2 in 2025. Some reviews may have been edited for clarity.

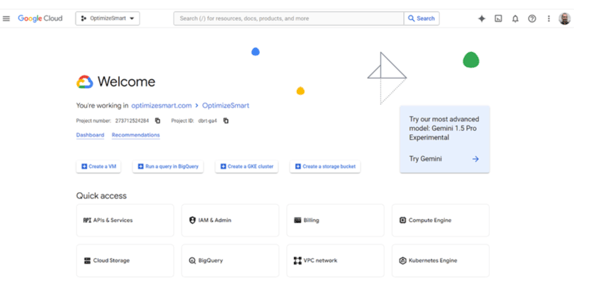

Google Cloud BigQuery is an AI-powered data analytics platform that allows your teams to run SQL queries in multiple formats across the cloud, with the first 1 tebibyte of query data processed each month included in the free tier.

Google Cloud BigQuery has been ranked as a category leader on G2, with a customer satisfaction score of 99 and a market presence score of 99, based on 284 reviews. Further, 92% of users are also likely to recommend it to others.

When I first started using Google Cloud BigQuery, what immediately stood out to me was how fast and scalable it was. I was dealing with fairly large datasets, millions of rows and sometimes terabytes of data, and BigQuery consistently processed them in seconds.

I didn't have to set up or manage infrastructure at all. It's fully serverless, so I could jump right in without provisioning clusters or worrying about scaling. That felt like a major win early on.

The SQL interface made it approachable. Since it supports standard SQL, I didn't have to learn anything new. I liked being able to write familiar queries while still getting the performance boost that BigQuery offers. The web interface has a built-in query editor, which works fine for the most part.

What I found genuinely helpful was the way it integrates with other Google services in the ecosystem. I've used it with GA4 and Google Data Studio (now Looker Studio), and the connections were seamless and easy. You can also pull data from Google Cloud Storage, run models using BigQuery ML (right from the UI using SQL), and connect to tools like Looker or third-party platforms like Hevo or Fivetran. It feels like BigQuery is built to fit into a modern data stack without much friction.

The sentiment echoes in G2 reviews too. Among users, BigQuery stands out for its unmatched speed, scalability, and seamless integration across the Google ecosystem. Many highlight how easily it handles massive datasets without infrastructure overhead, making it a top choice for analytics teams that prioritize performance and reliability.

G2 reviewers also mention that pricing requires a bit of planning, especially with the pay-as-you-go model where exploratory queries can scan large data volumes. Teams with steady workloads often prefer the flat-rate option for predictability. There’s also a learning curve for newer users, particularly around SQL optimization, partitioning, and clustering, though Google’s documentation and community resources help smooth the process.

Overall, BigQuery is a great fit for organizations ready to manage cost structure intentionally and leverage its advanced capabilities for real-time analytics at scale.
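To show why reviewers stress partitioning under pay-as-you-go billing, here is a rough back-of-the-envelope sketch. It assumes billing is proportional to bytes scanned and that the first 1 tebibyte per month is free, as mentioned earlier. The price per tebibyte is a placeholder I picked for illustration, not a quoted rate, so check current pricing before relying on any number.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def on_demand_cost(bytes_per_query, queries_per_month, price_per_tib, free_tib=1.0):
    """Estimate a monthly bill when cost is proportional to bytes scanned."""
    total_tib = bytes_per_query * queries_per_month / TIB
    billable_tib = max(0.0, total_tib - free_tib)
    return billable_tib * price_per_tib


ASSUMED_PRICE_PER_TIB = 5.0  # placeholder value, not a quoted price

# Hypothetical 2 TiB table holding 30 days of data, queried once a day.
full_scan = on_demand_cost(2 * TIB, 30, ASSUMED_PRICE_PER_TIB)
# Same daily query, but a date partition filter limits the scan to one day's slice.
partitioned = on_demand_cost(2 * TIB // 30, 30, ASSUMED_PRICE_PER_TIB)

print(f"full table scans: {full_scan:.2f}")
print(f"partition-pruned: {partitioned:.2f}")
```

Under these assumptions, the partition-pruned workload scans roughly one-thirtieth of the data, which is the kind of gap that makes exploratory full-table queries expensive.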

"I have been working with Google Cloud for the past two years and have used this platform to set up the infrastructure as per the business needs. Managing VMs, Databases, Kubernetes Clusters, Containerization, etc, played a significant role in considering it. The pay-as-you-go cloud concept in Google Cloud is way better than its competitors, although at some point you might find it getting in the way if you are managing a giant infrastructure."

- Google Cloud BigQuery Review, Zeeshan N.

"Misunderstanding of how queries are billed can lead to unexpected costs and requires careful optimization and awareness of best practices, and while basic querying is simple, features like partitioning, clustering, and BigQuery ML require some learning, and users heavily reliant on UI might find some limitations compared to standalone SQL clients of third-party tools."

- Google Cloud BigQuery Review, Mohammad Rasool S.

Explore the 6 best managed file transfer software to log, transfer, track, and audit data with convenience and protect your system from unwarranted attacks.

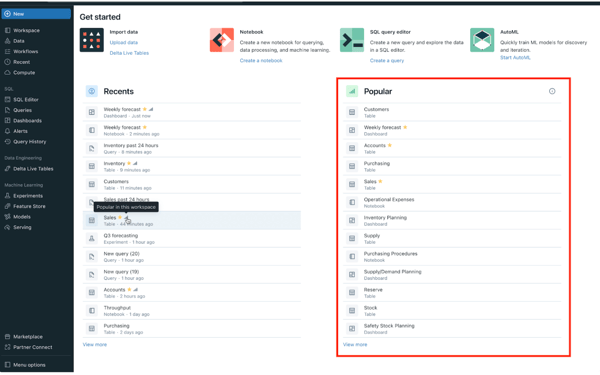

Databricks Data Intelligence Platform displays powerful ETL capabilities, AI/ML integrations, and querying services to secure your data in the cloud and help your data engineers and developers.

As a category leader, Databricks Data Intelligence Platform has a satisfaction score of 97 and a market presence score of 84, making it a trustworthy provider. Around 92% of G2 users are likely to recommend Databricks for ETL data-driven workflows.

I have been using Databricks for a while now, and honestly, it has been a game-changer, especially for handling large-scale data engineering and analytics workflows. What stood out to me right away was how it simplified big data processing.

I don't need to switch between different tools anymore; Databricks consolidates everything into one cohesive lakehouse architecture. It blends the reliability of a data warehouse with the flexibility of a data lake, which is a huge win in terms of productivity and design simplicity.

I also loved its support for multiple languages, such as Python, SQL, Scala, and even R, all within the same workspace. Personally, I switch between Python and SQL a lot, and the seamless interoperability is amazing.

Plus, the Spark integration is native and incredibly well-optimized, making batch and stream processing smooth. There is also a solid machine-learning workspace with built-in support for feature engineering, model training, and experiment tracking.

I've used MLflow extensively within the platform, and having it integrated means that I spend less time on configuration and more time on training the models.

I also loved the Delta Lake integration with the platform. It brings ACID transactions and schema enforcement to big data, which means I don't have to worry about corrupt datasets when working with real-time ingestion or complex transformation pipelines. It's also super handy when rolling back bad writes or managing schema evolution without downtime.
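The ideas in that paragraph (all-or-nothing writes, schema enforcement, and rolling back a bad write) can be sketched with a toy in-memory table. This is plain Python, not the Delta Lake API; the `VersionedTable` class and its methods are hypothetical names I made up to illustrate the concepts.

```python
class VersionedTable:
    """Toy append-only table with schema enforcement and version rollback.

    Hypothetical illustration only; this is not the Delta Lake API.
    """

    def __init__(self, schema):
        self.schema = schema   # column name -> expected Python type
        self.versions = [[]]   # versions[i] is the full row list at version i

    def append(self, rows):
        # Validate every row first so a bad batch is rejected as a whole.
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match schema")
            for col, expected in self.schema.items():
                if not isinstance(row[col], expected):
                    raise TypeError(f"{col!r} expects {expected.__name__}")
        self.versions.append(self.versions[-1] + list(rows))

    def rollback(self, version):
        # Restoring records a new version, so earlier history is never rewritten.
        self.versions.append(list(self.versions[version]))

    def rows(self):
        return self.versions[-1]


table = VersionedTable({"order_id": int, "amount": float})
table.append([{"order_id": 1, "amount": 9.5}])  # becomes version 1

try:
    # The second row has the wrong type, so the whole batch is rejected.
    table.append([{"order_id": 2, "amount": 3.0},
                  {"order_id": "3", "amount": 1.0}])
except TypeError as err:
    print("rejected:", err)

table.append([{"order_id": 99, "amount": -1.0}])  # version 2: a "bad write"
table.rollback(1)                                 # version 3 mirrors version 1
print(table.rows())
```

A real lakehouse table does this with a transaction log over files rather than Python lists, but the guarantees it gives a pipeline author are of the same shape.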

G2 users also consistently highlight its versatility across batch and streaming data, seamless integration with multiple languages, and strong support for AI and ML workflows, all within one collaborative platform.

Some reviewers mention that the interface and notebook experience are highly functional, though teams benefit most when they establish clear workflows and version control practices early on. A few also note that new users may take some time to get comfortable with advanced ETL configurations, but once they do, they find the environment intuitive and highly customizable for complex data workloads.

Overall, G2 sentiment positions Databricks as a robust, enterprise-ready platform that delivers exceptional scalability and flexibility for organizations looking to unify data engineering, analytics, and AI in one workspace.

"It is a seamless integration of data engineering, data science, and machine learning workflows in one unified platform. It enhances collaboration, accelerates data processing, and provides scalable solutions for complex analytics, all while maintaining a user-friendly interface."

- Databricks Data Intelligence Platform Review, Brijesh G.

"Databricks has one downside, and that is the learning curve, especially for people who want to get started with a more complex configuration. We spent some time troubleshooting the setup, and it’s not the easiest one to begin with. The pricing model is also a little unclear, so it isn’t as easy to predict the cost as your usage gets bigger. At times, that has led to some unforeseen expenses that we might have cut if we had better cost visibility."

- Databricks Data Intelligence Platform Review, Marta F.

Once you set your database in a cloud environment, you'll need constant monitoring. My colleague's analysis of the top 5 cloud monitoring tools in 2025 is worth checking.

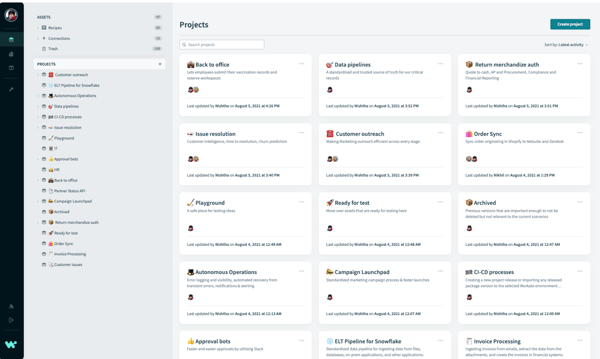

Workato is a flexible and automated ETL tool that offers data scalability, data transfer, data extraction, and cloud storage, all on a centralized platform. It also offers compatible integrations for teams to optimize performance and automate the cloud.

Based on 208+ G2 reviews, Workato earned a satisfaction score of 95, making it a category leader on G2. Around 94% of users said that they are likely to recommend it to others, most likely for process automation and ETL.

What impressed me about Workato was how easy and intuitive system integrations were. I didn't need to spend hours writing scripts or dealing with cryptic documentation. The drag-and-drop interface and its use of "recipes," also known as automation workflows, made it ridiculously simple to integrate apps and automate tasks. Whether I was linking Salesforce to Slack, syncing data between HubSpot and NetSuite, or pulling info via APIs, it felt seamless and easy.

I also loved the flexibility in integration. Workato supports over 1,000 connectors right out of the box, and if you need something custom, it offers a connector software development kit (SDK) for building your own.

I've used the API capabilities extensively, especially when building processes that hinge on real-time data transfers and custom triggers.

Recipes can be set off using scheduled triggers, app-based events, or even manual inputs, and the platform supports sophisticated logic like conditional branching, loops, and error handling routines. This means I can manage everything from a simple lead-to-CRM sync to a full-blown procurement automation with layered approvals and logging.
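The control flow described above (a trigger, a conditional branch, a loop, and an error-handling routine) looks roughly like this when written out as plain code. This is only a sketch in Python, not Workato's recipe format or SDK; `run_recipe`, the event shape, and the dictionary standing in for a CRM are all hypothetical.

```python
def run_recipe(event, crm, log):
    """Toy 'recipe' that runs whenever a trigger event arrives."""
    # Conditional branch: only new-lead events are processed.
    if event["type"] != "new_lead":
        log.append(f"skipped event type {event['type']}")
        return
    # Loop over every lead in the batch.
    for lead in event["leads"]:
        try:
            if "@" not in lead["email"]:
                raise ValueError(f"invalid email for {lead['name']}")
            crm[lead["email"]] = lead["name"]
        except ValueError as err:
            # Error handling: record the failure and keep going with the rest.
            log.append(f"error: {err}")
    log.append("run finished")


crm, log = {}, []
run_recipe(
    {"type": "new_lead", "leads": [
        {"name": "Ana", "email": "ana@example.com"},
        {"name": "Bo", "email": "bo-at-example"},
    ]},
    crm,
    log,
)
run_recipe({"type": "invoice_paid"}, crm, log)
print(crm)
print(log)
```

A low-code platform lets you assemble the same branches and loops visually, which is why non-developers can build this kind of lead-to-CRM sync.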

Another major win for me is how quickly I can spin up new workflows—hours, not days. This is partly due to the intuitive UI but also because Workato's recipe templates (there are thousands) give you a running start.

Even non-tech folks on my team started building automations—yes, it is that accessible. The governance controls are pretty robust, too. You can define user roles, manage recipe versioning, and track changes, all useful for a team setting. And if you need help with on-premises systems, Workato's got an agent, too.

G2 reviewers praise Workato’s intuitive interface and strong automation capabilities, especially its ability to handle complex integrations with minimal coding. Many teams highlight how it accelerates data workflows through pre-built connectors and flexible recipe-based automation.

Some reviewers share that performance optimization becomes most important when managing very large datasets or complex batch processes, and teams that plan workload distribution early tend to achieve smoother scalability. Others mention that response times from customer support can vary, though once connected, they find the assistance knowledgeable and solution-oriented.

On the whole, Workato is a highly capable platform for organizations seeking secure, scalable, and low-code automation that grows with their integration needs.

"The best thing is that the app is always renewing itself, reusability is one of the best features, configurable UI, and low-code implementation for complicated processes. Using Workato support has been a big comfort - the staff is supportive and polite."

- Workato Review, Noya I.

"If I had to complain about anything, I'd love to get all the dev-ops functionality included in the standard offering. Frankly, I'm not sure if that's still a separate offering that requires extra spending."

- Workato Review, Jeff M.

Check out the working architecture of ETL, ELT, and reverse ETL to optimize your data processes and automate the integration of real-time data with the existing pipeline.

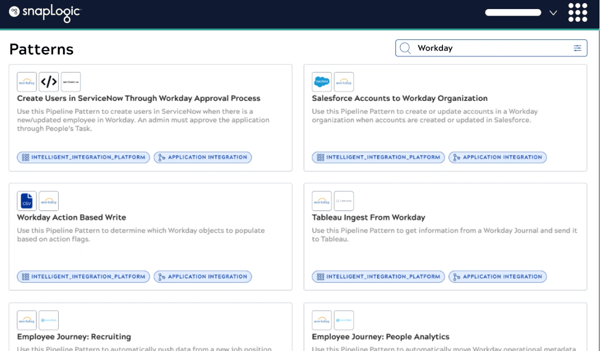

SnapLogic Intelligent Integration Platform (IIP) is a powerful AI-led, plug-and-play integration platform that monitors your data ingestion, routes data to cloud servers, and automates business processes to simplify your technology stack and support enterprise growth.

As a category leader on G2, SnapLogic has a customer satisfaction score of 97. Around 88% of G2 users are likely to recommend it to others for data automation, integration, and transformation.

After spending some serious time with the SnapLogic Intelligent Integration Platform, I have to say that this tool hasn't received the recognition it deserves. What instantly won me over was how easy it was to set up a data pipeline. You drag, you drop, and snap, and it is done.

The platform's low-code/no-code environment, powered with pre-built connectors (called Snaps), helps me build powerful workflows in minutes. Whether I am integrating cloud apps or syncing up with on-prem systems, the process just feels seamless.

SnapLogic really shines when it comes to handling hybrid integration use cases. I loved that I could work with both cloud-native and legacy on-prem data sources in one place without switching tools.

The Designer interface is where all the magic happens in a clean, user-friendly, and intuitive way. Once you dive deeper, features like customizable dashboards, pipeline managers, and error-handling utilities give you control over your environment that many other platforms miss.

One thing that surprised me (in the best way) is how smart the platform feels. The AI-powered assistant, Iris, nudges you in the right direction while building workflows. This saved me loads of time by recommending the next steps based on the data flow that I was constructing. It is also a lifesaver when you're new to the platform and not sure where to go next.

G2 reviewers highlight that SnapLogic is intuitive and easy to use, especially for building standard data workflows through its low-code interface. Some reviewers mention that when projects involve more complex pipelines, referring to detailed documentation and setup guidance helps teams get the most out of advanced features.

Others note that while most connectors perform reliably, optimizing configurations for larger or time-sensitive workloads helps maintain consistency and performance across integrations.

All things considered, SnapLogic is a solid fit for organizations that want to streamline integrations through a low-code environment without compromising scalability. It’s particularly well-suited for teams managing hybrid data environments or frequent API-based connections.

"The things I like most are the AWS snaps, REST snaps, and JSON snaps, which we can use to do most of the required things. Integration between APIs and setup of standard authentication flows like OAuth is very easy to set up and use. AWS services integration is very easy and smooth. Third-party integration via REST becomes very useful in daily life and allows us to separate core products and other integrations."

- SnapLogic Intelligent Integration Platform Review, Tirth D.

"SnapLogic is solid, but the dashboard could be more insightful, especially for running pipelines. Searching pipelines via task could be smoother. CI/CD implementation is good, but migration takes time – a speed boost would be nice. Also, aiming for a lag-free experience. Sometimes, cluster nodes don't respond promptly. Overall, great potential, but a few tweaks could make it even better."

- SnapLogic Intelligent Integration Platform Review, Ravi K.

Domo is an easy-to-use and intuitive ETL tool designed to create friendly data visualizations, handle large-scale data pipelines, and transfer data with low latency and high compatibility.

Based on 341 reviews, Domo has received a satisfaction score of 82 and a market presence score of 69. Around 87% of users are likely to recommend Domo to others for data automation, loading, and reporting.

At its core, Domo is an incredibly robust and scalable data experience platform that brings together ETL, data visualization, and BI tools under one roof. Even if you are not super technical, you can still build powerful dashboards, automate reports, and connect data sources without feeling overwhelmed.

The Magic ETL feature is my go-to. It's a drag-and-drop interface that makes transforming data intuitive. You don't have to write SQL unless you want to get into deeper customizations.

And while we're on SQL, it is built on MySQL 5.0, and advanced users can dive into "Beast Mode," which is Domo's custom calculated fields engine. Beast Mode can be a powerful ally, but it has some drawbacks. The learning curve is a bit steep, and the documentation might not always point you to the right alternative.

However, Domo also shines in its integration capabilities. It supports hundreds of data connectors, like Salesforce, Google Analytics, or Snowflake. The sync with these platforms is seamless. Plus, everything updates in real time, which can be a lifesaver if you are dealing with live dashboards or key performance indicator (KPI) monitoring.

Having all your tools and data sets consolidated in one platform just makes collaboration much easier, especially across business units.

G2 reviewers highlight Domo’s ease of use, rich connector ecosystem, and ability to unify business data for faster insights. Many users appreciate how easily teams can build dashboards and automate reports without needing deep technical expertise. Some reviewers mention that the platform’s newer consumption-based pricing model offers flexibility as teams scale, though it may require careful tracking to align with usage patterns.

Others note that performance optimization is most effective for very large datasets or highly visual dashboards, and that the mobile experience, while functional, may not yet match the full feature depth of the desktop version.

Based on G2 reviews, Domo is a great fit for organizations that want to make data visualization and reporting more accessible across teams. Its intuitive dashboarding and wide connector network make it well-suited for business users and analysts who want quick, self-service insights.

"Domo actually tries to apply feedback given in the community forum to updates/changes. The Knowledge Base is a great resource for new users & training materials. Magic ETL makes it easy to build dataflows with minimal SQL knowledge & has excellent features for denoting why dataflow features are in place in case anyone but the original user needs to revise/edit the dataflow. The automated reporting feature is a great tool to encourage adoption."

- Domo Review, Allison C.

"Some BI tools have things that Domo doesn’t. For example, Tableau and Power BI can do more advanced analysis and allow you to customize reports more. Some work better with certain apps or let you use them offline. Others can handle different types of data, like text and images, better. Plus, some might be cheaper. Each tool has its own strengths, so the best one depends on what you need."

- Domo Review, Leonardo D.

Check out the differences between structured and unstructured data and categorize your data pipelines more efficiently.

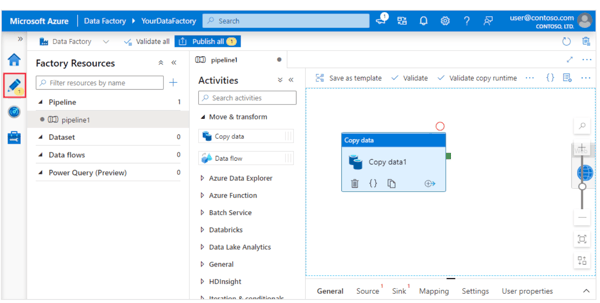

Azure Data Factory is a cloud-based ETL that allows users to integrate disparate data sources, transform and retrieve on-prem data from SQL servers, and manage cloud data storage efficiently.

According to G2, Azure Data Factory has earned a customer satisfaction score of 71, and around 92% of users are likely to recommend it for big data integration, data scalability, and ETL processes.

What attracted me to Azure Data Factory was how easy it was to get started. The drag-and-drop interface is a lifesaver, especially if you are dealing with complex ETL pipelines.

I am not a fan of writing endless lines of code for every little transformation, so the visual workflows are very refreshing and productive.

Connecting to a wide variety of data sources, such as SQL, Blob storage, and even on-prem systems, was way smoother than I had expected.

One of the things I absolutely love about ADF is how well it plays into the rest of the Azure ecosystem. Whether it is Azure Synapse, Data Lake, or Power BI, everything feels like it's just a few clicks away. The linked services and datasets are highly configurable, and parameterization makes reusing pipelines super easy.

I use triggers frequently to automate workflows, and the built-in monitoring dashboard has been helpful when debugging or checking run history.

Azure Data Factory is often praised on G2 for its low-code/no-code approach, scalability, and strong integration with the Azure ecosystem, but reviewers also point out a few areas for improvement.

Some reviewers mention that troubleshooting and optimization work best with proactive monitoring, as detailed logging and community guidance can help teams quickly identify and refine pipeline performance.

Others share that complex transformations or multi-join data flows benefit from additional tuning, and leveraging Azure’s documentation and community resources enhances overall efficiency.

Based on G2 reviews, Azure Data Factory is a strong choice for organizations operating within the Microsoft ecosystem or managing hybrid cloud environments. It’s especially well-suited for teams that want scalable orchestration, strong integration with Azure services, and flexible cost control through a pay-as-you-go model.

"The ease of use and the UI are the best among all of its competitors. The UI is very easy, and you can create a data pipeline with a few clicks of buttons. The workflow allows you to perform data transformation, which is again a drag-and-drop feature that allows new users to use it easily."

- Azure Data Factory Review, Martand S.

"I am happy to use ADF. ADF just needs to add more connectors with other third-party data providers. Also, logging can be improved further."

- Azure Data Factory Review, Rajesh Y.

Azure Data Factory integrates tightly with SQL Server and other Microsoft products, making it a strong choice for enterprises on Azure. SnapLogic also supports SQL-based pipelines with robust connectors for legacy and cloud databases.

While the platforms here are commercial, Databricks supports open-source frameworks like Apache Spark and Delta Lake, giving teams flexibility with data pipelines. SnapLogic also offers pre-built Snaps that connect easily with open-source stacks.

BigQuery scales seamlessly for analytics workloads, while Databricks is designed for compute-heavy data engineering jobs. SnapLogic handles high-throughput automation pipelines, making all three leaders in scalability.

Workato is praised for secure integrations with pre-built connectors, while SnapLogic excels at automating ETL workflows across enterprise systems. Azure Data Factory offers strong integration within the Microsoft ecosystem.

BigQuery is built for cloud-native analytics with pay-as-you-go flexibility. Azure Data Factory offers native integration with Azure cloud services, while Databricks provides multi-cloud support across AWS, Azure, and GCP.

Domo appeals to small businesses and non-technical teams with its self-service dashboards and automodeling. Workato also suits SMBs by offering low-code integrations without requiring large data engineering teams.

Azure Data Factory is widely used for secure, large-scale migrations, especially from SQL Server to the cloud. Databricks also supports migrations by transforming and validating data during the transition.

On G2 and similar platforms, BigQuery is consistently praised for speed and simplicity, while Databricks earns strong feedback for unified engineering and analytics. Workato is often highlighted for ease of integration and automation.

Workato is ideal for SaaS companies because of its pre-built connectors for apps like Salesforce and Slack. SnapLogic also enables SaaS integrations at scale, connecting CRMs, ERPs, and analytics tools.

Domo offers strong value for startups seeking fast insights without heavy data engineering. Workato provides affordable automation with scalable plans, helping startups connect systems quickly.

Workato is designed with enterprise-grade data security, including role-based access and compliance. Databricks adds governance features like Unity Catalog for auditing, while SnapLogic secures pipelines with encryption and access controls.

Databricks is widely regarded as the leader for big data ETL thanks to its Spark-based processing and embedded analytics. BigQuery complements this by delivering real-time, SQL-driven analytics across massive datasets.

My analysis allowed me to list intricate and crucial factors like performance optimization, low latency, cloud storage, and integration with CI/CD that are primary features of an ETL tool for businesses. Before considering different ETL platforms, note your data's scale, developer bandwidth, data engineering workflows, and data maturity to ensure you pick the best tool and optimize your ROI. If you eventually struggle or get confused, refer back to this list for inspiration.

Optimize your data ingestion and cleansing processes in 2025, and check out my colleague's analysis of the best data extraction software to invest in the right plan.

Shreya Mattoo is a former Content Marketing Specialist at G2. She completed her Bachelor's in Computer Applications and is now pursuing a Master's in Strategy and Leadership from Deakin University. She also holds an Advance Diploma in Business Analytics from NSDC. Her expertise lies in developing content around Augmented Reality, Virtual Reality, Artificial Intelligence, Machine Learning, Peer Review Code, and Development Software. She wants to spread awareness of self-assist technologies in the tech community. When not working, she is either jamming out to rock music, reading crime fiction, or channeling her inner chef in the kitchen.
