June 7, 2025

by Soundarya Jayaraman / June 7, 2025

I didn’t think I needed yet another AI chatbot until Grok popped up on my Twitter, ahem, X feed, with Elon Musk’s name stamped all over it. A chatbot with a sense of humor? That’s how it was being pitched, and I’ll admit, I was skeptical, but intrigued.

I’ve relied on ChatGPT for everything from outlining articles to naming projects, so I wasn’t sure Grok had anything new to offer. But curiosity won.

So I decided to put the two head-to-head: Grok vs ChatGPT. Same prompts, real tasks, zero fluff. To be honest, this wasn’t my first AI showdown. I’ve tested other chatbots like Perplexity, DeepSeek, and Gemini with nearly identical prompts.

But Grok felt different right away. Not just in tone, but in how it responded, joked, and occasionally dodged the point entirely.

Overall, ChatGPT was the all-purpose powerhouse for polished output and structured tasks in my tests, while Grok stood out for speed, analytical insights, and real-time takes, especially when I wanted snappy summaries or casual content.

Here’s what happened when I let both AI chatbots loose on my workflow.

Here’s a quick feature comparison of both AI models.

| Feature | ChatGPT | Grok |
|---|---|---|
| G2 rating | 4.7/5 | 4.4/5 |
| AI models | Free: GPT-4o Mini and limited access to GPT‑4o and o3‑mini, GPT-4.1 mini | Free: Limited access to Grok 3 model and Aurora image model, thinking and DeepSearch, and DeeperSearch |
| Best for | Versatile daily use, writing, coding, and image generation; Best general-purpose AI chatbot | Edgy takes, meme-like tone, casual content generation |
| Creative writing and conversational ability | Strong, can mimic tones and styles well | Gets witty, sarcastic tone better but less consistent. Works well for real-time data on X. |
| Image generation, recognition, and analysis | Excellent image generation with the GPT-4o model and great image analysis capabilities | Decent but not as good as ChatGPT |
| Real-time web access | Available via SearchGPT | Available. Pulls real-time data from the web and X. |
| Coding and debugging | One of the best AI code generators | Good but not as robust as ChatGPT |
| Pricing | ChatGPT Plus: $20/month; ChatGPT Teams: $25/user/month; ChatGPT Pro: $200/month | SuperGrok: $30/month or $300/year |

Note: Both OpenAI and xAI frequently roll out new updates to these AI chatbots. The details below reflect the most current capabilities as of June 2025 but may change over time.

Before we jump into the hands-on comparisons, it's worth zooming out. On the surface, Grok and ChatGPT are two of the most advanced, talked-about AI assistants today, backed by tech titans Elon Musk and Sam Altman, respectively.

Musk, once a co-founder of OpenAI, launched xAI and Grok after openly criticizing OpenAI’s closed approach under Altman. That underlying rivalry shows up in the tools themselves: Grok is fast, unfiltered, and a little chaotic. ChatGPT is structured, safe, and built for scale.

So when you compare the two, you’re not just evaluating capabilities; you’re weighing two starkly different visions for where AI is headed.

Now, this is where it gets interesting. Here are the key differences between Grok and ChatGPT:

Grok and ChatGPT may have different vibes, but under the hood, they are more alike than you’d think. Beyond the tone and branding, both are capable, multi-modal AI tools that can tackle nearly any digital task. Here’s where they overlap:

Capabilities on paper are great, but I wanted to see how they hold up in practice. That’s why I ran both through 10 hands-on, everyday use cases.

To keep things structured, I put both chatbots through a range of tasks across four key areas:

I kept it simple and unbiased: each bot received the exact same prompt, word for word. There were no custom instructions, rewrites, or model-specific tweaking. Find my prompts here! I’ve also used the same prompts to test other chatbots like Gemini, Perplexity, and DeepSeek, so I had a solid benchmark going in.

I graded their responses based on four core criteria:

To round out the comparison, I also cross-checked my findings with G2 user reviews. Grok doesn’t have enough reviews on G2 yet, but I did look at how ChatGPT is rated and described by users, just to see how my experience aligned with broader feedback.

Disclaimer: AI responses may vary based on phrasing, session history, and system updates for the same prompts. These results reflect the models' capabilities at the time of testing.

Now for the fun part, seeing how Grok and ChatGPT actually performed. For each task, I’ll walk you through three key takeaways:

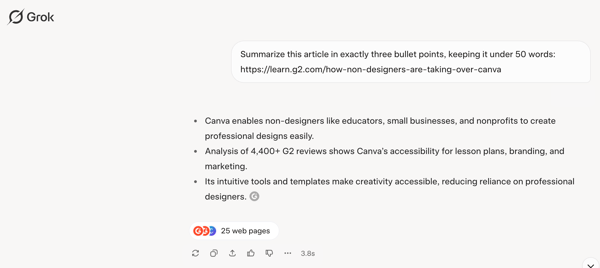

For this test, I asked both Grok and ChatGPT to distill a G2 article into exactly three bullet points under 50 words.

Right away, Grok surprised me, in a good way. It stuck to the word limit, actually pulled the exact number of G2 reviews mentioned in the article (4,400+), and delivered a crisp, well-scoped summary. No fluff, no overexplaining, just tight, relevant bullets that respected the constraints I set. Its 25-page source tab did confuse me a bit at first, though.

Grok's response to the summarization prompt

ChatGPT, on the other hand, went over the word limit. The summary was more nuanced and touched on pros and cons, which is great for depth, but not what I asked for. It read more like a full article excerpt than a compact summary, which defeats the purpose when you’re aiming for brevity and precision.

ChatGPT's response to the summarization prompt

Between the two, Grok nailed the format and showed sharper compliance with the task. ChatGPT’s response was thoughtful, but if I’m grading on following instructions and information fidelity, Grok wins this round.

Winner: Grok
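If you want to check a constraint like "exactly three bullet points under 50 words" yourself rather than eyeballing it, a minimal sketch in Python is below. The function name `check_summary` and the sample text are hypothetical, and it assumes the 50-word limit applies to the summary as a whole rather than to each bullet.

```python
def check_summary(text: str, bullets: int = 3, max_words: int = 50) -> dict:
    """Count bullet lines and total words in a bulleted summary."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    # Treat lines starting with a common bullet marker as bullet points.
    bullet_lines = [ln for ln in lines if ln.startswith(("-", "*", "•"))]
    # Strip the marker before counting so it is not counted as a word.
    words = sum(len(ln.lstrip("-*• ").split()) for ln in bullet_lines)
    return {
        "bullets": len(bullet_lines),
        "words": words,
        # Assumption: "under 50 words" means strictly fewer than 50 in total.
        "ok": len(bullet_lines) == bullets and words < max_words,
    }


# Hypothetical summary text, not output from either chatbot.
sample = "- Grok is fast\n- ChatGPT is polished\n- Both are useful"
print(check_summary(sample))
```

Pasting a chatbot's summary into `sample` gives a quick pass or fail on the format, separate from any judgment about the quality of the content.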

For this test, I asked Grok and ChatGPT to create a full brand kit for a fictional product. One prompt, multiple assets: product description, tagline, social posts, email copy, and short video scripts. The goal? See how well each AI could deliver a cohesive, campaign-ready content pack in a single go.

Grok’s output was clean, consistent, and surprisingly brand-ready. It nailed the eco-friendly, adventure-focused tone across every piece, from a punchy tagline to platform-specific language in the Instagram and X posts.

Grok's response to the content creation prompt

The TikTok and YouTube scripts followed a clear visual arc and were visually paced for actual production. The emoji use felt natural (especially in the social copy), and the email copy was tight without sounding robotic. It didn’t just write marketing assets; it wrote like it understood the product’s persona.

Grok's response to the content creation prompt

ChatGPT also absolutely held its ground here. The tagline “Power your phone, power the planet” was sharp, punchy, and probably the strongest line either bot came up with. The copy was polished, clear, and felt like something you could use immediately.

ChatGPT's response to the content creation prompt

Overall? I’d call this one a tie. Grok brought personality and visual storytelling; ChatGPT brought structure, clarity, and a headline-worthy hook. Both could realistically power a real marketing campaign with minimal tweaks.

Winner: Split verdict; Both AI assistants produced strong marketing materials with on-brand tone and format consistency.

I wanted to test how well each AI could tell a story, specifically, a 300-word sci-fi scene built around a set of required elements. Storytelling pushes creativity, tone, pacing, and emotional payoff, and both ChatGPT and Grok took very different paths to get there.

Grok’s story leaned cinematic, with atmospheric descriptions and a gradual build-up that created a real sense of isolation and tension. There was a clear arc, and the emotional payoff landed well. I liked how Grok maintained a steady pace, keeping the reader grounded. Reading the story felt poetic and introspective, almost like a sci-fi short film.

Grok's response to my creative writing prompt

ChatGPT’s version, titled “Whispers of the Wanderer” (bonus points for giving it a title without being prompted), felt sharper and more dialogue-driven. The tension was more immediate, the pacing tighter, and the twist was delivered with a psychological punch.

The ending was haunting and layered. ChatGPT also added more environmental distortion and glitchy visuals, which gave the story a more surreal, mind-bending feel.

ChatGPT's story "Whispers of the Wanderer" for the creative writing task

So, who did it better? Honestly, it’s close. Grok wins on mood and pacing — it reads like a thoughtful, slow-burn character piece. ChatGPT wins on structure and cinematic impact, with a stronger climax and tighter prose. Plus, that unprompted title was a nice storytelling instinct.

If I had to choose? I'd say ChatGPT edges ahead here for its more dramatic delivery and polished structure. But it’s a narrow win since both stories were genuinely enjoyable and well-executed in their own right.

Winner: ChatGPT

Users rate ChatGPT 8.8 for generating engaging, imaginative content, reinforcing its edge in creative writing and storytelling.

Want more? Explore the other best AI writers available in the market.

For the coding test, I wanted to see how these AIs could help someone like me with coding. I’m not a developer, but I love building little tools for personal or professional use. So I asked both Grok and ChatGPT to create a basic password generator.
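For readers curious what a basic password generator involves, here is a minimal sketch in Python. It is not the code either chatbot produced (their versions included an interface and a copy-to-clipboard button). The function name `generate_password` and its parameters are assumptions made for illustration.

```python
import secrets
import string


def generate_password(length: int = 16, use_symbols: bool = True) -> str:
    """Return a random password made of letters, digits, and optional symbols."""
    if length < 4:
        raise ValueError("length must be at least 4")
    alphabet = string.ascii_letters + string.digits
    if use_symbols:
        alphabet += string.punctuation
    # secrets.choice draws from the operating system's secure random source,
    # which is preferable to the random module for passwords.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

The core logic is small, which is why the differences in this test came down to the surrounding interface and whether features like clipboard copy worked on the first run.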

ChatGPT delivered flawless code. I ran it straight in a compiler — no edits, no tweaks. The interface was clean, the password generation worked perfectly, and the copy-to-clipboard button did exactly what it was supposed to. If I were shipping this for real, I wouldn’t need to change a thing. It felt truly plug-and-play.

ChatGPT's code for a password generator

Grok, on the other hand, created a nicely styled generator with clear code and even a preview interface, which is a great touch for a visual user like me. But there was one problem: the clipboard copy feature didn’t work out of the box.

Grok's code for a password generator

I have seen this same error in code from other AI chatbots, such as DeepSeek and Perplexity. Here's where it got interesting: Grok’s preview console actually spotted the error and surfaced a “Fix This” button. I clicked it, and just like that, it resolved the issue. No digging through code. No second prompt. That level of built-in debugging is something I haven’t seen in other AI tools, and honestly? It blew me away.

Grok fixing a bug in its own code

So who won? ChatGPT still takes the win here for clean, production-ready code that worked immediately. But Grok gets serious points for user-friendliness, especially for people like me who aren’t technical. That live “fix-it” feature felt like AI actually partnering with me, not just coding for me.

Winner: ChatGPT

Users rate ChatGPT 8.7/10 for code generation, accuracy, and overall code quality, making it the preferred choice for AI-assisted coding.

It is also a top-rated AI coding assistant on G2.

Want more? Explore the other best AI coding assistants, tried and tested by my colleague Sudipto Paul.

Next up was image generation, and this one really showed where the two models stand when it comes to visual creativity and execution.

I asked both ChatGPT and Grok to generate a professional stock photo of a small business owner inside a cozy boutique, with a few key details. Now, stock-style images are notoriously hard to get right. They need to look polished, natural, and believable, not staged or robotic.

ChatGPT absolutely delivered. The result looked like a photo straight from a premium stock site. There was great composition, realistic posture, cozy ambiance, and beautifully styled lighting. The subject felt natural, and everything in the frame aligned with the visual I had in mind. With GPT-4o powering its image generation, it’s no surprise it came through this strongly.

Image generated with ChatGPT

Grok’s images were okay, but not quite there. They captured the overall theme, but the execution lacked polish. The lighting didn’t feel as warm or immersive, and both images had one noticeable issue: the hands looked awkward and slightly off. It’s a subtle detail, but one that really pulls you out of the realism. The backgrounds also felt flatter and less styled than ChatGPT’s version.

Images generated with Grok

So while Grok managed to hit the general prompt, ChatGPT nailed the vibe, the details, and the final output. It wasn’t even close this time — GPT-4o just has a serious edge when it comes to realistic, styled visuals.

Winner: ChatGPT

ChatGPT and Grok aren’t the only cool AI image generators in the market. Read our review of the best free AI image generators, from Adobe Firefly and Canva to Microsoft Designer and Recraft.

The next test was image analysis, and I threw two very different visuals at both bots—a clean, data-packed infographic and a handwritten note featuring the full poem “Hope is the thing with feathers” by Emily Dickinson.

Honestly? Both Grok and ChatGPT did a great job here. They transcribed the poem flawlessly and identified it without any confusion. Grok kept things focused and factual, just like I asked. ChatGPT, meanwhile, added a little personality. It described the handwriting style, mentioned the slightly crumpled paper, and even framed it like a sweet journal entry someone had lovingly copied. Not necessary, but definitely charming.

Grok's and ChatGPT's response to my handwritten image analysis prompt

When it came to the infographic, both tools pulled out the six main data points, highlighted key stats, and gave a clear summary. Grok went the extra step with trend analysis and conclusions, pointing out insights like departmental disparities in AI adoption. ChatGPT’s version was a bit more compact but still hit all the important points.

Grok's and ChatGPT's response to my image analysis prompt

So for this task? I’m calling it a tie. If you want clean, straight-to-the-point analysis, Grok nails it. If you want a bit of interpretive flair on top of that, ChatGPT has you covered.

Winner: Split verdict; Both transcribed the handwritten note and summarized the infographic accurately.

For this round, I wanted to test how well each AI could handle dense, academic content, so I dropped in a PDF of Einstein’s “On the Electrodynamics of Moving Bodies” and asked both to boil it down into five concise bullet points under 100 words.

Grok followed the brief exactly. It delivered a clear, sharp summary with clean formatting and stayed well within the word limit. The points were accurate and neatly aligned with the core principles of special relativity.

Grok's response to the file analysis task

ChatGPT, on the other hand, went slightly over the word count, something I’ve started to notice as a bit of a pattern. That said, its summary was just as strong and arguably a bit richer in nuance. It included an extra concept — Relativity of Simultaneity — that Grok didn’t mention explicitly, and offered a bit more interpretive context on Einstein’s contributions to modern physics.

ChatGPT's response to the file analysis task

So, who came out ahead? If you value precision and instruction-following, Grok wins. It respected the constraint and still covered the fundamentals. But if you don’t mind a few extra words in exchange for depth, ChatGPT pulls slightly ahead with its broader framing of the theory’s implications. I’d call this one for Grok on brevity.

Winner: Grok

Both ChatGPT and Grok truly flexed their analytical muscles on this one. I dropped a simple CSV into each chatbot — just raw Google Trends data showing daily U.S. search interest for “ChatGPT” over three months — and let them go to work. And honestly? They both delivered.

Both tools broke down trends clearly and generated charts that made the patterns easy to follow.

But ChatGPT took things a step further. It didn’t just highlight patterns; it layered in statistical depth. I got a full summary table with metrics like mean, median, standard deviation, and percentiles.

It also flagged outliers using a two-standard-deviation rule and, notably, plotted a 7-day moving average on its graph. That line made broader trend shifts much easier to spot and interpret.
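To make those two techniques concrete, here is a minimal sketch of a two-standard-deviation outlier check and a 7-day moving average in plain Python. It is an illustration under stated assumptions, not ChatGPT's actual analysis: the function names are hypothetical, the sample numbers are made up, and it uses the population standard deviation, which may differ from what the chatbot used.

```python
from statistics import mean, median, pstdev


def moving_average(values, window=7):
    """Trailing moving average; the first window-1 points have no value."""
    out = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            out.append(sum(chunk) / window)
    return out


def flag_outliers(values, k=2.0):
    """Return indices of points more than k standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) > k * sigma]


# Hypothetical daily search-interest scores (0-100), not the data from the test.
interest = [60, 62, 61, 63, 59, 40, 38, 61, 64, 62, 100, 60, 41, 39]

print("mean:", round(mean(interest), 2), "median:", median(interest))
print("outlier indices:", flag_outliers(interest))
print("7-day average:", moving_average(interest)[6:])
```

The moving average smooths out the weekday and weekend swings, which is why a trend line like this makes broader shifts easier to read than the raw daily values.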

Grok, to its credit, nailed the storytelling aspect. It gave a more natural-language overview of trends, like weekday vs. weekend behavior and specific peak dates, and even included thoughtful recommendations for further exploration. But it didn’t go as deep into the math.

In short, both were great, but ChatGPT was more rigorous and detailed. If I had to choose one for a stakeholder-ready data brief, it’d be ChatGPT.

Winner: ChatGPT

When it came to real-time web research, I assumed Grok might have an edge, especially with its supposed access to X (formerly Twitter). But to my surprise, it was ChatGPT that clearly came out on top.

I asked both tools to fetch the three most recent and significant AI news stories. Grok delivered news that was over a week old, and while relevant, it didn’t feel current.

Grok's response to the real-time web search task

In contrast, ChatGPT surfaced stories from just the past couple of days, including Grammarly’s $1B funding, an AI breakthrough in cancer drug prediction, and a fresh licensing deal between Amazon and the New York Times. That’s real-time accuracy, not just keyword scraping.

ChatGPT's response to the real-time web search task

The difference? ChatGPT not only pulled newer articles but also framed them with precise summaries and reputable sources like Reuters, The Guardian, and Financial Times. Grok, meanwhile, leaned heavily on the BBC with stories that, while important, weren’t quite as timely or varied.

My take: For up-to-the-minute information, ChatGPT was faster, sharper, and simply more in sync with what’s happening right now.

Winner: ChatGPT

For the final test, I asked ChatGPT and Grok to create an executive-level report on “The AI Chatbot Landscape.” This wasn’t a basic summary; I wanted to see how deeply they could research, organize, and present complex info using their top tools: ChatGPT’s Deep Research and Grok’s DeepSearch. This one was all about strategy, not just speed.

ChatGPT kicked things off with follow-up questions to clarify the goal — already a good sign. The final report was sharp, well-organized, and full of structured insights: clear sections, bullet points, sourced data, and a tone that felt ready for the boardroom. It balanced depth and readability really well.

ChatGPT's response for deep research task

Grok, using both DeepSearch and DeeperSearch, produced two visually rich and wide-ranging reports. It covered everything from chatbot evolution to market trends, platform comparisons, and even future tech like blockchain and autonomous agents. I could see that it pulled in a lot of interesting context, but at times the tone veered too generic, and the flow wasn’t as consistent.

This one goes to ChatGPT. Its ability to ask the right questions, organize information with precision, and write in a business-ready tone gave it the edge.

Winner: ChatGPT

Here’s a table showing which chatbot won the tasks.

| Task | Winner | Why It Won |
|---|---|---|
| Summarization | Grok 🏆 | Grok followed instructions precisely and kept the summary tight and structured under 50 words. |
| Content creation | Split | Both tools produced strong marketing materials with on-brand tone and format consistency. |
| Creative writing | ChatGPT 🏆 | ChatGPT added a compelling title, stronger suspense, and had an interesting twist ending; felt more polished and creative. |
| Coding (password generator) | ChatGPT 🏆 | ChatGPT delivered error-free, production-ready code instantly; Grok needed a fix. |
| Image generation | ChatGPT 🏆 | ChatGPT’s image captured the stock photo aesthetic better; Grok’s hands looked unnatural. |
| Image analysis | Split | Both transcribed the handwritten note and summarized the infographic accurately; no clear edge. |
| File analysis (PDF summary) | Grok 🏆 | Grok stuck to the word count and structured it cleanly; ChatGPT went slightly over. |
| Data analysis (CSV processing and visualization) | ChatGPT 🏆 | ChatGPT included a 7-day moving average and more detailed statistical analysis. |
| Real-time web search | ChatGPT 🏆 | ChatGPT surfaced timely, high-quality news; Grok shared older articles despite its X integration. |
| Deep research (AI chatbot landscape report) | ChatGPT 🏆 | ChatGPT produced a polished, structured, and executive-ready report. |

While there’s no G2 review data available for Grok at this time, ChatGPT has an established presence on the platform with thousands of reviews. Here's what stands out based on user feedback:

ChatGPT scores high in overall experience:

Adoption is strongest in:

Users consistently highlight:

Areas flagged for improvement include:

Grok doesn’t have enough review data on G2 to compare its performance or adoption trends directly. That absence could suggest it’s still gaining traction in enterprise or professional environments. However, based on hands-on testing in this review, Grok shows potential that may not be reflected in formal reviews yet.

Curious how other AI chatbots stack up against ChatGPT? Check out these head-to-head battles:

After putting Grok and ChatGPT through ten real-world tasks, ChatGPT once again proved to be the most reliable all-rounder. With GPT-4o and Deep Research, it consistently delivered structured, polished output across creative and analytical workflows.

And this isn’t just a Grok comparison. Having tested Gemini, DeepSeek, Perplexity, and Claude in similar side-by-sides, the pattern holds: ChatGPT continues to lead in consistency, versatility, and depth. When I need results I can actually use, without rework, it’s still the first tool I reach for.

That said, Grok genuinely surprised me.

From its spot-on summarization and strong file handling to its clever real-time coding error detection, Grok proved it's more than just a novelty AI with Elon Musk branding. Sure, it lacks polish in some areas, but it’s fast, witty, and constantly improving. In the right context, it’s incredibly effective.

Bottom line? Same as always: it’s not about picking one, it’s about building your AI stack.

Use ChatGPT when you need polish and structure. Turn to Grok for speed, attitude, and quick takes. Go with Perplexity when citations matter, or Gemini when you need fast facts and a clean pull from the web.

The best part of the AI space right now? You don’t have to pick a winner. You can just use the right tool for your job.

Still have questions? Get your answers here!

It depends on what you're looking for. Grok 3 is designed to be witty, unfiltered, and deeply integrated with real-time content from X (formerly Twitter). ChatGPT, especially with GPT-4o, is more polished, professional, and feature-rich. In our tests, ChatGPT consistently outperformed Grok in areas like coding, image generation, and research accuracy. But Grok had wins in summarization and real-time preview error handling.

ChatGPT is better for coding. It generates clean, error-free code and explains it well, perfect for developers or beginners. Grok had a nice debugging feature in our test, but it needed a fix before the code was fully functional.

ChatGPT wins here thanks to its strong summarization, structured explanations, and web access via Deep Research. Grok can handle academic content, too, but it's better suited for casual queries and real-time info.

ChatGPT offers far more integrations. With support for custom GPTs, third-party plugins, and file analysis, it’s turning into a full AI platform. Grok 3 is still focused on chat-based interaction and doesn’t currently support custom bots or app integrations. Both provide an API.

Absolutely. You can use ChatGPT via its website or mobile app, and access Grok through grok.com, the X app, or the Grok mobile app. Just note that full access to Grok may require an X Premium+ or SuperGrok subscription.

Yes, both can help you write or refine a resume. ChatGPT tends to offer more structured and professional templates, while Grok might give you a more casual or creative spin. For job applications, ChatGPT is the safer pick.

ChatGPT is more accurate and reliable when it comes to solving math problems or explaining logic. It handles step-by-step breakdowns, especially in STEM tasks. Grok can assist, but it’s not as consistent with formulas or precision-heavy queries.

This one's close. Grok 3 brings humor and personality. It’s great for playful copy and offbeat ideas. ChatGPT offers better structure and tone control, making it the stronger choice for polished writing, storytelling, and professional content.

ChatGPT is more accurate overall. Its responses are better grounded, more consistent, and usually supported by sources (especially when using web browsing). Grok is fast and opinionated, but sometimes sacrifices accuracy for tone or brevity.

Each of these AI chatbots brings something different to the table:

Choose ChatGPT for versatility, depth, and advanced features. Pick Grok 3 for personality, summarization, and fun, real-time commentary. Use Gemini if you're focused on accuracy, research, or you're already deep in the Google ecosystem.

ChatGPT and Grok aren’t the only AI chatbots out there. I’ve tested Claude, Microsoft Copilot, Perplexity, and more to see how they stack up in my best ChatGPT alternatives guide. Check it out!

Soundarya Jayaraman is a Senior SEO Content Specialist at G2, bringing 4 years of B2B SaaS expertise to help buyers make informed software decisions. Specializing in AI technologies and enterprise software solutions, her work includes comprehensive product reviews, competitive analyses, and industry trends. Outside of work, you'll find her painting or reading.
