September 4, 2025

by Soundarya Jayaraman / September 4, 2025

When you work in marketing, you're constantly making decisions that could go either way. Should that CTA say “Get Started” or “Try It Free”? Should the landing page headline focus on value or urgency? I’ve lost count of how many times I thought I knew what would convert better... only to be proven wrong by the data.

That’s why the best A/B testing tools aren’t just nice to have, they’re essential. Whether you’re tweaking headlines, testing button colors, or experimenting with entire page layouts, these tools take the guesswork out of optimization.

And if you're anything like me, you're juggling traffic goals, conversion targets, campaign deadlines, and maybe a dash of impostor syndrome for flavor.

In this list, I’m sharing some of the best A/B testing tools I’ve come across, backed by real user experiences shared on G2, that help marketers, growth teams, and startup founders test smarter, not harder. Some are beginner-friendly. Some are built for scale. But all of them have one thing in common: they make decisions less risky and results more real.

*These are the top-rated products in the A/B testing tool category, according to G2's Spring 2025 Grid Reports. Some also offer a free plan. I’ve also added the starting price of their paid pricing to make comparisons easier for you.

Before I started using A/B testing tools, I used to rely on guesswork, tweaking headlines, changing button colors, or rewriting CTAs just hoping they’d perform better. But hope isn’t a strategy. That’s where A/B testing tools completely shifted how I approached optimization.

At its core, an A/B testing tool lets you compare two (or more) versions of a webpage, email, CTAs, or element to see which one performs better. You create a variation, set a goal (like clicks, sign-ups, or revenue), and the tool splits your traffic between the original and the test version. Then it shows you, with real data, which one actually works. It’s like having a marketing crystal ball, except it's backed by numbers, not gut feelings.
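If you're curious what "splitting traffic" can look like under the hood, here is a minimal sketch. It assumes a hash-based 50/50 split, which is one common approach but not necessarily how any tool on this list does it. The function names `assign_variant` and `conversion_rate`, the visitor IDs, and the experiment name are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variation")) -> str:
    """Deterministically map a visitor to one variant with an even split."""
    # Hashing the experiment name together with the visitor ID keeps the
    # assignment stable across visits and independent between experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal (0.0 when there is no traffic)."""
    return conversions / visitors if visitors else 0.0


if __name__ == "__main__":
    # Hypothetical visitor and experiment names, for illustration only.
    print(assign_variant("visitor-123", "cta-copy"))
    print(conversion_rate(30, 1000))
```

Because the assignment depends only on the visitor ID and the experiment name, the same person sees the same version on every visit, which keeps the comparison clean.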

These tools usually handle everything behind the scenes, from splitting traffic to tracking behavior and running the numbers. Some offer drag-and-drop editors so you can make changes without coding. Others go deeper with features like audience targeting, heatmaps, or detailed analytics. The goal is simple. Make decisions based on what works, not what you hope will work.
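To make "running the numbers" a little more concrete, here is a rough sketch of a two-proportion z-test, a textbook way to check whether a difference in conversion rates is likely to be more than noise. This is an assumption on my part for illustration. Each tool uses its own statistical engine, and the function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (e.g. zero conversions everywhere): nothing to test.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 100 of 1,000 visitors convert on A, 150 of 1,000 on B.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap between the two versions is unlikely to be a fluke, which is the kind of signal a tool's "winner" badge is summarizing.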

I started by shortlisting the best A/B testing tools according to tech reviews on G2 and exploring each platform’s features, flexibility, and ease of use.

My focus was on how well these tools support key needs for marketers, like running experiments without relying heavily on developers, tracking conversion goals accurately, segmenting audiences, and integrating with platforms like Google Analytics, HubSpot, or Shopify.

To dig deeper, I used AI to analyze G2 reviews and surface patterns that kept showing up. I looked for common frustrations, standout features users kept praising, and areas where certain tools clearly delivered more value than others—whether it was through advanced targeting, intuitive dashboards, or speed of launching tests.

Note: Some of these tools offer free trials or demos, but I didn’t get hands-on access to every single one. In those cases, I leaned on the experience of other marketers or users who’ve used them directly and cross-checked their feedback with verified G2 reviews and my own research. The screenshots you’ll see in this article are a mix of images captured during my exploration, along with visuals sourced from the tools’ G2 profiles.

There’s no one-size-fits-all when it comes to A/B testing platforms. Here’s what I looked for to find tools that actually support real-world experimentation across teams.

After evaluating more than 15 A/B testing tools, I narrowed the list down to seven that truly stood out. These tools offer a strong mix of functionality, efficiency, and reliability.

While none of them are perfect, each one brings something worthwhile to the table, whether it's ease of use, advanced features, or the ability to scale with different team needs.

According to G2 Data, the average user adoption rate for A/B testing tools is 66%, with users seeing ROI from the tool in 9 months. These tools are most used by small businesses (43%), followed by mid-market companies (38%) and enterprises (19%).

The list below contains genuine user reviews from the best A/B testing tools. To be included in this category, a solution must:

*This data was pulled from G2 in 2025. Some reviews may have been edited for clarity.

I’ve known VWO as one of the more popular A/B testing tools for improving user experience, and it’s one of the top-rated A/B testing tools on G2, so I was curious to see how it holds up in a modern optimization workflow.

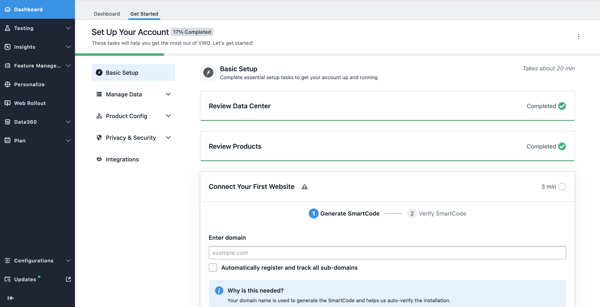

I gave it a spin during its 30-day free trial, and right off the bat, the onboarding felt thoughtful and guided. The "Get Started" dashboard walked me through setup with clean progress indicators and step-by-step tasks.

Connecting a domain to generate the SmartCode was straightforward, and I appreciated the option to automatically track subdomains. For someone without a dev background, this made installation a lot less intimidating.

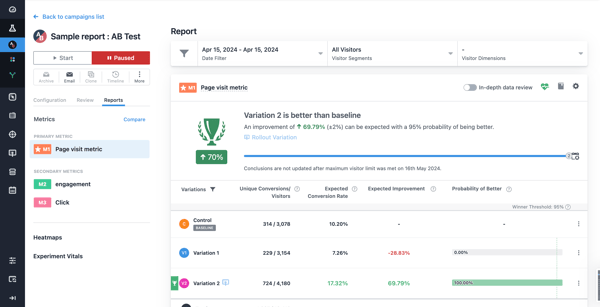

Where VWO really started to shine was in the testing interface. Setting up A/B tests felt intuitive, and even the sample report made the experience feel real. The reporting dashboard was clean and detailed, highlighting the winning variation with metrics like conversion uplift, probability of outperforming the baseline, and segmented visitor data. I saw similar sentiment being shared by other users on G2 reviews, too.
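For a feel of what metrics like "conversion uplift" and "probability of outperforming the baseline" mean, here is a small sketch. It assumes a simple Bayesian model (a Beta posterior with a uniform prior, estimated by random sampling). This is not VWO's actual statistics engine, and the function names and numbers are hypothetical.

```python
import random


def relative_uplift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Relative change in conversion rate of the variation (b) versus the baseline (a)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    return (rate_b - rate_a) / rate_a


def probability_to_beat_baseline(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                 draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(variation rate > baseline rate) by sampling from Beta posteriors."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    wins = 0
    for _ in range(draws):
        # Beta(1 + conversions, 1 + non-conversions) is the posterior
        # for a conversion rate under a uniform prior.
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Made-up numbers: baseline converts 100 of 1,000, variation 150 of 1,000.
    print(f"uplift: {relative_uplift(100, 1000, 150, 1000):.0%}")
    print(f"chance to beat baseline: {probability_to_beat_baseline(100, 1000, 150, 1000):.3f}")
```

The uplift tells you how big the improvement looks, while the probability tells you how much to trust it. A large uplift on very little traffic can still come with a low probability.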

There’s a lot more to like in my view. VWO combines A/B testing with features like heatmaps, session recordings, and funnel analysis, all under one roof. This makes it a solid choice for teams that want more than just simple split tests. It’s especially useful if you’re managing conversion rate optimization at scale or juggling multiple testing goals across landing pages, product features, or campaigns.

One thing I noticed is that with all the power VWO offers, there’s naturally a bit of a learning curve. It’s not overwhelming, but there’s a lot happening on the platform. During my trial, I had to click around a few times to get familiar with the flow. Once I got the hang of it, the setup and navigation felt much smoother. This adjustment period is something echoed by G2 reviews, too, with other users mentioning they needed time to get comfortable at first.

The other thing to keep in mind is pricing. VWO includes a wide range of features like testing, heatmaps, and behavioral insights, which make it a strong all-in-one platform. But a couple of users on G2 mentioned that for smaller teams or those just starting with experimentation, the cost might feel a little high. If you’re running consistent tests and need deeper insights, though, the value is absolutely there. In fact, VWO is considered the leading A/B testing service for e-commerce websites and brands.

So, if you’re in marketing, product, UX, or even CRO at a mid-sized or enterprise company, I'd say VWO is definitely worth a closer look. And with a 30-day free trial, there's no harm in seeing how it fits into your workflow.

"VWO allows me to A/B test messaging and imagery on my website, to see which experiments are more likely to lead to inbound conversions. For us, conversions are leads generated, content downloaded, videos viewed, and deeper engagement with the site.

VWO is simple to implement and has strong customer support. I use the platform weekly, and the calls with our CSM are productive. Integrations with Google Analytics and 6sense were straightforward, but then using 6sense data as variables for personalization is not quite as easy. The Heatmaps and Clickmaps features in VWO Insights are very helpful for making decisions about the website layout and design."

- VWO Testing Review, Matthew G, Digital Demand Director

"Getting used to the tool was a bit of a learning curve, but this was my first experience in using a third party for creating AB tests, so I imagine it would be a similar experience with any other competitor. However, support was easy to reach and helped to answer any questions or doubts."

- VWO Testing Review, Tay A, Senior UX Analyst

Explore the best marketing analytics tools to make better data-based decisions for your marketing campaigns.

Bloomreach is a newer find for me, but it quickly caught my attention as an AI-powered platform built specifically for e-commerce. It brings together product discovery, content personalization, email, and search into one place, which makes it feel more like a full experience engine than just an A/B testing tool.

What surprised me most was how deeply A/B testing is baked in across the platform. Whether it’s email, SMS, in-app content, ads, or even offline channels, you can test almost anything.

While I didn’t get to run a live test, I took a detailed product tour and spent time digging into how A/B testing works inside the platform.

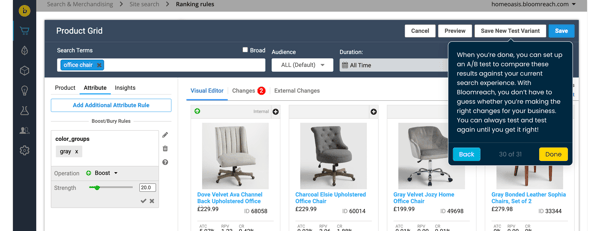

I could immediately tell it was built for teams that want to optimize digital experiences at the structural level. This isn't just a tool for swapping headlines or tweaking CTA colors. Bloomreach lets you test deeper changes, like product ranking logic, personalization rules, site search redirects, and even algorithm-level decisions.

Looking at user feedback on G2, I wasn’t the only one impressed by its flexibility. Many reviewers mention how reliable and feature-rich Bloomreach is, especially when it comes to personalization and customer journey control. People also praise the support team, which aligns with the helpful documentation I came across during my research.

That said, the depth of functionality comes with a trade-off. A few G2 reviewers noted that some features don’t offer as much flexibility out of the box as they’d like. Others pointed out that it’s not the kind of tool you casually poke around in, and that it can be difficult to get started with. It does take a bit of time to get comfortable with everything, but once it clicks, the possibilities are pretty exciting. I'd say it's better suited for teams with a clear testing strategy and the desire to customize experiences across a large catalog.

Overall, Bloomreach feels incredibly powerful for brands that want to go beyond surface-level A/B tests. If you want to fine-tune how products are delivered, personalize journeys, and back those decisions with real experiments, this is the kind of platform you should turn to.

"(Bloomreach has) many ways of campaign personalization, integrations with other systems; the funnels are built to generate conversions in a short time; great support, which also helps in specific and more difficult use cases; great tool for making workarounds."

- Bloomreach Review, Michaela T, Analytics Engineer

"It is not so much of a dislike - however, some advanced features require you to have semi-technical expertise to maximise the gains."

- Bloomreach Review, Sanket M, Digital Marketing Manager

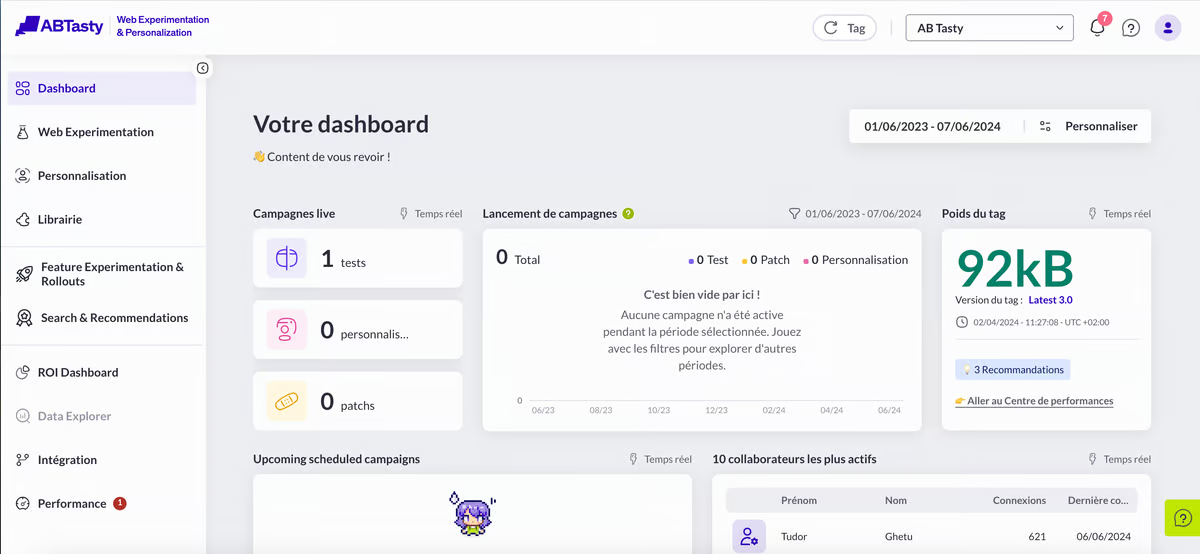

With a name like AB Tasty, I expected a solid A/B testing experience. Based on what I gathered from G2 user reviews and their documentation, the platform seems to live up to that expectation.

What really stood out is how accessible it appears to be. Many reviewers mentioned that even non-technical teams are able to launch experiments quickly without relying too much on developers. The interface is often described as clean, intuitive, and easy to navigate, which makes a big difference when you're trying to move fast.

Between the visual editor, personalization engine, and test setup tools, it looks like the platform is built for fast iteration and idea validation, especially when optimizing site experiences or conversion paths.

There are a few recurring downsides I noticed as well. Some G2 users mentioned that advanced features can take more time to implement or may require technical support.

There was also some feedback on G2 that the tool can feel a bit slow at times. Users note that it’s not a major issue, but it’s something to keep in mind if you are managing a fast-paced testing schedule or trying to roll out changes quickly.

From what I’ve seen, AB Tasty strikes a good balance between being user-friendly and feature-rich. It seems like a strong choice for digital marketing teams that want to run meaningful experiments without getting stuck in complicated setup processes.

If you're looking for a tool that empowers marketers and product managers to take the lead on testing, this one feels like a smart pick. In fact, it's one of the most recommended A/B testing tools for startups and growing teams, along with other tools like VWO Testing.

"The flexibility of the platform and the superb support are excellent. It took me a little while to understand how the wysiwyg worked, but that's also because of the nature of our website and how we've set it up, but when you know, you know. Setting up tests is super easy! Implementation/integration for us was a little tricky, and we ran into some troubles with the reporting, but we were helped along the way in all possible ways until it was solved.

We've set AB Tasty up to test on a variety of (landing) pages and as frequent as we can, so we're using AB Tasty on a weekly basis."

- AB Tasty Review, Linda A, Website Manager and CRO Specialist

"Sometimes the Editor can be slow and depending on the technical structure, a bit painful."

- AB Tasty Review, Thomas C, Senior Consultant, CRO and Web Analytics

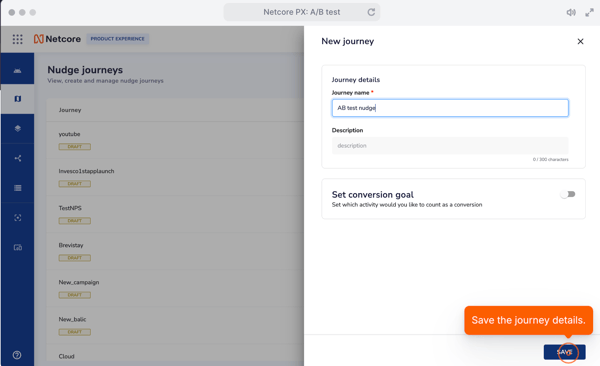

I’ve known about Netcore for a while, but taking a closer look at its A/B testing capabilities gave me a new appreciation for how versatile the platform really is, especially when it comes to orchestrating personalized customer journeys across channels.

From what I gathered in the demo and reviews, it's built right into the journey builder, so testing different variants across email, SMS, in-app nudges, or push notifications is easy to set up and manage.

I walked through the journey builder via an interactive demo that set up an A/B test using different nudge variants, and found the experience pretty intuitive. Choosing between rollout options, whether all at once or in phases, was simple, and it felt like something I could hand off to a marketer without needing constant dev support.

The platform seems to shine most when it comes to accessibility. Multiple G2 reviewers pointed out how smooth their experience has been when launching campaigns, which definitely tracks with what I saw in the demo.

Reviewers also mentioned it helps uncover customer behavior trends, which makes sense given how connected the platform is across channels.

That said, not everything is perfect. I saw a few G2 users mentioning that the platform can feel laggy at times, particularly when working with large datasets or switching between modules. A couple of the G2 reviews I read also mentioned that reporting can be harder to navigate, especially when tracking engagement across multiple channels.

Overall, though, Netcore comes across as a strong contender for teams that want to move quickly and test messaging at scale. It’s built with customer engagement in mind, in my opinion, and it feels like a good fit for CRM teams, growth marketers, and product-led organizations looking to optimize experiences without needing to build custom testing flows from scratch.

If I were running in-app experiments or nudges across channels, I’d seriously consider giving this one a go.

"Netcore Cloud has maximized our productivity on every front. Leveraging it's email and AMP, we've streamlined communication channels seamlessly. The email AMP and A/B testing tools have facilitated precise targeting and optimization. They have provided deep insights, guiding our decision-making process effectively. Netcore Cloud is a game-changer for businesses striving for efficiency and effectiveness in digital engagement."

- Netcore Customer Engagement and Experience Platform Review, Surbhi A, Senior Executive, Digital Marketing

"The thing I dislike about Netcore is that it's a bit difficult to understand at first. And there is no user guide video. If we want to create or use any analytics, we need to connect with the POC. If there are any tutorial videos, it will be better for new users."

- Netcore Customer Engagement and Experience Platform Review, Ganavi K, Online Marketing Executive

There are tons of marketing tools out there that help you build automated workflows, but some are just better than the rest. Explore the best automation tools, reviewed and rated.

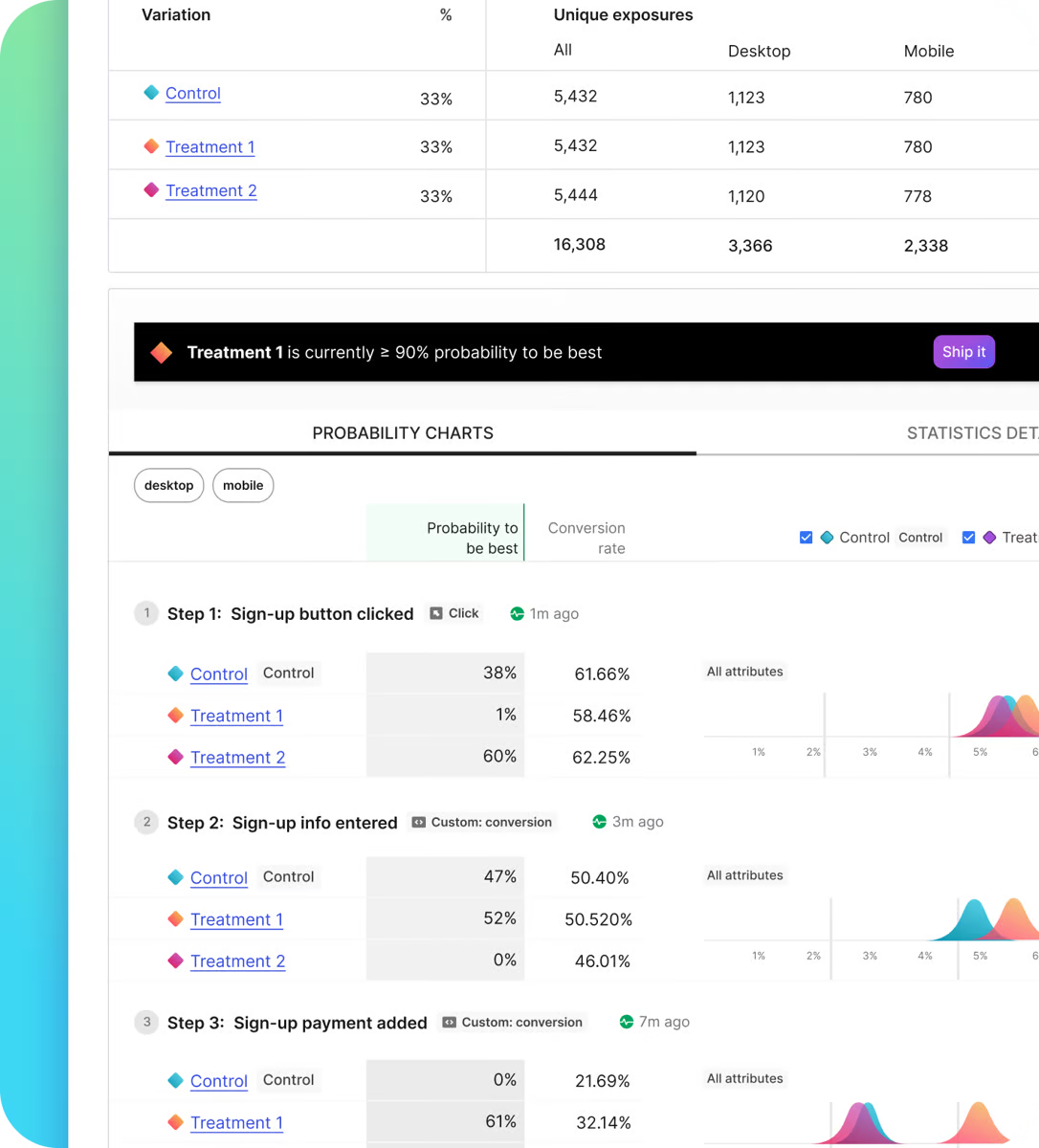

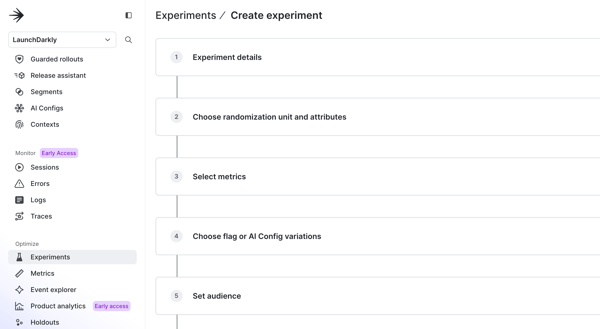

I’ve known LaunchDarkly as one of the best A/B testing tools for app developers. But after looking into it more closely and digging through G2 user reviews, it’s clear that it plays a valuable role in experimentation, just in a different way than most visual or marketer-friendly platforms.

It’s geared more toward development and product teams who want to test, release, and roll back features without redeploying code.

What stood out most to me from the reviews is how widely appreciated LaunchDarkly is for helping teams safely ship new features and run backend experiments.

I could see reviewers consistently talking about using feature flags to control releases and run A/B tests by segmenting users or gradually rolling out new functionality. The platform gives teams control over who sees what and when, which makes it ideal for testing changes in real production environments without risking a full release.
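Here is a minimal sketch of the idea behind a feature flag with segment targeting and a gradual rollout. It is a generic illustration, not LaunchDarkly's SDK or API. The `FeatureFlag` class, its fields, and the flag and segment names are all hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    key: str
    rollout_percent: float = 0.0  # share of eligible users who get the feature, 0 to 100
    allowed_segments: set = field(default_factory=set)  # empty set means everyone is eligible
    enabled: bool = True  # kill switch: False turns the feature off for all users

    def is_on(self, user_id: str, segment: str = "") -> bool:
        """Decide whether this user should see the flagged feature."""
        if not self.enabled:
            return False
        if self.allowed_segments and segment not in self.allowed_segments:
            return False
        # Hash the flag key with the user ID into a stable bucket from 0.00 to 99.99,
        # so raising rollout_percent only ever adds users and never reshuffles them.
        digest = hashlib.sha256(f"{self.key}:{user_id}".encode("utf-8")).hexdigest()
        bucket = (int(digest, 16) % 10000) / 100.0
        return bucket < self.rollout_percent


if __name__ == "__main__":
    # Hypothetical flag: show a new checkout to 10% of users in the "beta" segment.
    flag = FeatureFlag("new-checkout", rollout_percent=10, allowed_segments={"beta"})
    print(flag.is_on("user-42", segment="beta"))
    flag.enabled = False  # roll back instantly, without redeploying code
    print(flag.is_on("user-42", segment="beta"))
```

The rollback at the end is the point: turning the flag off changes what users see without shipping new code.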

The big win here seems to be around flexibility and safety. One user described it as their go-to for selective releases and targeted rollouts. Another mentioned that it helps them run experiments at the infrastructure level, where performance, functionality, or user experience might vary. For me, this means we’re not dragging and dropping elements on a landing page. We’re flipping switches under the hood with precision.

That said, a few G2 reviewers did mention some friction. The interface can get a little overwhelming when you're managing a large number of feature flags, especially across different teams or environments. G2 feedback also shows that the UI update rolled out last year took users some time to adjust to. It wasn’t a dealbreaker, but for teams juggling a complex flag strategy, it might take a bit of effort to get fully comfortable.

Overall, LaunchDarkly feels like the right fit for dev-heavy teams that treat experimentation as part of the release pipeline, in my opinion.

If you're a product manager, engineer, or growth team working closely with code, it's a powerful way to run safe, targeted experiments. Just know that it’s not designed for quick visual tweaks or content-based split tests. It’s more about managing risk and testing logic at scale.

"I like LaunchDarkly's ability to seamlessly manage feature flags and control rollouts, enabling quick, safe deployments and A/B testing without requiring code changes or restarts. Its real-time toggling, comprehensive analytics, and role-based access controls make it invaluable for dynamic feature management and reducing risk during releases."

- LaunchDarkly Review, Togi Kiran, Senior Software Development Engineer

"The new UI that LaunchDarkly recently launched for feature flagging is not something that I like, I had built muscle memory for using the tool hence it has become a little tough for me to use it."

- LaunchDarkly Review, Aravind K, Senior Software Engineer

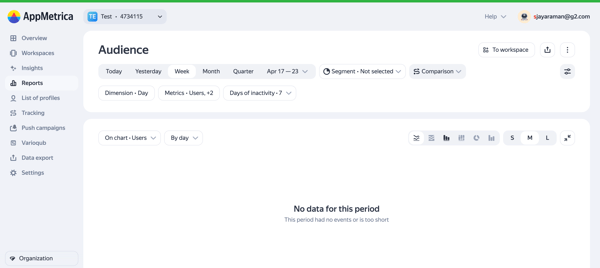

I’ve always thought of AppMetrica as more of a mobile analytics and attribution tool, but after reading through reviews and looking deeper into what it offers, it’s actually capable of much more.

From what I could gather, it’s not a visual A/B testing platform in the traditional sense, but for data-driven product teams, it offers the kind of backend insight you need to understand what’s working and why.

What stood out most to me from user reviews is the ease of integration, the detailed technical documentation, and the ability to slice data quickly with flexible filters.

The A/B testing features are praised for being straightforward, especially when testing mobile app flows or messaging variants. A few users highlighted how valuable it is for monitoring errors, tracking installs, and analyzing in-app events in real time, which makes experimentation feel much more responsive and actionable.

Of course, no tool is perfect. I saw some reviewers note limitations on G2, like inadequate reporting functionalities. A couple of developers expressed that the UI can be slightly clunky at times, especially when working with larger datasets or trying to drill down into specific performance metrics. These aren’t huge issues, but they’re things I'd definitely want to keep an eye on.

On the whole, AppMetrica is considered one of the top A/B testing platforms for optimizing mobile apps. If I were running growth experiments or behavioral testing within a mobile app, it would definitely be on my radar. It’s especially useful for product teams and mobile marketers who care more about what happens under the hood than flashy UI tools.

"I personally like the ease of filtration and A/B test functionality, which are crucial in comparison of different user behaviour while implementing new features. I use it daily for error analytics and usage statistics. It was easy to implement and integrate to our daily work. Customer support is reliable and react to questions fast."

- AppMetrica Review, Anna L, Senior Product Manager

"Being a developer, App Metrica provides limited customization in reports and also in ad tracking limitations."

- AppMetrica Review, Mani M, Developer.

Pair your experiments with the best session replay software to see exactly how users interact with each variation.

I’ve always seen MoEngage as one of those solid, go-to platforms when it comes to customer engagement and automation, especially if you're looking to work across channels like push, email, in-app, and SMS. It’s designed for scale, and it shows.

You get real-time analytics, user segmentation, campaign automation, and the ability to run A/B tests across your entire funnel. What stood out to me is how often reviewers mention how intuitive the platform feels once you’re inside. A lot of people talked about how easy it is to create and segment campaigns, even for more complex workflows, and how quickly they were able to push live experiments with personalized variants.

MoEngage’s A/B testing features really come to life when paired with its segmentation tools, from what I gathered. The platform gives you fast feedback on what’s working.

Reviewers on G2 highlighted how useful it is to test across multiple audience segments without having to set up separate campaigns. And since everything sits on top of a unified customer view, the test results feel tightly connected to actual behavior, not just vanity metrics.

That said, a few G2 reviewers did mention that it can be a little tricky to implement at first, especially if you're comparing it to lighter tools. One G2 user also mentioned pricing being a bit on the higher side, which tracks with the level of capability you’re getting. But overall, complaints were rare, and I'd call these things to be aware of rather than major red flags.

If I were on a CRM or lifecycle marketing team looking to run cross-channel experiments with deep behavioral insights, MoEngage would absolutely be on my shortlist.

"I love that LogicGate is incredibly customizable to meet your organization's specific needs; however, there are also templates and applications to get you started if you aren't sure how to proceed."

- MoEngage Review, Ashleigh G.

"While MoEngage is a powerful platform, there are a few areas that could use improvement. The platform's reporting capabilities, although robust, could benefit from more customization options to better tailor insights to our specific needs. Occasionally, the system can be a bit slow when handling large datasets, which can be frustrating when working under tight deadlines.

Additionally, while the platform is generally intuitive, there is a slight learning curve for new users when navigating more advanced features. However, these issues are relatively minor compared to the overall benefits the platform provides."

- MoEngage Review, Nabeel J, Client Engagement Manager.

Read about the best practices for marketing automation to get the most out of it.

A/B testing is the process of comparing two or more variations of a message, layout, or experience to see which one performs better. It’s how you replace guesswork with real data, whether you're optimizing a subject line, a product page, or a user journey. For marketers, product teams, and growth leads, it’s one of the most effective ways to learn what actually works.

There’s no single “best” tool. It really depends on your team, goals, and how technical you want to get. For example, tools like VWO and AB Tasty are great for marketers who want visual testing options. LaunchDarkly is a better fit for developers and product teams testing feature flags. Platforms like MoEngage, Netcore, and AppMetrica shine when A/B testing is part of broader customer engagement or app analytics strategies.

Yes, many tools offer no-code or low-code setups, especially those built for marketing or growth teams. Platforms like AB Tasty, VWO, and MoEngage make it possible to create and launch tests directly from their dashboards. That said, some tools, especially those focused on backend or feature-level testing, may require developer involvement.

Yes, if you're just getting started or working with a small team, free A/B testing tools can be a great way to dip your toes in. They often come with limitations—like caps on traffic volume or fewer targeting options—but they’re still useful for testing headlines, CTAs, or basic campaign flows. Just keep in mind that as your experimentation strategy matures, you’ll probably outgrow them pretty quickly.

Start with your use case. If you’re testing marketing messages or landing pages, tools like VWO, AB Tasty, or MoEngage might be ideal. For app analytics and user behavior tracking, AppMetrica could make more sense. If you’re on the product or dev side, LaunchDarkly or Netcore offer deeper control through feature flags and server-side experiments. Prioritize tools that fit how your team works, not just what looks shiny on the surface.

The right tool is the one that fits your goals without adding unnecessary complexity.

For SaaS companies, flexibility and scalability are key. Tools like VWO Testing and LaunchDarkly stand out. VWO is ideal for optimizing landing pages, onboarding flows, and conversion funnels without heavy dev work. LaunchDarkly, on the other hand, helps SaaS product teams run feature-level experiments with safe rollouts, making it perfect for testing subscription features, pricing tiers, or backend functionality.

Agencies often need speed and ease of use to run multiple client experiments. AB Tasty is a strong fit here. It’s designed for marketers, with a visual editor and quick setup for web experiments. VWO Testing is another good option if an agency also wants built-in heatmaps and session replays to show clients not just results, but why those results happened.

Marketing teams typically look for tools that minimize dev dependency. VWO Testing, AB Tasty, and MoEngage are frequently favored. VWO and AB Tasty allow marketers to tweak web content directly, while MoEngage lets lifecycle marketers A/B test campaigns across email, push, and in-app messages — all without relying on engineering.

Product managers often need deeper control over experiments tied to user behavior or new feature rollouts. LaunchDarkly is highly rated for feature flagging and backend testing, while AppMetrica gives PMs strong mobile analytics with in-app A/B testing capabilities. For web or funnel-focused PMs, VWO Testing is also a solid choice thanks to its robust reporting and behavioral insights.

If there’s one thing I’ve learned while diving into A/B testing tools, it’s that experimentation isn’t just a feature, it’s a mindset. Whether you’re optimizing landing pages, rolling out new features, or fine-tuning omnichannel campaigns, having the right testing platform in place gives you the power to validate what works before making it permanent. And that peace of mind? It’s invaluable.

Another big takeaway? Your choice of tool should reflect the team running the test. A great feature set doesn’t matter if it’s too complex for the people using it. If your marketers are hands-on, they’ll need clean UIs, drag-and-drop journeys, and fast reporting. If your engineers are doing the testing, they’ll care more about API access, flag control, and integration with CI/CD pipelines. Choosing the right tool means choosing the right workflow.

At the end of the day, A/B testing isn’t about proving who had the better idea. It’s about building better experiences with less risk. And when you pair the right tool with the right team, that’s when experimentation stops feeling like guesswork and starts driving real growth.

Need more help? Explore the best conversion rate optimization tools on G2 to find the right platform for your goals.

Soundarya Jayaraman is a Senior SEO Content Specialist at G2, bringing 4 years of B2B SaaS expertise to help buyers make informed software decisions. Specializing in AI technologies and enterprise software solutions, her work includes comprehensive product reviews, competitive analyses, and industry trends. Outside of work, you'll find her painting or reading.
