AI knows it all — but what happens when it makes it up?

I remember research analysts being the most frustrated group back in November 2022 when ChatGPT exploded onto the tech scene. They were being asked to experiment with and use AI in their workflows, but it didn’t take long for them to encounter a major stumbling block — hallucinations. After all, would you risk your career and credibility over a new technology fad?

While content creators like myself, data scientists, and engineers were thriving with AI adoption, we could only empathize with our research analyst peers as we partnered with them to find new ways to make OpenAI, Gemini, LangChain, and Perplexity cater to their requirements.

But soon, the consensus was clear: AI hallucinations were a problem for knowledge workers everywhere.

Fast forward to 2025. Despite leaps in reasoning models and agentic AI, hallucinations haven’t disappeared. Companies like Anthropic, OpenAI, NVIDIA, and now Amazon continue to push boundaries. Yet the ghost of hallucinations still lingers. Our G2 LinkedIn poll from Q1 of 2025 showed that nearly 75% of professionals had experienced hallucinations, with over half saying it’s happened multiple times. In the AI world, that’s a lifetime ago, and we’re curious whether this has changed.

New developments may promise smarter, faster, and more reliable AI, but the question remains: Are AI LLMs strong enough to prevent hallucinations?

AI hallucinations are instances when a large language model (LLM) generates information that sounds confident and plausible but is factually incorrect, fabricated, or outdated. In other words, it’s when AI “fills in the blanks” instead of admitting it doesn’t know.

As AI models evolve, the way we interact with information is also transforming. We’re witnessing the rise of what our very own Tim Sanders calls the “Answer Economy.” People are shifting from search-based research to an answer-driven way of learning, buying, and working.

But here’s the catch. While AI chatbots often deliver instant, confident responses, they’re sometimes wrong. And despite accuracy concerns, these outputs continue shaping decisions across industries.

That raises a critical question: Are we too quick to accept AI’s answers as truth, especially when the stakes are high?

While AI chatbots are shaking up search and businesses are leaping towards AEO and agentic AI, how strong are their roots when hallucinations haunt?

AI hallucinations can be as trivial as Gemini telling people to eat rocks and put glue on pizza, or as serious as fabricated claims like the ones below.

AI hallucinations are no longer just theoretical risks. They’ve shown up in courtrooms, filings, and compliance reports worldwide throughout 2025. What began as a technical glitch has become a legal and ethical flashpoint for professionals who rely on generative AI without verifying its outputs.

Here’s a timeline of the lawsuits, penalties, and corporate exposures that brought AI hallucinations into the legal spotlight.

There were several other notable AI hallucination mishaps in 2024 involving brands like Air Canada, Zillow, Microsoft, Groq, and McDonald’s.

The takeaway across all these incidents is clear: the liability typically falls on the human using AI without validation.

Hallucinations are exposing new accountability gaps in research, legal, and compliance workflows. For GTM leaders, that lesson extends beyond the courtroom: automation without oversight is a brand risk.

As AI adoption scales across industries, these legal flashpoints serve as a warning. So how are the world’s most-used chatbots actually performing when it comes to accuracy and reliability? Let’s look at what G2 data tells us.

We revisited reviews and compared the four most popular AI chatbots (ChatGPT, Gemini, Claude, and Perplexity) to see whether hallucination concerns are easing or worsening.

Accuracy-related mentions have dropped by more than half since early 2025, but reviewers still flag factual errors in complex topics. ChatGPT remains a top choice for speed and breadth, not for perfect precision.

ChatGPT scorecard based on G2 Reviews:

Overall rating: 4.46

LTR (Likelihood to Recommend): 9.2 / 10

Accuracy Mentions (%): 10.5 (45 of 427 reviews)

Top ChatGPT use cases:

But for some users, the benefits far outweigh the pitfalls. For instance, in industries where speed and efficiency are crucial, ChatGPT is proving to be a game-changer.

Peter Gill, a G2 Icon and freight broker, has embraced AI for industry-specific research. He uses ChatGPT to analyze regional produce trends across the U.S., identifying where seasonal peaks create opportunities for his trucking services. By reducing his weekly research time by up to 80%, AI has become a critical tool in optimizing his business strategy.

“Traditionally, my weekly research could take me over an hour of manual work, scouring data and reports. ChatGPT has slashed this process to just 10-15 minutes. That’s time I can now invest in other critical areas of my business.”

Peter Gill

G2 Icon and Freight Broker

Peter advocates that AI's benefits extend far beyond the logistics sector, proving to be a powerful ally in today's data-driven world.

Accuracy complaints fell from 59 to 22 since early 2025. Gemini wins for productivity and collaboration but still faces trust gaps in fact-based research.

Gemini scorecard based on G2 Reviews:

Overall rating: 4.38

LTR (Likelihood to Recommend): 9.1 / 10

Accuracy Mentions (%): 8.9 (22 of 248 reviews)

We noted several research analysts use Gemini. Some particularly prefer the research mode and use it for academic and market research.

“Daily use, particularly in love with research mode. Gemini’s speed enhances the surfing experience overall, especially for those who use the internet for extensive research and work duties or who multitask.”

Elmoury T.

Research Analyst

Top Gemini use cases:

Source: G2.com Reviews

Claude has the lowest share of accuracy complaints in G2 reviews and the highest ratings of the four chatbots, and its “honesty over hallucination” ethos is favored in regulated and research-intensive sectors.

Claude scorecard based on G2 Reviews:

Overall rating: 4.57

LTR (Likelihood to Recommend): 9.4 / 10

Accuracy Mentions (%): 6.2 (6 of 97 reviews)

Claude earns trust by admitting what it doesn’t know, making it the top pick for users who value accuracy over speculation.

Source: G2.com Reviews

Top Claude use cases:

• Conversational analysis and customer interactions

• Content summarization and knowledge synthesis

• High-stakes or ethical research requiring transparency

Accuracy mentions rose slightly but remain minimal. Perplexity is a research favorite for speed and citation-backed answers.

Perplexity scorecard based on G2 Reviews:

Overall rating: 4.41

LTR (Likelihood to Recommend): 9.0 / 10

Accuracy Mentions (%): 7.8 (8 of 103 reviews)

Users praise its ability to provide comprehensive, context-aware insights. The frequent integration of the latest AI models ensures it remains a step ahead.

Top Perplexity use cases:

Michael N., a G2 reviewer and head of customer intelligence, stated that Perplexity Pro has transformed how he builds knowledge.

“Easiest way of conducting tiny and complex research with proper prompting.”

Vitaliy V.

G2 Icon and Product Marketing Manager

Business leaders and CMOs like Andrea L. are using different AI chatbots to supplement, complement, or complete their research.

Source: G2.com Reviews

Luca Pi, a G2 Icon and CTO at Studio Piccinotti, uses AI to navigate complex market dynamics. His team uses AI to process vast amounts of data from surveys, social media, and customer feedback for sentiment analysis, helping them gauge public opinion and spot emerging trends. AI also streamlines their survey workflows by automating question generation, data collection, and analysis, making their research more efficient.

To translate insights into actionable strategies, Luca relies on predictive analytics to forecast consumer behavior, monitor competitors, and personalize marketing campaigns. His preferred AI tools? Perplexity for research and ChatGPT for managing and refining the data.

While Piccinotti's team also leverages APIs, local models, and other AI wrappers, he says Perplexity and ChatGPT remain unmatched in their effectiveness today.


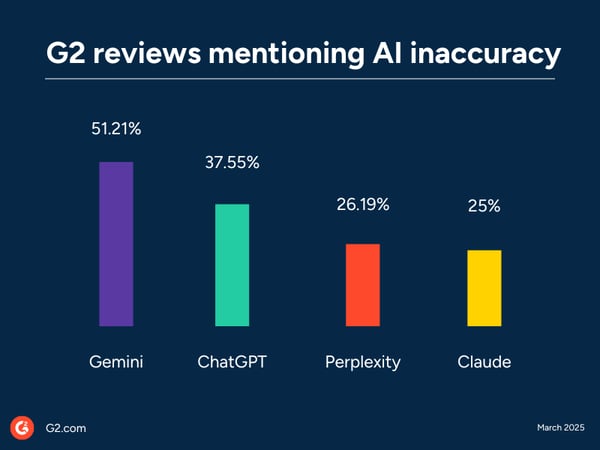

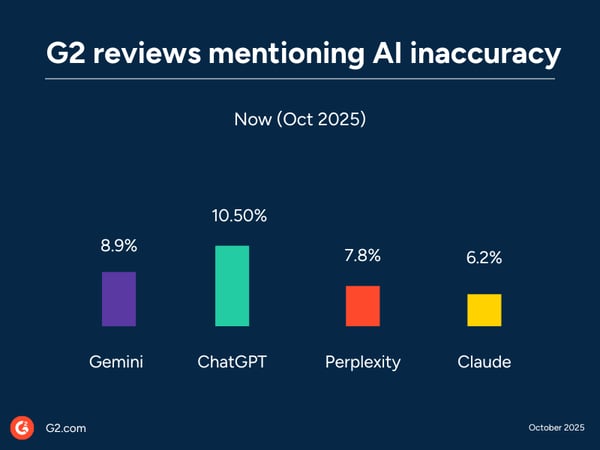

Between March and October 2025, mentions of AI inaccuracy fell sharply across ChatGPT, Gemini, Claude, and Perplexity.

Source: G2 review data from March 2025

On average, reviews mentioning hallucinations dropped from 35% in March 2025 to 8.3% in October 2025, according to G2 review data.

Source: G2 review data as of October 2025

G2 review data signals stronger contextual reasoning and better safeguards.

But even as hallucinations decline, trust still favors models that show their sources, not just their confidence.

| AI chatbot | Accuracy concerns (as of March 2025) | Accuracy mentions (as of October 2025) | Reviews flagging hallucinations |
| --- | --- | --- | --- |
| ChatGPT | 101 | 45/427 | 10.5% |
| Gemini | 59 (33 accuracy + 26 context) | 22/248 | 8.9% |
| Claude | 7 | 6/97 | 6.2% |
| Perplexity | 7 | 8/103 | 7.8% |

Mentions of hallucinations and accuracy errors have declined across most chatbots, showing vendors are tightening contextual reasoning. Yet buyers continue to reward models that show their work and admit uncertainty over those that sound confident but get it wrong.
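For readers who want to check the math, here is a minimal Python sketch that recomputes the October 2025 percentages from the review counts quoted in this article. The variable and function names are ours, and the averaging method is an assumption: the article does not say how the 8.3% average was calculated, but a simple unweighted mean across the four chatbots reproduces it.

```python
# Review counts as of October 2025, copied from the figures in this article:
# (reviews mentioning accuracy issues, total reviews analyzed)
october_counts = {
    "ChatGPT": (45, 427),
    "Gemini": (22, 248),
    "Claude": (6, 97),
    "Perplexity": (8, 103),
}


def mention_rate(mentions: int, total: int) -> float:
    """Share of reviews that mention accuracy issues, as a percentage."""
    return 100 * mentions / total


# Per-chatbot rate, rounded to one decimal place as in the table.
rates = {
    name: round(mention_rate(mentions, total), 1)
    for name, (mentions, total) in october_counts.items()
}

# Assumption: an unweighted mean, where each chatbot counts equally
# regardless of how many reviews it has.
average_rate = sum(
    mention_rate(mentions, total) for mentions, total in october_counts.values()
) / len(october_counts)

for name, rate in rates.items():
    print(f"{name}: {rate}%")
print(f"Unweighted average: {average_rate:.1f}%")
```

Because the review pools differ in size (427 reviews for ChatGPT versus 97 for Claude), a pooled rate across all reviews would come out somewhat higher than the unweighted mean, so it is worth keeping the raw counts in view when comparing tools.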

Here’s a look at other tools professionals are testing to support research and knowledge work.

These watchlist vendors show that hallucination concerns are not limited to chatbots; they spill into collaboration, productivity, and customer service platforms.

It’s still a cautious yay — which is still better than the classic “it depends”.

Despite visible improvements, hallucinations persist. ChatGPT and Gemini continue to drive productivity, but they remain under scrutiny for their factual accuracy. Claude continues to lead in trust scores, while Perplexity earns user loyalty for speed and citation-backed answers.

The signal is clear: Hallucinations may be shrinking in volume, but they’re not going away. Buyers trust AI more when it’s transparent and verifiable — not when it’s fast and confident but wrong.

Q1. What is an AI hallucination?

A chatbot response that sounds plausible but is factually incorrect, fabricated, or outdated.

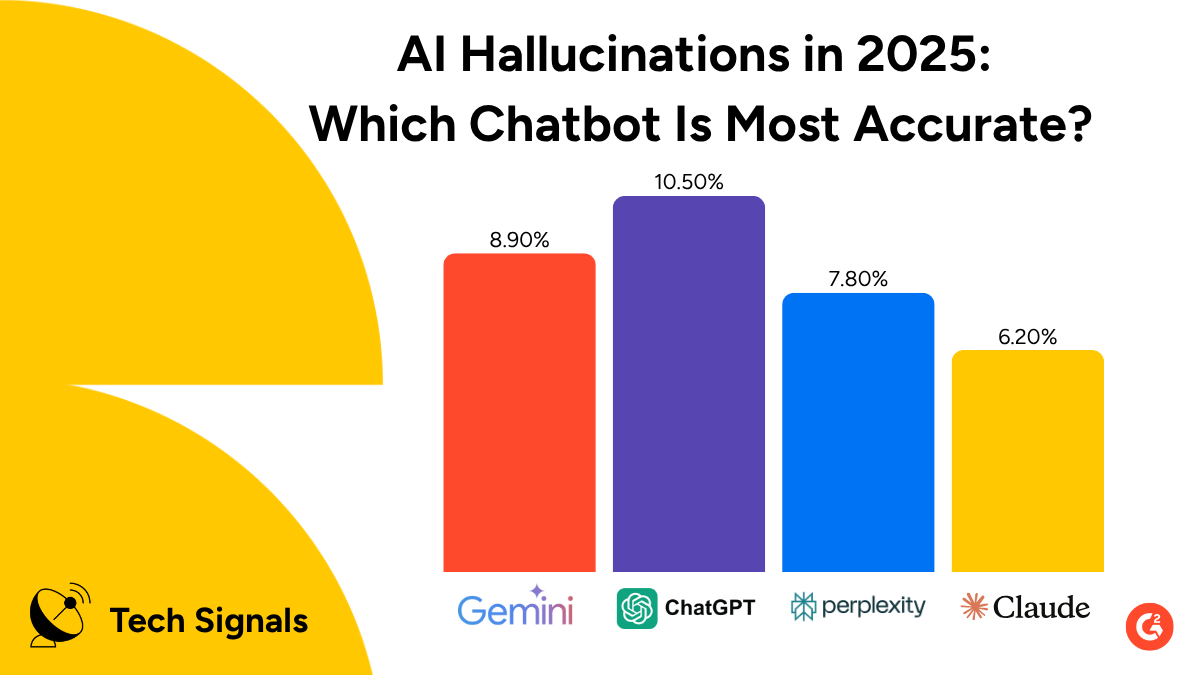

Q2. Which chatbot is most accurate in 2025?

Claude has the fewest accuracy complaints (6.2%), followed by Perplexity (7.8%), Gemini (8.9%), and ChatGPT (10.5%).

Q3. How can businesses prevent AI hallucinations?

Adopt human-in-the-loop checks, use citation-backed tools, and integrate AI into workflows with guardrails.

Q4. Which industries face the most risk?

High-stakes sectors: legal, healthcare, finance — where errors carry regulatory or reputational consequences.

Q5. Is AI reliable enough for research today?

Yes — but cautiously. Productivity gains are clear, but accuracy still demands verification and transparency.
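To make the human-in-the-loop idea from Q3 more concrete, here is a rough Python sketch of a triage step that decides whether a chatbot answer should go to a person before it is used. Everything in it is hypothetical and ours: the `triage_answer` function, the `ReviewDecision` type, and the simple rule of looking for a URL as a stand-in for "has a citation". It is not how any of the tools above work, and a cited link does not prove the answer is correct.

```python
import re
from dataclasses import dataclass

# Hypothetical heuristic: treat any http(s) link as a cited source.
URL_PATTERN = re.compile(r"https?://\S+")


@dataclass
class ReviewDecision:
    needs_human_review: bool
    reason: str


def triage_answer(answer: str, high_stakes: bool = False) -> ReviewDecision:
    """Decide whether a person should verify an AI answer before it is used."""
    citations = URL_PATTERN.findall(answer)
    if high_stakes:
        # Legal, healthcare, and finance work is always verified by a person.
        return ReviewDecision(True, "high-stakes topic: always verify")
    if not citations:
        # Nothing to check the claim against, so a person takes a look.
        return ReviewDecision(True, "no sources cited")
    # Sources exist, but someone should still spot-check them.
    return ReviewDecision(False, f"{len(citations)} source(s) cited; spot-check recommended")


print(triage_answer("The ruling was issued in 2019."))
print(triage_answer("Details are in https://example.com/report"))
```

The point of the sketch is the routing, not the rule itself: unsourced or high-stakes answers default to human review, which mirrors the lesson from the legal incidents above.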


Edited by Supanna Das

Kamaljeet Kalsi is Sr. Editorial Content Specialist at G2. She brings 9 years of content creation, publishing, and marketing expertise to G2’s TechSignals and Industry Insights columns. She loves a good conversation around digital marketing, leadership, strategy, analytics, humanity, and animals. As an avid tea drinker, she believes ‘Chai-tea-latte’ is not an actual beverage and advocates for the same. When she is not busy creating content, you will find her contemplating life and listening to John Mayer.
