You can think of supervised learning as a teacher supervising the entire learning process.

It's one of the most common ways machines learn, and it’s an invaluable tool in the field of artificial intelligence. This learning process is comparable to a student-teacher relationship, although machines aren't as stubborn as humans.

If you've just stepped into the world of artificial intelligence, supervised learning might not be a term you have previously come across. In short, it's a machine learning strategy that enables AI systems to learn and progress.

Supervised learning is a sub-category of machine learning that uses labeled datasets to train algorithms. It's a machine learning approach in which the program is given labeled input data along with the expected output results.

Simply put, supervised learning algorithms are designed to learn by example. Such examples are referred to as training data, and each example is a pair of an input object and the desired output value. The pair of input and output data fed into the system is generally referred to as labeled data.

By feeding labeled data, you show a machine the connections between different variables and known outcomes. With supervised learning, the AI system is explicitly told what to look for in the given input data. This enables algorithms to get better periodically and create machine learning models that can predict outcomes or classify data accurately when presented with unfamiliar data.

Generally, three datasets are used in different stages of the model creation process:

Model fitting refers to the measure of how well the model generalizes to similar data to that on which it was trained. A well-fitted model produces accurate results; an overfitted model matches the data too closely; an under fitted model doesn't match the data closely enough.

Training plays a pivotal role in supervised learning. During the training phase, the AI system is fed with vast volumes of labeled training data. As previously mentioned, the training data instructs the system on how the desired output should be like from each distinct input value.

The trained model is then given the test data. This allows data scientists to determine the effectiveness of training and the accuracy of the model. The accuracy of a model is dependent on the size and quality of the training dataset and the algorithm used.

However, high accuracy isn't always a good thing. For example, high accuracy could mean that the model is suffering from overfitting – a modeling error or the incorrect optimization of a model when it gets overly tuned to its training dataset and may even result in false positives.

In such an instance, the model might perform remarkably well in test scenarios but might fail to deliver correct output in real world circumstances. To eradicate the chances of overfitting, ensure that the test data is entirely different from the training data. Also, check that the model doesn't draw answers from its previous experience.

The training examples should also be diverse. Otherwise, when presented with never-before-seen cases, the model will fail to work.

In the context of data science and data mining (the process of turning raw data into useful information), supervised learning can be further broken down into two types: classification and regression.

A classification algorithm tries to determine the category or class of the data it's presented with. Email spam classification, computer vision, and drug classification are some of the common examples of classification problems.

On the other hand, regression algorithms try to predict the output value based on the input features of the data provided. Predicting click rates of digital advertisements and predicting a house's price based on its features are some of the common regression problems.

One of the best ways to understand the difference between supervised and unsupervised learning is by looking at how you would learn to play a board game – let’s say chess.

One option is to hire a chess tutor. A tutor will teach you how to play the game of chess by explaining to you the basic rules, what each piece of chess does, and more. Once you're aware of the game's rules and the scope of each piece, you can go ahead and practice by playing against the tutor.

The tutor would supervise your moves and correct you whenever you make mistakes. Once you've gathered enough knowledge and practice, you can start playing competitively against others.

This learning process is comparable to supervised learning. In supervised learning, a data scientist acts like a tutor and trains the machine by feeding the basic rules and overall strategy.

If you don't want to hire a tutor, you can still learn the game of chess. One way is to watch other people play the game. You probably can't ask them any questions, but you can watch and learn how to play the game.

Despite not knowing the names of each chess piece, you can learn how each piece moves by observing the game. The more games you watch, the better you understand, and the more knowledgeable you become about different strategies you can adopt to win.

This learning process is similar to unsupervised learning. The data scientist lets the machine learn by observing. Although the machine doesn't know the specific names or labels, it will be able to find patterns on its own.

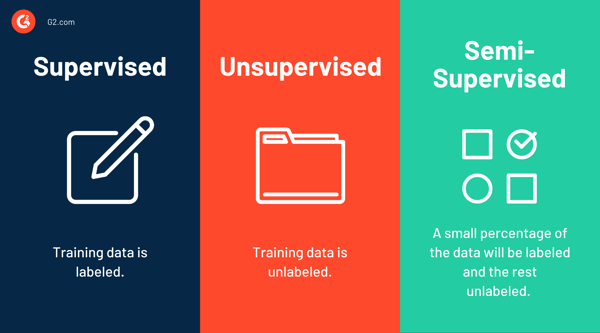

Simply put, unsupervised learning is when an algorithm is given a training dataset that contains only the input data and no corresponding output data.

As you can see, both learning methods have notable strengths and weaknesses.

For supervised learning, you require a knowledgeable tutor who could teach the machine the rules and strategy. In the example of chess, this means you need a tutor to learn the game. If not, you could end up learning the game wrongly.

In the case of unsupervised learning, you require vast volumes of data for the machine to observe and learn. Although unlabeled data is cheap (and abundant) and easy to collect and store, it must be devoid of duplicate or garbage data. Flawed or incomplete data can also result in machine learning bias – a phenomenon in which algorithms produce discriminatory results.

In the example of chess, if you're learning by observing other players, this means you need to watch dozens of games before you understand it. Also, if you're watching players who play the game incorrectly, you could end up doing the same.

Then, there's semi-supervised learning.

As you might have guessed, semi-supervised learning is a mix of supervised and unsupervised learning. In this learning process, a data scientist trains the machine just a little bit so that it gains a high-level overview. The machine then learns the rules and strategy by observing patterns. A small percentage of the training data will be labeled, and the rest will be unlabeled.

In the example of learning chess, semi-supervised learning would be similar to a tutor explaining just the basics to you and letting you learn by playing competitively.

Another learning process is reinforcement learning (RL). It's a machine learning strategy in which an AI system faces a game-like situation. To teach the AI, a programmer uses a reward-penalty technique, in which the system must focus on taking suitable actions to maximize reward and avoid penalties.

Numerous computation techniques and algorithms are used in the supervised learning process.

When choosing a supervised machine learning algorithm, the following factors are generally considered:

Here are some of the common supervised machine learning algorithms you'll come across.

Linear regression is both a statistical algorithm as well as a machine learning algorithm. It's an algorithm that tries to model the relationship between two variables by attaching a linear equation to the observed data. Out of the two variables, one is considered to be an explanatory variable and the other a dependent variable.

Linear regression can also be used to identify the relationship between a dependent variable and one or more independent variables. In the machine learning realm, linear regression is used to make predictions.

Logistic regression is a mathematical model used to estimate an event's probability based on the provided previous data. Credit scoring and online transaction fraud detection are some of the real-world applications of this algorithm. In other words, it's a predictive analysis algorithm based on the concepts of probability used to solve binary classification problems.

Just like logistic regression, linear regression too was borrowed from the field of statistics. However, unlike linear regression that works with continuous dependent variables, logistic regression works with binary data, such as "true" or "false".

Artificial neural networks (ANNs) are primarily used by deep learning algorithms. They're a series of algorithms that mimic the functions of the human brain to recognize relationships between vast volumes of data. As you might have guessed, ANNs are critical for artificial intelligence systems.

Neural networks are made up of layers of multiple nodes. Each node consists of inputs, weights, bias, and outputs. An ANN is trained by adjusting the input weights based on the network's performance. For example, if the neural network classifies an image rightly, weights contributing to the correct answer are increased while other weights are decreased.

Naive Bayes is a classification method based on the principle of class conditional independence of the Bayes Theorem. In simpler terms, the Naive Bayes classifier approach assumes that the presence of a specific feature in a class doesn’t impact the presence of any other feature.

For instance, a fruit may be considered to be an apple if it's red in color, round, and approximately three inches in diameter. Even if these features are dependent on each other, all of these properties individually contribute to the probability that the very fruit is an apple.

The Naive Bayes model is useful when dealing with large datasets. It's easy to build, fast, and is known to perform even better than advanced classification methods.

Support vector machine (SVM) is a well-known supervised machine learning algorithm developed by Vladimir Vapnik. Despite being predominantly used for classification problems, SVMs can be used for regression as well.

SVMs are built on the idea of finding a hyperplane that best divides a given dataset into two classes. Such a hyperplane is referred to as a decision boundary and separates data points into either side. Face detection, text categorization, image classification are some of the many real-world applications of SVM.

The K-nearest neighbors (KNN) algorithm is a supervised machine learning algorithm used to solve regression and classification problems. It's an algorithm that groups data points based on their proximity to and relationship with other data.

It's easy to understand, straightforward to implement, and has a low calculation time. However, the algorithm becomes notably slow as the size of data in use increases. KNN is generally used for image recognition and recommendation systems.

Random forest is a learning method that consists of a large number of decision trees operating as an ensemble (the use of multiple learning algorithms to gain better predictive performance). Each decision tree delivers a class prediction, and the class with the highest votes becomes the model's prediction.

The random forest algorithm is extensively used in the stock market, banking, and medical field. For instance, it can be used to identify customers who are more likely to repay their debt on time.

As previously mentioned, predicting house prices, the click-through rates of online ads, and even a customer’s willingness to pay for a particular product are some of the notable examples of supervised learning models.

Here are some more examples that you might come across in daily life.

By leveraging labeled data, supervised learning algorithms can create models that can classify big data with ease and even make predictions on future outcomes. It's a brilliant learning technique that introduces machines to the human world.

Speaking of learning techniques to make machines intelligent, have you ever wondered what artificial intelligence systems we have today are truly capable of? If so, feed your curiosity by reading more about narrow AI.

Amal is a Research Analyst at G2 researching the cybersecurity, blockchain, and machine learning space. He's fascinated by the human mind and hopes to decipher it in its entirety one day. In his free time, you can find him reading books, obsessing over sci-fi movies, or fighting the urge to have a slice of pizza.

With data becoming cheaper to collect and store, data scientists are often left overwhelmed by...

by Holly Landis

by Holly Landis

If you used Google, Spotify, or Uber in the past week, you’ve engaged with products that...

by Devin Pickell

by Devin Pickell

Deep learning is an intelligent machine's way of learning things.

by Amal Joby

by Amal Joby

With data becoming cheaper to collect and store, data scientists are often left overwhelmed by...

by Holly Landis

by Holly Landis

If you used Google, Spotify, or Uber in the past week, you’ve engaged with products that...

by Devin Pickell

by Devin Pickell