June 12, 2023

by Samudyata Bhat / June 12, 2023


Kubernetes has experienced tremendous growth in its adoption since 2014. Inspired by Google's internal cluster management solution, Borg, Kubernetes simplifies deploying and administering your applications. Like other container orchestration software, Kubernetes is becoming popular among IT professionals because it’s secure and straightforward. However, as with every tool, understanding how its architecture works helps you use it more effectively.

Let's learn about the foundations of Kubernetes architecture, starting with what it is, what it does, and why it’s significant.

Kubernetes is an open-source platform for deploying and managing containers. It provides service discovery, load balancing, self-healing mechanisms, container orchestration, and container-focused infrastructure orchestration, and it runs containers through a pluggable container runtime.

Google created the adaptable Kubernetes container management system, which handles containerized applications across many environments. It helps automate deploying containerized applications, rolling out changes, and scaling those applications up and down.

Kubernetes isn't only a container orchestrator, though. In the same way that desktop apps run on macOS, Windows, or Linux, cloud-native applications run on Kubernetes: it acts as their operating system because it serves as the cloud platform for those programs.

Containers are a standard approach for packaging applications and their dependencies so that the applications can be executed across runtime environments easily. Using containers, you can take essential measures toward reducing deployment time and increasing application dependability by packaging an app's code, dependencies and configurations into a single, easy-to-use building block.

The number of containers in corporate applications can become unmanageable. To get the most out of your containers, Kubernetes helps you orchestrate them.

Kubernetes is an incredibly adaptable and expandable platform for running container workloads. The Kubernetes platform not only provides the environment to create cloud-native applications, but it also helps manage and automate their deployments.

It aims to relieve application operators and developers of the effort of coordinating underlying compute, network, and storage infrastructure, allowing them to focus solely on container-centric processes for self-service operation. Developers can also create specialized deployment and management procedures, along with higher levels of automation for applications made up of several containers.

Kubernetes can handle all significant backend workloads, including monolithic applications, stateless or stateful programs, microservices, services, batch jobs, and everything in between.

Kubernetes is often chosen for the following benefits.

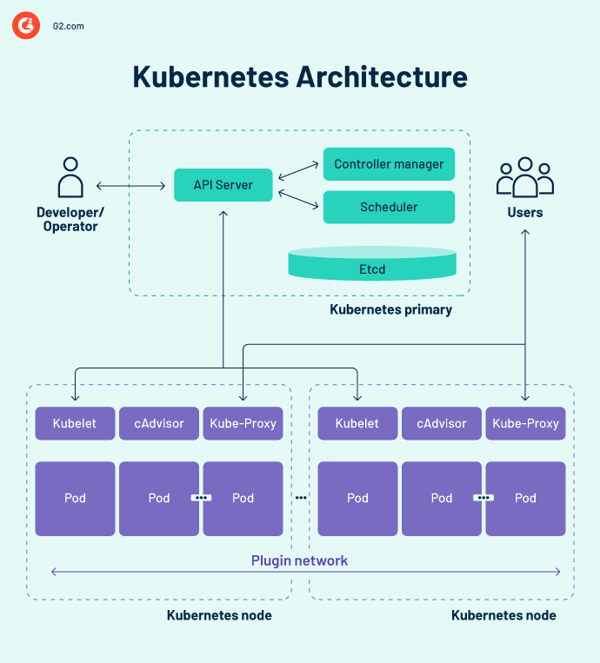

The basic Kubernetes architecture comprises many components, also known as K8s components, so before we jump right in, it is important to remember the following concepts.

Kubernetes architecture diagram

The control plane is the nerve center of the Kubernetes cluster design, housing the cluster's control components. It also records the configuration and status of all Kubernetes objects in the cluster.

The Kubernetes control plane maintains regular communication with the compute nodes to ensure the cluster operates as expected. Controllers oversee object states and, in response to cluster changes, drive each object's observed (current) state toward its desired state, or specification.

The control plane is made up of several essential elements, including the application programming interface (API) server, the scheduler, the controller manager, and etcd. These fundamental Kubernetes components guarantee that containers are running with appropriate resources. These components can all function on a single primary node, but many companies duplicate them over numerous nodes for high availability.

The Kubernetes API server is the front end of the Kubernetes control plane. It exposes the API through which updates, scaling, configuration changes, and other types of lifecycle orchestration are carried out. Because the API server is the gateway to the cluster, users must be able to reach it from outside the cluster. In that role, it is the entry point to pods, services, and nodes, and users authenticate through it.

The kube-scheduler tracks resource utilization for each compute node and decides whether and where new pods should be deployed. It weighs the cluster's overall health against the pod's resource demands, such as central processing unit (CPU) or memory. Then it chooses an appropriate compute node and schedules the pod, considering resource requests and limits, data locality, quality-of-service requirements, and affinity or anti-affinity rules.
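The filter-then-score idea behind this placement decision can be sketched in a few lines of Python. This is a simplified illustration, not the kube-scheduler's actual code: the `Node` and `PodRequest` types and the scoring rule (prefer the node left with the most free capacity) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter step: the node must have room for the pod's requests."""
    return (node.free_cpu_millicores >= pod.cpu_millicores
            and node.free_memory_mib >= pod.memory_mib)


def _fraction_left(free: int, requested: int) -> float:
    """Share of the free resource that would remain after placement."""
    return (free - requested) / free if free > 0 else 0.0


def score(node: Node, pod: PodRequest) -> float:
    """Score step (hypothetical rule): higher means more headroom remains."""
    cpu = _fraction_left(node.free_cpu_millicores, pod.cpu_millicores)
    mem = _fraction_left(node.free_memory_mib, pod.memory_mib)
    return (cpu + mem) / 2


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name, or None if no node can host the pod."""
    feasible = [n for n in nodes if fits(n, pod)]
    if not feasible:
        return None  # the pod would stay pending
    return max(feasible, key=lambda n: score(n, pod)).name
```

Calling `schedule(PodRequest("web", 1000, 512), nodes)` returns the name of the best-scoring feasible node. Affinity, data locality, and quality-of-service rules would be modeled as further filter or score functions.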

In a Kubernetes environment, multiple controllers govern the states of endpoints (pods and services), tokens and service accounts (namespaces), nodes, and replication (autoscaling). The kube-controller-manager, often called just the controller manager, is a daemon that manages the Kubernetes cluster by running these controllers in a single process. It is a separate component from the cloud-controller-manager, which runs the controllers that are specific to a cloud provider.

The controller monitors the objects in the cluster while running the Kubernetes core control loops. It monitors them for their desired and existing states via the API server. If the current and intended states of managed objects don’t match, the controller takes corrective action to move the object status closer to the desired state. The Kubernetes controller also handles essential lifecycle tasks.
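A single pass of such a control loop can be sketched as follows. This is a minimal illustration that assumes the only managed property is a replica count; the `reconcile` function, the action strings, and the pod naming scheme are hypothetical and not part of any Kubernetes API.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str], app: str) -> List[str]:
    """Compare desired and observed state and return corrective actions."""
    actions: List[str] = []
    observed = len(running_pods)

    if observed < desired_replicas:
        # Too few pods: create the missing ones.
        for index in range(observed, desired_replicas):
            actions.append(f"create {app}-{index}")
    elif observed > desired_replicas:
        # Too many pods: delete the surplus.
        for pod in running_pods[desired_replicas:]:
            actions.append(f"delete {pod}")

    # An empty list means observed state already matches desired state.
    return actions


print(reconcile(3, ["web-0"], "web"))
```

A real controller repeats this comparison continuously, reading both states through the API server rather than receiving them as arguments.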

etcd is a distributed, fault-tolerant key-value store that keeps configuration data and cluster status information. Although etcd may be set up independently, it often serves as a part of the Kubernetes control plane.

etcd uses the Raft consensus algorithm to keep the cluster state consistent. Raft addresses a common problem in replicated state machines: getting many servers to agree on values. It defines three roles (leader, candidate, and follower) and reaches consensus by electing a leader through voting.
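The voting rule comes down to majority arithmetic: a candidate becomes leader only when more than half of the cluster members vote for it. The short sketch below shows that arithmetic only. The function names are made up for illustration, and it does not model terms, logs, or timeouts.

```python
def quorum(cluster_size: int) -> int:
    """Smallest number of members that forms a majority."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    return cluster_size // 2 + 1


def wins_election(votes_received: int, cluster_size: int) -> bool:
    """A candidate becomes leader once it holds a majority of votes."""
    return votes_received >= quorum(cluster_size)


def tolerated_failures(cluster_size: int) -> int:
    """Members that can fail while a majority is still reachable."""
    return cluster_size - quorum(cluster_size)


for size in (3, 4, 5):
    print(size, quorum(size), tolerated_failures(size))
```

A four-member cluster tolerates no more failures than a three-member one, which is why etcd clusters are usually run with an odd number of members.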

As a result, etcd is the single source of truth (SSOT) for all Kubernetes cluster components, responding to control plane queries and collecting different information about the state of containers, nodes, and pods. etcd is also used to store configuration information like ConfigMaps, subnets, secrets, and cluster state data.

Worker nodes are systems that run the containers the control plane manages. The kubelet, the primary node agent, runs on each node and interacts with the control plane. In addition, each node runs a container runtime engine, such as containerd or CRI-O. Other components for monitoring, logging, service discovery, and optional extras also run on the node.

Some key Kubernetes cluster architecture components are as follows.

A Kubernetes cluster must have at least one computing node, but it can have many more depending on capacity requirements. Because pods are coordinated and scheduled to execute on nodes, additional nodes are required to increase cluster capacity. Nodes do the work of a Kubernetes cluster. They link applications as well as networking, computation, and storage resources.

Nodes in data centers may be cloud-native virtual machines (VMs) or bare metal servers.

Each compute node uses a container runtime engine to operate and manage container life cycles. Kubernetes supports Open Container Initiative (OCI)-compliant runtimes such as containerd and CRI-O through its Container Runtime Interface (CRI). Docker Engine can still be used through the cri-dockerd adapter, while rkt is no longer maintained.

A kubelet is included on each compute node. It’s an agent that communicates with the control plane to guarantee that the containers in a pod are running. When the control plane requires a specific action on a node, the kubelet gets the pod specifications via the API server and acts on them. It then makes sure that the related containers are in good working order.

Each compute node has a network proxy known as a kube-proxy, which aids Kubernetes networking services. To manage network connections inside and outside the cluster, the kube-proxy either forwards traffic or depends on the operating system's packet filtering layer.

The kube-proxy process operates on each node to ensure services are available to other parties and to cope with specific host subnetting. It acts as a network proxy and service load balancer on its node, handling network routing for user datagram protocol (UDP) and transmission control protocol (TCP) traffic. The kube-proxy, in reality, routes traffic for all service endpoints.
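The load-balancing role can be illustrated with a small in-memory model. This is a sketch under stated assumptions: the `ServiceProxy` class is hypothetical, the addresses are made up, and round-robin is only one possible selection strategy, since the real kube-proxy's behavior depends on the proxy mode it runs in.

```python
import itertools
from typing import Dict, List


class ServiceProxy:
    """Maps a service name to its pod endpoints and rotates through them."""

    def __init__(self, endpoints: Dict[str, List[str]]):
        # One endless iterator per service that has at least one endpoint.
        self._cycles = {
            service: itertools.cycle(addresses)
            for service, addresses in endpoints.items()
            if addresses
        }

    def route(self, service: str) -> str:
        """Return the endpoint that should receive the next connection."""
        cycle = self._cycles.get(service)
        if cycle is None:
            raise LookupError(f"no endpoints for service {service!r}")
        return next(cycle)


proxy = ServiceProxy({"web": ["10.0.0.1:8080", "10.0.0.2:8080"]})
print([proxy.route("web") for _ in range(3)])
```

Clients address the stable service name while the set of pods behind it can change, which is what makes the service available even though individual pods come and go.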

So far, we've covered internal and infrastructure-related ideas. Pods, however, are crucial to Kubernetes since they’re the primary outward-facing component developers interact with.

A pod is the simplest unit in the Kubernetes container model, representing a single instance of an application. Each pod comprises one container or several tightly coupled containers that logically belong together, along with the rules that govern how those containers run.
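To make the structure concrete, the sketch below builds a minimal pod manifest as a Python dictionary and prints it as JSON. The `apiVersion`, `kind`, `metadata`, and `spec.containers` fields follow the Kubernetes Pod schema. The `pod_manifest` helper, the pod name, and the container names and images are illustrative assumptions.

```python
import json
from typing import Dict


def pod_manifest(name: str, containers: Dict[str, str]) -> dict:
    """Build a minimal Pod manifest from a {container name: image} mapping."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {"name": container, "image": image}
                for container, image in containers.items()
            ]
        },
    }


# Hypothetical example: an app container plus a tightly coupled helper.
manifest = pod_manifest("web", {"app": "nginx:1.25", "log-shipper": "busybox:1.36"})
print(json.dumps(manifest, indent=2))
```

Manifests are more commonly written in YAML, but the API server accepts the same structure as JSON.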

Pods have a finite lifespan and are terminated when they are upgraded or scaled down. Although ephemeral, they can run stateful applications by connecting to persistent storage.

Pods may also scale horizontally, which means the number of running instances can be increased or decreased. They also support rolling updates and canary deployments.
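As a worked example of horizontal scaling, the Kubernetes Horizontal Pod Autoscaler documentation gives the core rule as desired replicas = ceil(current replicas × current metric / target metric). The sketch below implements only that formula. The function name is an assumption, and it deliberately ignores the tolerance band, stabilization windows, and replica limits that the real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float) -> int:
    """Scale the replica count in proportion to metric load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


# Load is double the target, so the replica count doubles.
print(desired_replicas(2, current_metric=200, target_metric=100))
# Load is half the target, so the replica count halves.
print(desired_replicas(4, current_metric=50, target_metric=100))
```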

The containers in a pod always run together on the same node, so they share context and storage and can communicate with one another through localhost. A pod never spans several machines, but a single node can run several pods, each containing one or more containers.

The pod is the central management unit in the Kubernetes ecosystem, serving as a logical border for containers that share resources and context. The pod grouping method, which lets several dependent processes operate concurrently, mitigates the differences between virtualization and containerization.

Several sorts of pods play a vital role in the Kubernetes container model.

Kubernetes maintains an application's containers but may also manage the associated application data in a cluster. Users of Kubernetes can request storage resources without understanding the underlying storage infrastructure.

A Kubernetes volume is a directory where a pod can access and store data. The volume type determines the volume's contents, how it came to be, and the media that supports it. Persistent volumes (PVs) are cluster-specific storage resources often provided by an administrator. PVs can also outlive a given pod.

Kubernetes depends on container images, which are stored in a container registry. It might be a third-party registry or one that the organization creates.

Namespaces are virtual clusters that exist within a physical cluster. They’re designed to create independent work environments for numerous users and teams. They also keep teams from interfering with one another by restricting the Kubernetes objects they can access. Separately, the containers within a pod can communicate with one another through localhost because they share an IP address and a network namespace.
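The isolation that Kubernetes namespaces provide can be pictured as scoping object names: the same name may exist in two namespaces without conflict, and a listing only returns objects from the namespace that was asked for. The toy `Cluster` class below is a hypothetical in-memory model of that idea, not a Kubernetes API, and it leaves out access control entirely.

```python
from typing import List


class Cluster:
    """Toy object store keyed by (namespace, kind, name)."""

    def __init__(self):
        self._objects = {}

    def create(self, namespace: str, kind: str, name: str, spec: dict) -> None:
        """Store an object; names must be unique per namespace and kind."""
        key = (namespace, kind, name)
        if key in self._objects:
            raise ValueError(f"{kind} {name!r} already exists in {namespace!r}")
        self._objects[key] = spec

    def names(self, namespace: str, kind: str) -> List[str]:
        """List object names visible within a single namespace."""
        return sorted(
            name
            for (ns, obj_kind, name) in self._objects
            if ns == namespace and obj_kind == kind
        )


cluster = Cluster()
cluster.create("team-a", "Pod", "web", {})
cluster.create("team-b", "Pod", "web", {})  # same name, different namespace
print(cluster.names("team-a", "Pod"))
```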

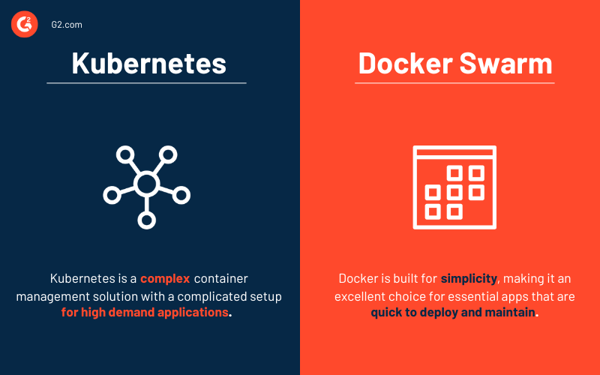

Both Kubernetes and Docker Swarm are platforms that provide container management and application scaling. Kubernetes provides an effective container management solution ideal for high-demand applications with a complicated setup. In contrast, Docker Swarm is built for simplicity, making it an excellent choice for basic apps that are quick to deploy and maintain.

The demands of your company determine the right tool.

Container orchestration systems enable developers to launch several containers for application deployment. IT managers can use these platforms to automate administering instances, sourcing hosts, and connecting containers.

The following are some of the best container orchestration tools that facilitate deployment, identify failed container implementations, and manage application configurations.

*The five leading container orchestration solutions from G2’s Spring 2023 Grid® Report.

Implementing a platform strategy that considers security, governance, monitoring, storage, networking, container lifecycle management, and orchestration is critical. However, Kubernetes is widely regarded as challenging to adopt and scale, especially for businesses that manage both on-premises and public cloud infrastructure. To simplify adoption, below are some best practices to consider while architecting Kubernetes clusters.

Tip: Explore container management solutions for better deployment practices.

Kubernetes, the container-centric management software, has become the de facto standard for deploying and operating containerized applications due to the broad usage of containers within businesses. Kubernetes architecture is simple and intuitive. While it gives IT managers greater control over their infrastructure and application performance, there is much to learn to make the most of the technology.


Samudyata Bhat is a former Content Marketing Specialist at G2. With a Master's degree in digital marketing, she specializes her content around SaaS, hybrid cloud, network management, and IT infrastructure. She aspires to connect with present-day trends through data-driven analysis and experimentation and create effective and meaningful content. In her spare time, she can be found exploring unique cafes and trying different types of coffee.
