With an alarming surge in security breaches and crimes, industries need to pivot from traditional security practices to facial recognition. But how exactly does facial recognition have an edge over traditional security?

Facial recognition has been around for a while. It is a subset of artificial intelligence that simulates human vision within computers and enables them to verify human faces. Industries across retail, automotive, finance, cybersecurity, and real estate are adopting facial recognition to authenticate individuals and prevent suspicious infiltration.

By integrating existing data security infrastructure with image recognition software, users can easily break down the features of faces within images and videos and make their premises safer.

Let's get into the details of the science behind facial recognition and real-world applications across the market today.

Facial recognition is a computer vision technique that analyzes facial features and compares them with a training dataset to confirm the authenticity and identity of a specific individual. It is used in surveillance cameras, biometric authentication, and police verification processes to confirm a person's identity with increased accuracy.

Facial recognition is used for a variety of purposes, like tracking down culprits, confirming event attendees, and verifying identities within video footage.

Since facial recognition systems work on sensitive images and videos, they must be secure and accurate in output.

Let’s say you’re going to work. When you went through onboarding, you had your picture taken and stored in your company’s image database. In addition to an employee badge, your company uses facial recognition to increase security.

Facial recognition interprets and measures your facial features and follows machine learning (ML) and deep learning (DL) techniques to make a computer understand how a face is built. Once the computer at your job registers your face vectors, it searches for your match in the photo database.
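The database lookup described above can be sketched as a nearest-vector search. This is a minimal illustration, not how any particular product works: the four-number face vectors, the employee names, and the `min_similarity` cutoff are all assumptions made for the example, and real systems use much longer vectors produced by a trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_best_match(probe, database, min_similarity=0.9):
    """Return (name, score) for the closest enrolled vector.

    The name is None when even the best score is below the cutoff.
    """
    best_name, best_score = None, -1.0
    for name, vector in database.items():
        score = cosine_similarity(probe, vector)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < min_similarity:
        return None, best_score
    return best_name, best_score

# Hypothetical enrolled face vectors (assumed values, for illustration only).
employee_db = {
    "alice": [0.9, 0.1, 0.3, 0.4],
    "bob": [0.1, 0.8, 0.5, 0.2],
}

probe = [0.88, 0.12, 0.31, 0.38]      # vector from the camera at the door
stranger = [0.1, 0.1, 0.9, 0.1]       # someone who was never enrolled

print(find_best_match(probe, employee_db))
print(find_best_match(stranger, employee_db))
```

The cutoff matters as much as the search itself: without it, the system would always return its closest employee, even for a face it has never seen.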

Facial recognition technology (FRT) can be roughly divided into three categories.

On the first leg of the journey, a computer must single out a particular face from multiple faces in an image. Once that is done, other characteristics like texture, angle, pose, illumination, vectors, background, pixels, and gradients are scanned and compared with the underlying dataset.

Detection algorithms like Viola-Jones, the histogram of oriented gradients (HOG), region-based convolutional neural networks (R-CNN), and you only look once (YOLO) can detect faces in front and side-facing profiles. The first two are classical machine learning methods, while R-CNN and YOLO rely on deep learning.
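To give a feel for one of these detectors, Viola-Jones relies on simple rectangular "Haar-like" features computed quickly from an integral image (a running sum of pixel values). The sketch below shows only that building block, not a full detector; the function names and the tiny 4x4 image are assumptions made for the example.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column on the top/left."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Haar-like feature: sum of the upper half minus sum of the lower half."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return upper - lower

# Toy grayscale patch: a dark band (like an eye region) above a bright band.
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(image)
print(rect_sum(ii, 0, 0, 4, 4))          # total brightness of the patch
print(two_rect_feature(ii, 0, 0, 4, 4))  # strongly negative: dark over bright
```

Because every rectangle sum costs the same four lookups regardless of its size, a detector can evaluate thousands of such features per window cheaply.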

Source: IT Chronicles

Fun fact! Different sensors, like red, green, and blue light-emitting diodes (RGB LEDs), depth sensors, electroencephalography (EEG) sensors, thermal sensors, and wearable inertial sensors, are deployed as hardware to sense and extract face data. They can work on both static and dynamic images and videos.

Once your system finds a match, it categorizes the face within the image or video. This can be done in many ways, but the most recent method is converting analog facial data into digital signals. The computer accepts these digital signals and matches them to stored templates. This process is also known as template matching.
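One common way to picture template matching is a stored patch of pixel values being slid across the digitized image until the best-fitting position is found. The sketch below assumes a sum-of-squared-differences score and tiny hand-made grids; production systems typically use more robust similarity measures.

```python
def ssd(patch, template):
    """Sum of squared differences between two equally sized 2D grids."""
    return sum(
        (p - t) ** 2
        for patch_row, template_row in zip(patch, template)
        for p, t in zip(patch_row, template_row)
    )

def match_template(image, template):
    """Return ((row, col), score) of the window with the lowest SSD."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best_pos, best_score = None, float("inf")
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ssd(patch, template)
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score

# Assumed stored template and digitized input, for illustration only.
template = [
    [9, 1],
    [1, 9],
]
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 1, 0],
    [0, 0, 1, 9, 0],
    [0, 0, 0, 0, 0],
]
position, score = match_template(image, template)
print(position, score)
```

A score of zero means a pixel-perfect match; in practice a system would accept any score under a tolerance it has chosen.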

Once a match is found, the system triggers an alert of “identification successful”.

Source: Alamy

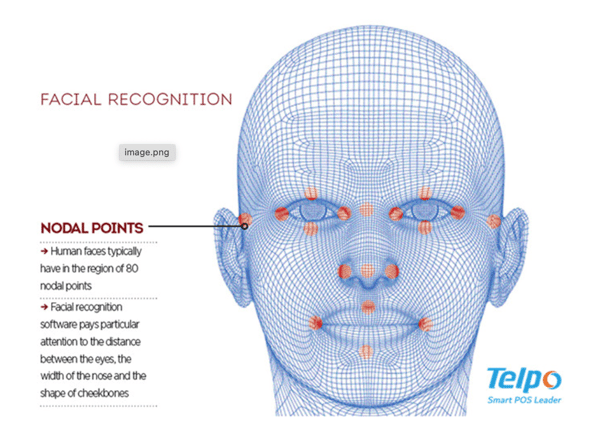

To finish facial detection, the system must be sure that other options are ruled out. The computer vision system computes “nodal points” on the face. It measures the depth of eye sockets, length of lips, cheekbones, and so on. The distribution of relative nodal distances creates a rough blueprint or faceprint. It is matched with the model database, which contains millions of faceprints.
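The idea of a faceprint built from relative nodal distances can be sketched as follows. The four landmark names and their pixel coordinates are assumptions made for the example, as is the choice to scale every distance by the eye-to-eye distance so the result does not depend on how close the face is to the camera.

```python
import math
from itertools import combinations

def faceprint(landmarks):
    """Pairwise landmark distances, scaled by the eye-to-eye distance."""
    eye_dist = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    names = sorted(landmarks)  # fixed order so faceprints are comparable
    return [
        math.dist(landmarks[a], landmarks[b]) / eye_dist
        for a, b in combinations(names, 2)
    ]

def faceprint_distance(fp_a, fp_b):
    """Euclidean distance between two faceprints of the same length."""
    return math.dist(fp_a, fp_b)

# Hypothetical nodal points as (x, y) pixel coordinates.
face = {
    "left_eye": (30, 40),
    "right_eye": (70, 40),
    "nose_tip": (50, 60),
    "mouth": (50, 80),
}
# The same face photographed from closer up: every coordinate doubles.
closer = {name: (2 * x, 2 * y) for name, (x, y) in face.items()}

print(len(faceprint(face)))
print(faceprint_distance(faceprint(face), faceprint(closer)))
```

Four landmarks give six pairwise distances; with the roughly 80 nodal points mentioned later in this article, the same approach would yield thousands of measurements per face.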

Source: Telpo

The system must be sure that an image contains a face. It assigns a threshold value at the beginning of the process. An assigned confidence score of 99% means the algorithm is almost sure the image has a face. Matches with lower confidence scores are compared with the next closest category.
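As a rough sketch of the thresholding step, the snippet below accepts a detection only when its confidence meets the threshold and sets the rest aside for further comparison. The detection records, box coordinates, and scores are hypothetical values, not output from a real detector.

```python
def split_by_confidence(detections, threshold=0.99):
    """Separate detections that meet the threshold from those that do not."""
    accepted, review = [], []
    for det in detections:
        if det["confidence"] >= threshold:
            accepted.append(det)
        else:
            review.append(det)
    return accepted, review

# Hypothetical detector output: box is (x, y, width, height).
detections = [
    {"box": (40, 30, 64, 64), "confidence": 0.995},
    {"box": (200, 80, 60, 60), "confidence": 0.72},
]
accepted, review = split_by_confidence(detections)
print(len(accepted), len(review))
```

Raising the threshold reduces false alarms at the cost of missing some real faces, so the value is usually tuned to the use case.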

Once the presence of a face is noted, the system can go on to classify, verify, and grant user access. While performing the facial hypothesis, the system analyzes the following features:

In facial recognition, nodal points are specific coordinates marked on a face. One point highlights the width of the nose; another can highlight the widow’s peak of the hairline, and so on. 80 nodal points are analyzed in this way.

With so many processes involved, you might think you’ve got your work cut out for you with facial recognition. But not when you have dedicated software at your fingertips.

The demand for FRT increases as enterprises continue to prioritize security. Replacing traditional facility maintenance with facial recognition ensures the safety of employees and guests. It’s time for software buyers to analyze how they could use an FRT solution.

Newer, trendier technologies are bound to impact people. But not all of them have been as successful as facial recognition. Why is that? The answer lies in the underlying algorithms, which have become remarkably reliable.

Tip: Google Photos facial recognition categorizes your phone gallery based on individual (including pets!) faces in the photos. It may not be entirely accurate, but it simplifies your life by making it quicker to find your loved ones on your endless camera roll.

Facial recognition is already making revelations in the social media space. It has been a hub of discussion for science and technology experts for decades, and a few inventions have gone live in recent years.

DeepFace, a facial recognition system created by Facebook in 2014, identifies human faces in pictures uploaded to the social media platform with up to 97% accuracy. Each time a Facebook user is tagged in a photograph, DeepFace maps information about their facial characteristics. When enough data is collected, the software is able to tag them in a new photograph.

In June 2015, Google released FaceNet. Evaluated on the Labeled Faces in the Wild (LFW) dataset, FaceNet set a new accuracy record of 99.63%. It relies on an artificial neural network and is incorporated into Google Photos to tag pictures automatically when a person is recognized.

In the summer of 2019, one app swept the nation and went viral: FaceApp. From celebrities to NBA players to even my family over Sunday dinner, everyone was using the app to upload a selfie and see how they would look in the future with the “old age” filter.

Using artificial intelligence and deep learning technology, FaceApp can do more than age a photo. It can add lipstick and eyeshadow, change the hair color, or add a beard or a mustache.

In 2017, Apple launched Face ID on the iPhone X, which lets users unlock their phones with a faceprint mapped by the phone’s front-facing camera. This software was designed with a 3D modeling technique. It’s resistant to being spoofed by photos and works even if users wear masks because it captures and compares over 30,000 variables. Face ID still works in poor lighting or weather conditions.

Face ID can also be used to make purchases with payment gateways like Apple Pay, the iTunes Store, App Store, and the iBooks Store.

In June 2021, a student from Washington, DC, built “Faces of the Riot,” an open-source facial recognition app that features over 6000 images of faces from 827 videos. The Federal Bureau of Investigation (FBI) currently uses it for the facial verification of protestors, rioters, and journalists.

Amazon Rekognition, a prominent face biometrics software tool, was one of the first ventures by Amazon in the facial recognition domain. It could easily add image data and video analysis to business applications. However, in June 2020, Amazon announced “a year’s halt” of its services due to the initiation of US federal policies.

According to US federal policies, collecting and storing facial data from remote states is too easy and cheap. The databases are collected in government systems and maintained by a single vendor. Even if one of the systems got corrupted, the entire database would be at the hacker’s mercy.

You can unlock your iPhone, iPad, and Android devices using the face recognition feature. On iOS, go to Settings > Face ID & Passcode > Set Up Face ID. Hold your device in portrait mode and bring it in line with your face. The system will scan your face twice and set your Face ID. For Android devices, the steps may vary. A common path is Settings > Lock and Security > Biometrics > Unlock by Face.

British Airways has enabled facial recognition services for US-bound passengers. The process expedites customs and boarding by scanning a traveler's face and boarding pass at the same time. It also lets passengers skip check-in queues and security check-ins and go directly to the departure gates.

Cigna is a US-headquartered insurance company that allows customers in China to apply for health insurance with facial know your customer (KYC). They scan and verify the applicant’s face pattern as a mandatory registration step. Evaluating applicants with facial details reduces the instances of a fluke.

Coca-Cola uses facial recognition for gamification. In China and Australia, vending machines dispense Coca-Cola cans after they scan and match a customer’s face. This venture has brought the company profitable cross-border deals. It has also strengthened customer loyalty and the brand's credibility, as customers come to feel valued by Coca-Cola.

Sephora is marketing its products with augmented reality. By enabling virtual face recognition technology, consumers can try makeup products virtually. Elevating a user’s experience and perception of the product results in increased sales. By detecting facial features, Sephora automates its makeup and beauty recommendations and informs customers which product would be the best fit for their complexion.

Snapchat is one of the first movers in facial recognition. It allows brands and enterprises to catalog and pre-launch their products using Snapchat filters. Those celebrity, puppy, and crown filters you see on social media are created using Snapchat. Snapchat allows users to only interact and communicate with each other once they certify their facial identity and confirm their authenticity on the platform.

If you saw the world around you in rectangular boxes, it would be difficult for you to interact with anyone. But that’s how computers relate to us.

Beyond interaction, facial recognition can tell an authorized user from a trespasser, which keeps your devices safe. Let’s look at a few more ways facial recognition helps us out.

There are several big question marks all over facial recognition, given the amount of bias the algorithms produce. Listed below are some challenges impeding its worldwide adoption.

Early facial recognition algorithms detected faces only in a straight-on configuration. If the face was slightly tilted or turned sideways, the algorithm failed. Now, algorithms can detect faces and objects as they move past the camera. The field has moved from manual computation of facial points to automatic matching with near-perfect accuracy.

Some of the most widely deployed facial recognition algorithms are:

Principal component analysis (PCA) is a data mining approach that can classify faces even with small input datasets. It compares your face against an eigenface model, a set of orthogonal basis images computed from training faces. The input face is projected onto these eigenfaces, and the difference between the input and its projection is calculated. The result affirms the presence of a face.
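The project-and-compare step of the eigenface approach can be sketched with a single principal component. This is a simplified illustration under stated assumptions: the "faces" are made-up four-pixel vectors, only the leading component is kept, and it is found by power iteration rather than a full eigendecomposition.

```python
import math

def mean_vector(vectors):
    """Average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def top_component(centered, iterations=200):
    """Leading principal direction, found by power iteration."""
    dim = len(centered[0])
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iterations):
        # Apply X^T X to v without building the covariance matrix.
        weights = [sum(x * vi for x, vi in zip(row, v)) for row in centered]
        w = [sum(wt * row[j] for wt, row in zip(weights, centered))
             for j in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def reconstruction_error(face, mean, component):
    """Distance between a face and its projection onto the component."""
    centered = [f - m for f, m in zip(face, mean)]
    coeff = sum(c * e for c, e in zip(centered, component))
    residual = [c - coeff * e for c, e in zip(centered, component)]
    return math.sqrt(sum(r * r for r in residual))

# Assumed training "faces": tiny 4-pixel images that vary in one direction.
training = [[10, 10, 0, 0], [20, 20, 0, 0], [30, 30, 0, 0], [40, 40, 0, 0]]
mean = mean_vector(training)
centered = [[x - m for x, m in zip(row, mean)] for row in training]
eigenface = top_component(centered)

face_like = [22, 22, 0, 0]      # follows the same pattern as the training set
not_a_face = [25, 25, 30, 30]   # has structure the eigenface cannot explain

print(reconstruction_error(face_like, mean, eigenface))
print(reconstruction_error(not_a_face, mean, eigenface))
```

A small error means the input is well explained by the learned face model; a large error suggests the input is not a face, or at least not one resembling the training data.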

With this algorithm, all elements of an image are calculated and compared against the Face Recognition Technology (FERET) database under two different architectures. The image is treated as a linear mixture of random variables and pixels. A unique, factorial face code is also produced. A classifier combines these two different methods to give the best facial detection.

This algorithm projects training images into a subspace. These projections are known as “Fisherfaces,” and the space is known as “Fisher space.” Once the projections are made, the algorithm uses the k-nearest neighbor algorithm to identify and match a face with the input.
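The final k-nearest neighbor step can be sketched on its own, assuming the faces have already been projected into a low-dimensional space. The two-dimensional coordinates and the names below are invented for the example.

```python
import math
from collections import Counter

def knn_identify(query, labeled_points, k=3):
    """Label the query by majority vote among its k nearest projections."""
    neighbors = sorted(
        labeled_points, key=lambda item: math.dist(query, item[0])
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical projected training faces: (coordinates, person).
projections = [
    ((1.0, 1.1), "alice"),
    ((0.9, 1.0), "alice"),
    ((1.2, 0.8), "alice"),
    ((4.0, 4.2), "bob"),
    ((3.8, 4.1), "bob"),
    ((4.1, 3.9), "bob"),
]

print(knn_identify((1.1, 1.0), projections))
print(knn_identify((3.9, 4.0), projections))
```

Voting among several neighbors makes the result less sensitive to a single noisy training image than simply taking the closest one.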

A slight improvement over eigenface, this algorithm uncovers details about a face beyond orthogonal features. In this algorithm, faces are downsampled as statistical points and mapped on a graph. The graph has 40 nodal points positioned at fiducial points.

The nodes are called “jets,” and each contains almost 40 Gabor wavelet coefficients. The Gabor wavelet coefficients extract a face's edges, textures, frequency, or location. This data makes it possible for the algorithm to recognize faces much faster. This algorithm handles large image galleries and variations in pose.
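To make the idea of a jet more concrete, the sketch below computes 40 Gabor filter responses at one image location, assuming 5 wavelengths times 8 orientations. It is a simplification: only the real (cosine) part of each wavelet is used, and the kernel size, wavelengths, and the striped test image are assumptions chosen for the example.

```python
import math

def gabor_kernel(size, wavelength, theta, sigma):
    """Real part of a Gabor wavelet: a cosine wave under a Gaussian envelope."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Coordinate along the direction in which the wave oscillates.
            xr = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2 * sigma * sigma))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength))
        kernel.append(row)
    return kernel

def response_at(image, kernel, cy, cx):
    """Filter response at one pixel (pixels outside the image are skipped)."""
    half = len(kernel) // 2
    total = 0.0
    for ky, row in enumerate(kernel):
        for kx, k in enumerate(row):
            y, x = cy + ky - half, cx + kx - half
            if 0 <= y < len(image) and 0 <= x < len(image[0]):
                total += k * image[y][x]
    return total

def jet(image, cy, cx, wavelengths=(4, 6, 8, 12, 16), orientations=8, size=9):
    """Collect one response per (wavelength, orientation) pair."""
    coeffs = []
    for wl in wavelengths:
        for i in range(orientations):
            theta = i * math.pi / orientations
            kernel = gabor_kernel(size, wl, theta, sigma=wl / 2)
            coeffs.append(response_at(image, kernel, cy, cx))
    return coeffs

# Synthetic 17x17 test image: vertical stripes that repeat every 4 pixels.
stripes = [[math.cos(2 * math.pi * (x - 8) / 4) for x in range(17)]
           for _ in range(17)]
face_jet = jet(stripes, 8, 8)
print(len(face_jet))  # 40 coefficients
```

On this image, the filter whose wave runs across the stripes responds far more strongly than the one rotated by 90 degrees, which is the orientation sensitivity that lets jets describe edges and textures.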

Fisherfaces help you distinguish a face based on the brightness of light falling on it. Like eigenface, it maps features of an input face with a pre-existing face model. Because it considers light, the classification process becomes better and faster. It also understands facial expressions like laughter, crying, or scowling.

It is used to detect faces in low light conditions or night mode. It recognizes blood flow, skin color, and other determinants to make a decision. It’s popular with DSLR cameras or the latest versions of iPhones.

Although facial recognition algorithms are storming the charts, a few drawbacks must be resolved.

Did you know? Facial recognition has recently been in the news for biased output. Algorithms produced “false positives” for African and Asian faces 10 to 100 times more often than for white faces.
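To show what a gap like that means in practice, the sketch below computes a false positive rate per group from comparison outcomes. The trial counts are synthetic numbers invented purely to illustrate the arithmetic. They are not measurements from any study.

```python
def false_positive_rate(trials):
    """Share of different-person comparisons that the system called a match.

    Each trial is (is_same_person, system_said_match).
    """
    impostor_results = [said for same, said in trials if not same]
    if not impostor_results:
        return 0.0
    return sum(impostor_results) / len(impostor_results)

# Synthetic example data: 1,000 different-person comparisons per group.
group_a = [(False, True)] * 1 + [(False, False)] * 999
group_b = [(False, True)] * 10 + [(False, False)] * 990

rate_a = false_positive_rate(group_a)
rate_b = false_positive_rate(group_b)
print(rate_a, rate_b, rate_b / rate_a)
```

Both rates look small in isolation, which is why auditing a system group by group, rather than relying on one overall accuracy figure, is what reveals the disparity.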

Facial recognition software (FRS) screens users and cross-verifies them against existing records. For example, if you apply for a driver’s license, the software scans your face as a “new applicant” and assigns you a new number. If you apply again, the system will trigger the “record already exists” action and decline your application.
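The duplicate check in the driver's license example can be sketched as a small registry. The `FaceRegistry` class, the three-number face vectors, and the `max_distance` tolerance are hypothetical and chosen for illustration; they are not the interface of any real product.

```python
import math

class FaceRegistry:
    """Toy registry that refuses a second enrollment of the same face."""

    def __init__(self, max_distance=0.5):
        self.records = {}              # record number -> stored face vector
        self.max_distance = max_distance
        self.next_id = 1

    def apply(self, face_vector):
        """Return (status, record number) for an application."""
        for record_id, stored in self.records.items():
            if math.dist(face_vector, stored) <= self.max_distance:
                return "record already exists", record_id
        record_id = self.next_id
        self.records[record_id] = list(face_vector)
        self.next_id += 1
        return "new applicant", record_id

registry = FaceRegistry()
print(registry.apply([0.10, 0.90, 0.40]))   # first application
print(registry.apply([0.12, 0.88, 0.41]))   # same person applies again
print(registry.apply([0.90, 0.10, 0.20]))   # a different person
```

Two scans of the same face never produce identical numbers, so the check compares against a tolerance rather than testing for equality.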

The facial recognition software tool locates faces, creates feature maps, pools facial data for underlying templates, and classifies them using the softmax layer. The softmax classifier uses particular regularization based on distinctive features. It assigns a probability score to each category, and the category with the highest score becomes the output.
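The softmax step described above turns a network's raw scores into probabilities that sum to one. Here is a minimal sketch; the raw scores and the category labels are assumed values, not output from a trained model.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)                      # subtract max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(logits, labels):
    """Return the label with the highest probability and that probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

labels = ["alice", "bob", "unknown"]
raw_scores = [2.0, 1.0, 0.1]                 # hypothetical network outputs

print(softmax(raw_scores))
print(predict(raw_scores, labels))
```

Because the probabilities always sum to one, the top score can be read directly as the system's confidence in its chosen category.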

In short, a facial recognition software tool:

Did you know? According to a 2021 survey by the National Institute of Standards and Technology (NIST), facial recognition algorithms now have an error rate of 0.08%, compared to 4.1% in 2014.

The next time you unlock your phone with your face, remember all that goes behind it. Your smartphone stores your facial data and matches it with a scanned pattern to unlock your phone.

Besides smartphones, facial recognition systems have bolstered the security game for many other industries.

AI is used in the prototyping stage of the facial recognition system. The main technology helps a computer learn and interpret patterns from a human face. AI software accepts live data and compares it with stored data to find an exact match.

Facial recognition systems have an error rate of 0.37%, which means the accuracy rate is as high as 99.63%. ID verification or KYC verification algorithms have achieved this peak of accuracy. The Face Recognition Vendor Test (FRVT) by the National Institute of Standards and Technology has confirmed the accuracy of facial recognition algorithms.

Facial recognition compares live image data with a reference database. It identifies facial attributes, like the jawline, cheek apples, eye sockets, or eye focus. Using that data, it calculates relative distances to build faceprints and identify individuals.

Image data is sensitive and needs to be protected from viruses or hackers. Face recognition systems are prone to unethical hacking, data theft, or leakage. Because of this threat, use cases should stay limited. Use cases can be border protection, security check-ins, and so on.

As security and encryption are growing concerns, facial recognition takes center stage. The intricacies of human facial expressions, moods, and blood flow are hard to decipher and duplicate.

Leaving little room for error, facial recognition can potentially save the world. Your machines are trained to interpret and recognize faces, words, and human touch. Soon, they will interact with you as your friends or family do.

Learn about you only look once (YOLO), which has been a trailblazer in the image recognition industry due to its competitive precision and accuracy.

Shreya Mattoo is a former Content Marketing Specialist at G2. She completed her Bachelor's in Computer Applications and is now pursuing a Master's in Strategy and Leadership from Deakin University. She also holds an Advance Diploma in Business Analytics from NSDC. Her expertise lies in developing content around Augmented Reality, Virtual Reality, Artificial intelligence, Machine Learning, Peer Review Code, and Development Software. She wants to spread awareness for self-assist technologies in the tech community. When not working, she is either jamming out to rock music, reading crime fiction, or channeling her inner chef in the kitchen.
