September 28, 2021

by Sagar Joshi / September 28, 2021

by Sagar Joshi / September 28, 2021

Some professionals view data lineage as the GPS of data.

It's because data lineage helps users get a visual overview of the data’s path and transformations. It documents how data is processed, transformed, and transmitted to constitute meaningful information businesses use to run their operations.

Data lineage helps businesses get a granular view of how data flows from source to destination. Many organizations use data virtualization software with data lineage to help them track their data while providing real-time information to users.

Data lineage is the process of identifying the origin of data, recording how it transforms and moves over time, and visualizing its flow from data sources to end-users. It helps data scientists gain granular visibility of data dynamics and enables them to trace errors back to the root cause.

Data lineage informs engineers about data transformations and why they occur. It helps organizations track errors, perform system migrations, bring data discovery and metadata closer together, and implement process changes with less risk.

.png?width=600&name=G2CM_FI072_Learn_Article_Images-%5BData_Lineage%5D_Infographic_V1a%20(1).png)

Strategic business decisions depend on data accuracy. Without good data lineage, it becomes challenging to track data processes and verify them. Data lineage enables users to visualize the complete flow of information from source to destination, making it easier to detect and fix anomalies. With data lineage, users can replay specific portions or inputs of data flow to debug or generate lost output.

In situations where users don't need details on technical lineage, they use data provenance to gain a high-level overview of the data flow. Many database systems leverage data provenance to address debugging and validation challenges.

Data provenance is the documentation of where data comes from and the methods by which it’s produced.

Although data provenance and data lineage have similarities, data provenance is more useful to business users who need a high-level overview of where data is coming from. On the contrary, data lineage includes both business-level and technical-level lineage and provides a granular view of data flow.

Data governance is the set of rules and procedures organizations use to maintain and control data. Data lineage is an essential part of data governance as it informs how data flows from the source to the destination.

Businesses use different tiers of data lineages based on their needs. Lower levels of data lineage provide a simple visual representation of how data flows within an organization, without including specific details about the transformations occurring as it moves through the pipeline. The highest tier is attribute-level data lineage that offers insights into how data flow can be optimized and ways to improve data platforms.

Organizations choose the data lineage tier based on their governance structure, costs incurred in implementation and monitoring, regulatory concerns, and the impact it would have on the business.

Understanding data lineage is a critical aspect of metadata management, making it essential for data warehouse and data lake administrators. Metadata management allows you to view data flow through various systems, making it easier to find all data associated with a particular report or extract, transform, load (ETL) process.

Josef Viehhauser

Platform lead at BMW

Data lineage doesn’t only help you fix issues or perform system migrations, it also enables you to ensure the confidentiality and integrity of data by tracking changes, how they were performed, and who made them.

With data lineage, IT teams can visualize the end-to-end journey of data from start to finish. It makes an IT professional’s job easier and provides business users with the confidence to make effective decisions.

Data lineage tools help you answer the following questions:

The requirements for a data lineage system are primarily determined by an individual’s role and the organization’s objective. However, data lineage can have a significant impact in areas that include:

Professionals see data lineage as a dataGovOps practice where lineage, testing, and sandboxing come under data governance practices.

Wolfgang Strasser

Data Consultant at Cubido Business Solutions GMBH

Wolfgang Strasser added further "The need to understand the dependencies between the data islands and systems in organizations is vital. It's not only required from a technical point of view; the better you know how your data flows between systems allows you to react better and see where a piece of information originated from as well as the transformations that were applied on the way to the destination system. In some of our projects, we've been able to find system dependencies that even the customer wasn’t aware of."

There are various ways data lineage can help individuals in different job roles. For example, an ETL developer can find bugs in an ETL job and check for any modifications in data fields like column deletions, additions, or renaming. A data steward can use lineage to identify the least and most useful data asset in an ETL job. For business users, it helps to check the accuracy of reports and identify the processes and jobs involved when wrong reports are generated.

Data lineage also finds its application in machine learning, where it’s used to retrain models based on new or modified data. It also helps reduce model drift. Model drift refers to the degradation of model performance due to changes in data and relationships between input and output variables.

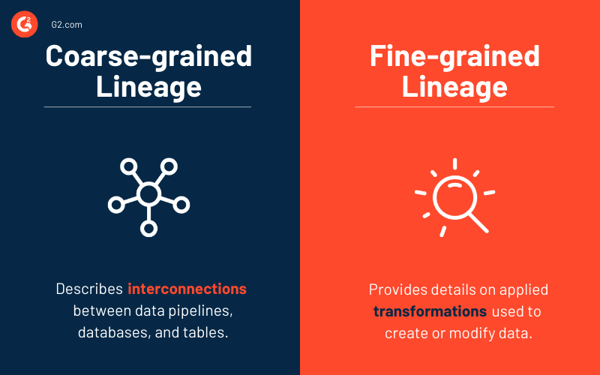

Academic scholars sometimes use coarse-grained and fine-grained data lineage differently, but the concept basically covers the level of data lineage that a user can get.

Coarse-grained data lineage describes data pipelines, databases, tables, and how they’re interconnected. Typically, a lineage collection system accumulates coarse-grained lineage at run time. They capture the interconnectedness between data pipelines, databases, and tables without details on transformations used to modify data. This helps them lower their capture overheads (detailed information about data flow). In a situation where a user wants to conduct forensic analysis for debugging purposes, they’d have to replay the data flow in order to collect fine-grained data lineage.

On the other hand, fine-grained data lineage covers detailed applied transformations that create or modify data. Active lineage collection systems capture coarse-grained or fine-grained data lineage at run time. It enables excellent replay and debugging. However, capture overheads are high due to the volume of fine-grained lineage data.

Data lineage helps organizations trace data flow throughout the lifecycle, see dependencies, and understand transformations. Teams leverage the granular view of data flow and use it for many purposes.

There is confusion in situations where sales numbers don’t match the finance department’s records, and it’s challenging to pinpoint where the actual error exists. Data lineage provides a reasonable explanation for such instances. Business intelligence (BI) managers can use data lineage to track the complete data flow and see any modifications made during processing.

Regardless of whether an error exists, BI managers can feel confident providing a reasonable explanation for the situation. If there is an error, teams can rectify it at its source, enabling uniformity of end-user data across different teams.

While upgrading or migrating to a new system, it’s essential to understand which datasets are relevant and which have become obsolete or non-existent. Data lineage helps you know the data you actually use to carry out business operations and limit spending on storing and managing irrelevant data.

With data lineage, you can seamlessly plan and execute system migrations and updates. It helps you visualize the data sources, dependencies, and processes, enabling you to know exactly what you need to migrate.

Any good business identifies reports, data elements, and end-users affected before implementing a change. Data lineage software helps teams visualize downstream data objects and measure the impact of the change.

Data lineage lets you see how business users interact with data and how a change would affect them. It helps businesses understand the impact of a particular modification and allows them to decide if they should follow through.

Organizations can perform data lineage on strategic datasets using a few standard techniques. These techniques ensure that every data transformation or processing is tracked, enabling you to map data elements at every stage when information assets go through processes.

Data lineage techniques collect and store metadata after each data transformation, which is later used for data lineage representation.

Lineage by parsing one of the most advanced lineage forms that reads the logic used to process data. You can get comprehensive end-to-end traceability by reverse engineering data transformation logic.

Lineage by parsing technique is relatively complicated to deploy as it requires understanding all tools and programming languages used to transform and process data. This can include ETL logic, structured query language (SQL) based solutions, JAVA solutions, extensible markup language (XML) solutions, legacy data formats, and more.

It's tricky to create a data lineage solution that supports a dozen of programming languages, and various tools that support dynamic processing add to its complexity. While choosing a data lineage solution, ensure that it accounts for input parameters, runtime information, and default values and parses all these elements to automate end-to-end data lineage delivery.

Pattern-based lineage uses patterns to provide lineage representation instead of reading any code. Pattern-based lineage leverages metadata about tables, reports, and columns and profiles them to create a lineage based on common similarities and patterns.

You without a doubt have the advantage of monitoring data instead of algorithms in this technique. Your data lineage solution doesn’t have to understand programming languages and tools used to process data. It can be used in the same way across any database technology like Oracle or MySQL. But at the same time, this technique doesn’t always show accurate results. Many details, such as transformation logic, aren’t available.

This approach is suitable for data lineage use cases when understanding programming logic isn’t possible because of inaccessible or unavailable code.

Self-contained lineage tracks every data movement and transformation within an all-inclusive environment that provides data processing logic, master data management, and more. It becomes easy to track data flow and its lifecycle.

Still, the self-contained solution remains exclusive to one specific environment and is blind to everything outside it. As new needs appear and new tools are used to process data, the self-contained data lineage solution can fall short on delivering the expected results.

With lineage by data tagging, each piece of data that moves or transforms gets tagged by a transformation engine. All tags are then read from start to finish to produce a lineage representation. Although it appears to be an effective data lineage technique, it only works if there is a consistent transformation engine or tool to control data movement.

This technique excludes data movements outside the transformation engine, making it suitable for performing data lineage on closed data systems. In some cases, this might not be a preferred data lineage technique. For example, developers refrain from adding formal data columns to the solution model at every touchpoint for data movements.

Blockchain is one potential solution to address complexities of lineage by data tagging, but it doesn’t have enough widespread adoption to cause a significant impact on data lifecycle in organizations.

Manual lineage involves talking to people to understand the flow of data in an organization and documenting it. You can interview application owners, data integration specialists, data stewards, and others associated with the data lifecycle. Next, you can define the lineage using spreadsheets with simple mapping techniques.

At times, you may find contradictory information or miss interviewing someone, leading to improper data lineage. While going through the code, you’ll also have to manually review tables, compare columns, and so on, making it a time-consuming and tedious process. The dynamically growing code volume and its complexity add to manual data lineage complications.

Regardless of these challenges, this approach proves beneficial to understand what’s going on in an environment. Manual data lineage also proves effective when code is unavailable or inaccessible.

Implementing data lineage strongly depends on your organization’s data culture. Ensure you have an established data management framework and build a strong collaboration with data management professionals and other stakeholders for successful data lineage implementation.

Follow these seven steps to successfully implement data lineage in your organization.

Lineage helps you get trustworthy and accurate data to support your company’s decision-making process. Planning and implementing is a critical element of data governance - you need to be sure where your data is coming from and where it’s taking you.

There are a few practices you can consider while planning and implementing data lineage in your organization:

Data lineage allows organizations to get granular visibility of data flow throughout the lifecycle and helps them identify the root cause of errors, manage data governance, conduct impact analysis, and make data-driven business decisions.

Documenting data lineage can be tricky, but it’s beneficial for organizations to effectively understand and use their data.

Learn more about how to get real-time data to make strategic business decisions with data virtualization.

Sagar Joshi is a former content marketing specialist at G2 in India. He is an engineer with a keen interest in data analytics and cybersecurity. He writes about topics related to them. You can find him reading books, learning a new language, or playing pool in his free time.

The real challenge for data teams is how to choose a data science and machine learning...

by Yashwathy Marudhachalam

by Yashwathy Marudhachalam

As a marketing professional, I am best friends with data. If we zoom in to the absolute core...

.png) by Shreya Mattoo

by Shreya Mattoo

The real challenge for data teams is how to choose a data science and machine learning...

by Yashwathy Marudhachalam

by Yashwathy Marudhachalam