June 5, 2024

by Hardik Shah / June 5, 2024

For ages, computers have tried to mimic the human brain and its sense of intelligence.

The story of artificial neural networks dates back to the 1950s. Engineers have long been fascinated by the quick, on-point decision-making of the human brain and have strived to replicate it in computers. This work later took shape as neural network learning, or deep learning. One specific branch, artificial neural network software, has been used extensively for machine translation and sequence prediction tasks.

Learn how computers narrowed the gap with human reflexes and gained the ability to handle language translation, conversation, sentence modification, and text summarization. With artificial neural networks, computers can now process words and interpret them in a human-like way.

Keep reading to find out how you can deploy artificial neural network software to power contextual understanding within your business applications and simulate human actions via computers.

An artificial neural network, also known as a neural network, is a sophisticated deep learning model that loosely replicates the biological functioning of the human brain, in particular the mechanism of the central nervous system. In its basic feedforward form, each input signal is treated as independent of the next, so the network assumes no relationship between one input sample and the one that follows.

Because the basic network predicts its output from each individual input on its own, sequential data such as text calls for specialized variants, which are covered later in this article.

When a dozen terms like artificial intelligence, machine learning, deep learning, and neural networks are thrown around, it’s easy to get confused. However, the actual difference between these artificial intelligence terms is not that complicated.

The answer is exactly how the media defines it. An artificial neural network is a system of data processing and output generation that replicates the neural system to unravel non-linear relations in a large dataset. The data might come from sensory routes and might be in the form of text, pictures, or audio.

The best way to understand how an artificial neural network works is by understanding how a natural neural network inside the brain works and drawing a parallel between them. Neurons are the fundamental component of the human brain and are responsible for learning and retention of knowledge and information as we know it. You can consider them the processing unit in the brain. They take the sensory data as input, process it, and give the output data used by other neurons. The information is processed and passed until a decisive outcome is attained.

An artificial neural network is based on a human brain mechanism. The same scientific process is used to extract responses from the artificial neural network software and generate output.

The basic neural network in the brain is connected by synapses. You can visualize them as the end nodes of a bridge that connects two neurons. So, the synapse is the meeting point for two neurons. Synapses are an important part of this system because the strength of a synapse determines the depth of understanding and the retention of information.

Source: neuraldump.net

All the sensory data that your brain collects in real time is processed through these neural networks. They have a point of origination in the system. As they are processed by the initial neurons, the processed form of an electric signal coming out of one neuron becomes the input for another neuron. This micro-information processing at each layer of neurons is what makes this network effective and efficient.

By replicating this recurring theme of data processing across the neural network, ANNs can produce superior outputs.

In an ANN, everything is designed to replicate this very process. Don’t worry about the mathematical equation. That’s not the key idea to be understood right now. Each input, labeled ‘X’, is multiplied by a weight, ‘W’, to generate a weighted signal. This replicates the role of a synapse’s strength in the brain. A bias term is then added to shift the result before it is passed to the function that produces the output.

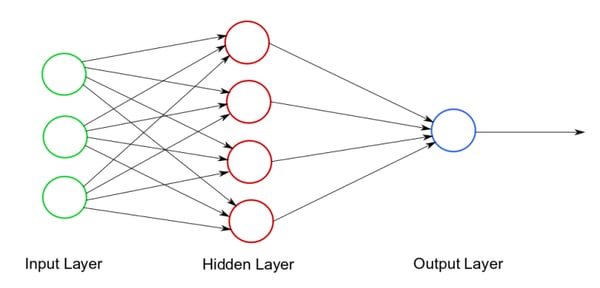
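The weighted signal and bias described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the input values, weights, bias, and the choice of a binary step as the function are all assumptions made for the example.

```python
def step(z):
    """Binary step activation: fire (1) when the signal is non-negative, else 0."""
    return 1 if z >= 0 else 0


def neuron_output(inputs, weights, bias):
    """Multiply each input X by its weight W, add the bias, then apply the activation."""
    weighted_signal = sum(x * w for x, w in zip(inputs, weights))
    return step(weighted_signal + bias)


# Hypothetical values chosen only for illustration.
X = [1.0, 0.0, 1.0]
W = [0.6, -0.4, 0.3]
b = -0.5

# Weighted signal is about 0.9; adding the bias leaves about 0.4, so the neuron fires.
print(neuron_output(X, W, b))
```

Lowering the bias to, say, -1.0 would keep the same neuron silent for the same inputs, which is how the bias controls the output.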

So, all of this data is processed in the function, and you end up with an output. That’s what a one-layer neural network or a perceptron would look like. The idea of an artificial neural network revolves around connecting several combinations of such artificial neurons to get more potent outputs. That is why the typical artificial neural network’s conceptual framework looks a lot like this:

Source: KDnuggets

We’ll soon define these layers as we explore how an artificial neural network functions. But for a rudimentary understanding of an artificial neural network, you know the first principles now.

This mechanism is used to decipher large datasets. The output generally tends to be an establishment of causality between the variables entered as input that can be used for forecasting. Now that you know the process, you can fully appreciate the technical definition here:

“Artificial neural network is a network modeled after the human brain by creating an artificial neural system via a pattern recognizing computer algorithm that learns from, interprets and classifies sensory data"

Brace yourself; things are about to get interesting here. And don’t worry – you don’t have to do a ton of math right now.

The magic happens first at the activation function. The activation function does the initial processing to determine whether the neuron will be activated or not. If the neuron is not activated, its output is zero or close to it, so it passes nothing meaningful on to the next layer. This is critical to have in a neural network; otherwise, the system would be forced to process a ton of information that has no impact on the output. You see, the brain has limited capacity, but it has been optimized to make the best use of it.

One central property common across all artificial neural networks is the concept of non-linearity. Most variables studied possess a non-linear relationship in real life.

Take, for instance, the price of chocolate and the number of chocolates. Assume that one chocolate costs $1. How much would 100 chocolates cost? Probably $100. How much would 10,000 chocolates cost? Not $10,000; either the seller will add the cost of using extra packaging to put all the chocolates together, or she will reduce the cost since you are moving so much of her inventory off her hands in one go. That is the concept of non-linearity.

An activation function will use basic mathematical principles to determine whether the information is to be processed or not. The most common forms of activation functions are the Binary Step Function, Logistic Function, Hyperbolic Tangent Function, and Rectified Linear Units. Here’s the basic definition of each one of these:
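As a rough sketch, the four activation functions named above can be written with their standard textbook definitions. The function names and the sample inputs are chosen here for illustration only.

```python
import math


def binary_step(z):
    """Outputs 1 when the input is non-negative, otherwise 0."""
    return 1.0 if z >= 0 else 0.0


def logistic(z):
    """Squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def hyperbolic_tangent(z):
    """Squashes any input into the range (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Rectified linear unit: passes positive inputs through, zeroes out the rest."""
    return max(0.0, z)


# Compare the four functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, binary_step(z), round(logistic(z), 3),
          round(hyperbolic_tangent(z), 3), relu(z))
```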

Look at the same two diagrams of a perceptron and a neural network. What is the difference, apart from the number of neurons? The key difference is the hidden layer. A hidden layer sits right between the input layer and the output layer in a neural network. Its job is to refine the processing and eliminate variables that will not have a strong impact on the output.

If there are many instances in a dataset where the impact of the change in the value of an input variable is noticeable on the output variable, the hidden layer will show that relationship. The hidden layer makes it easy for the ANN to give out stronger signals to the next layer of processing.
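To make the role of the hidden layer concrete, here is a small sketch of a network with one hidden layer that computes XOR ("one input or the other, but not both"), a relationship a single perceptron cannot represent. The weights and biases are hand-picked for illustration rather than learned, and the binary step activation is an assumption of the example.

```python
def step(z):
    """Binary step activation."""
    return 1 if z >= 0 else 0


def tiny_network(x1, x2):
    # Hidden layer: two neurons with hand-picked (not learned) weights and biases.
    h1 = step(1.0 * x1 + 1.0 * x2 - 0.5)  # fires if at least one input is on
    h2 = step(1.0 * x1 + 1.0 * x2 - 1.5)  # fires only if both inputs are on
    # Output layer: combines the two refined hidden signals.
    return step(1.0 * h1 - 1.0 * h2 - 0.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), "->", tiny_network(x1, x2))
```

The hidden neurons turn the raw inputs into two stronger, more useful signals, and the output neuron only has to combine them.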

Even after doing all this math and understanding how the hidden layer operates, you might be wondering how an artificial neural network actually learns.

Learning, in the simplest terms, is establishing causality between two things (activities, processes, variables, etc.). This causality might be difficult to establish, since correlation does not equal causation. So if it is difficult to comprehend which variable impacts the other, how does an ANN algorithm understand this?

This can be done mathematically. The error is measured as the squared difference between the dataset's actual value and the network's output value. You can think of it as the degree of error. We square it because the raw difference can sometimes be negative. This measure is called the cost function.
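A minimal sketch of this squared-difference measure is shown below. Averaging over all examples (the mean squared error) is one common convention and is an assumption here, as are the sample values.

```python
def squared_error_cost(actual_values, output_values):
    """Mean of the squared differences between actual values and network outputs."""
    squared_differences = [
        (actual - output) ** 2
        for actual, output in zip(actual_values, output_values)
    ]
    return sum(squared_differences) / len(squared_differences)


# Hypothetical values: the second output misses by +0.5, the first by -0.5.
actual = [1.0, 0.0, 1.0]
outputs = [0.5, 0.5, 1.0]

# Squaring keeps both misses positive, so they add up instead of cancelling out.
print(squared_error_cost(actual, outputs))
```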

You can score each cycle of input-to-output processing with the cost function. Your job, and the ANN’s, is to minimize the cost function to its lowest possible value. You achieve this by adjusting the weights in the ANN. (Weights are the numeric values assigned to each input that determine how strongly it influences the output.) There are several ways of doing this, but as long as you understand the principle, you would just be using different tools to execute it.

With each cycle, we aim to minimize the cost function. The process of going from input to output is called forward propagation. The process of using the output error to minimize the cost function by adjusting the weights in reverse order, from the output layer back toward the input layer, is called backpropagation. (The variant used for recurrent networks is known as backpropagation through time, or BPTT.)

You can keep adjusting these weights using either the brute-force method, which becomes inefficient when the network or dataset is too big, or batch gradient descent, which is an optimization algorithm. Now, you have an intuitive understanding of how an artificial neural network learns.
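Here is a minimal sketch of batch gradient descent on the simplest possible case: a single linear neuron with one weight and one bias. The function name, the learning rate, the number of cycles, and the toy dataset are all assumptions made for illustration.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit output = w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward propagation: compute the outputs for the whole batch.
        outputs = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (o - y) * x for o, y, x in zip(outputs, ys, xs)) / n
        grad_b = sum(2 * (o - y) for o, y in zip(outputs, ys)) / n
        # Adjust the weight and bias in the direction that lowers the cost.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy dataset generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

In a multi-layer network, backpropagation supplies the same kind of gradients for every weight in every layer. The update step stays the same.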

Understanding two common forms of neural networks, convolutional and recurrent, can also be your introduction to two different facets of AI application: computer vision and natural language processing. In the simplest terms, these two branches of AI help a machine label the components of an image and interpret text sequences.


Convolutional neural networks are ideally suited to computer vision tasks in artificial intelligence systems. These networks analyze image features and interpret their positions to label the image or any component within the image. Apart from the generally used neural activation functions, they add a convolution function and a pooling function. A convolution function, in simpler terms, shows how an input image and a second, smaller image (a filter) combine to produce a third image (the result). This is also known as feature mapping. You can imagine it as a filter (a new set of pixel values) sliding across your input image (the original set of pixel values) to produce a resulting image (changed pixel values). The pooling function then shrinks the result by keeping only the strongest values. Finally, the extracted features are fed to a classifier, typically a set of fully connected layers or, in some pipelines, a support vector machine, which assigns the image to a category.
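The convolution and pooling steps can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions: the 5x5 image and the 2x2 filter are made up, no padding is used, and the filter is applied without flipping (strictly speaking a cross-correlation, which is what most deep learning libraries compute under the name "convolution").

```python
def convolve2d(image, kernel):
    """Slide the filter over the image (no padding, stride 1), summing elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked filter that responds to a dark-to-bright step from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)  # 4x4 result; each row is [0, 2, 0, 0]
pooled = max_pool2d(feature_map)         # 2x2 result: [[2, 0], [2, 0]]
print(feature_map)
print(pooled)
```

The feature map lights up only where the vertical edge sits, and pooling keeps that signal while shrinking the image.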

Recurrent neural networks, or RNNs, establish the relationship between parts of a text sequence to clarify the context and generate potent output. They are designed for sequential data, where the connections between steps form a directed graph along the temporal sequence. This allows an RNN to retain information from previous inputs while working with the current input. The hidden state from the previous time step is fed back into the same hidden layer at the current time step, along with the new input word. This mechanism makes RNNs well-suited for tasks such as language modeling, speech recognition, and time series prediction. By maintaining a form of memory through hidden states, RNNs can effectively capture patterns and dependencies in sequences and can process input sequences of varying lengths.
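The hidden-state mechanism can be sketched with a deliberately tiny recurrent cell that uses a single number for the input and for the hidden state. The weight names (`w_xh`, `w_hh`, `b_h`), their values, and the tanh activation are assumptions for illustration. Real RNNs use vectors and matrices and learn these weights.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One time step: combine the current input with the previous hidden state."""
    return math.tanh(w_xh * x + w_hh * h_prev + b_h)


def run_rnn(sequence, w_xh=0.5, w_hh=0.8, b_h=0.0):
    """Process a sequence of any length, carrying the hidden state forward."""
    h = 0.0  # initial hidden state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# The same inputs in a different order leave a different final hidden state.
print(run_rnn([1.0, 0.0, 0.0]))
print(run_rnn([0.0, 0.0, 1.0]))
```

In the first sequence, the hidden state stays above zero after the inputs drop to zero, which is the "memory" described above.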

What we’ve talked about so far was all going on underneath the hood. Now we can zoom out and see these ANNs in action to fully appreciate their bond with our evolving world:

One of the earliest applications of ANNs has been personalizing eCommerce platform experiences for each user. Do you remember the really effective recommendations on Netflix? Or the just-right product suggestions on Amazon? They are a result of the ANN.

There is a ton of data being used here: your past purchases, demographic data, geographic data, and data showing what people who bought the same product bought next. All of these serve as inputs to determine what might work for you. At the same time, what you actually buy feeds back into the algorithm: every new purchase made on the platform enriches the company's data and improves the ANN's ability to recommend the right products to you.

Not long ago, chat boxes started picking up steam on websites. An agent would sit on one side and help you out with the queries you typed in the box. Then natural language processing (NLP) was introduced to chatbots, and everything changed.

NLP generally uses statistical rules to replicate human language capacities and, like other ANN applications, gets better with time. Your punctuations, intonations and enunciations, grammatical choices, syntactical choices, word and sentence order, and even the language of choice can serve as inputs to train the NLP algorithm.

The chatbot becomes conversational by using these inputs to understand the context of your queries and formulate answers that best suit your style. The same NLP is also being used for audio editing in music and security verification purposes.

Most of us follow the outcome predictions made by AI-powered algorithms during presidential elections and the FIFA World Cup. Since both events are phased, the algorithm can quickly gauge its efficacy and minimize the cost function as teams and candidates get eliminated. The real challenge in such situations is the sheer number of input variables. From candidates to player stats to demographics to anatomical capabilities, everything has to be incorporated.

In stock markets, predictive algorithms that use ANNs have been around for a while now. News updates and financial metrics are the key input variables used. Thanks to this, most exchanges and banks are easily able to trade assets under high-frequency trading initiatives at speeds that far exceed human capabilities.

The problem with stock markets is that the data is always noisy. Randomness is very high because the degree of subjective judgment, which can impact the price of a security, is very high. Nevertheless, every leading bank these days is using ANNs in market-making activities.

ANNs are used to calculate credit scores, sanction loans, and determine risk factors associated with applicants registering for credit.

All lenders can analyze customer data with strongly established weights and use the information to determine the candidate's risk profile associated with loan application. Your age, gender, city of residence, school of graduation, industry of engagement, salary, and savings ratio are all used as inputs to determine your credit risk scores.

What was earlier heavily dependent on your individual credit score has now become a much more comprehensive mechanism. That is the reason why several private fintech players have jumped into the personal loans space to run the same ANNs and lend to people whose profiles are considered too risky by banks.

Tesla, Waymo, and Uber have been using ANNs in their self-driving systems. They combine ANNs with related techniques, such as object recognition, to build sophisticated and intelligent self-driving cars.

Much of self-driving has to do with processing information that comes from the real world in the form of nearby vehicles, road signs, natural and artificial lights, pedestrians, buildings, and so on. Obviously, the neural networks powering these self-driving cars are more complicated than the ones we discussed here, but they do operate on the same principles that we expounded.

Based on G2 user reviews, we have curated a list of the top five artificial neural network software tools for businesses in 2024. These tools help optimize data engineering and data development workflows while adding intelligent features and benefits to your core product domain.

Google Cloud Deep Learning VM Image provides high-performance virtual machines pre-configured specifically for deep learning tasks. These VMs come pre-installed with the tools you need, including popular frameworks like TensorFlow and PyTorch, essential libraries, and NVIDIA CUDA/cuDNN support to leverage your GPU for faster processing. This minimizes the time you spend setting up your environment and lets you jump right in. Deep Learning VM Images also integrate with Vertex AI, allowing you to manage training and allocate resources cost-effectively.

"You can quickly provision a VM with everything you need for your deep learning project on Google Cloud. Deep Learning VM Image makes it simple and quick to create a VM image containing all the most popular."

- Google Cloud Deep Learning VM Image Review, Ramcharn H.

"The learning curve to use the software is quite steep."

- Google Cloud Deep Learning VM Image Review, Daniel O.

The Microsoft Cognitive Toolkit (formerly CNTK) empowers businesses to optimize data workflows and reduce analytics expenditure through a high-performance, open-source deep learning framework. CNTK leverages automatic differentiation and parallel GPU/server execution to train complex neural networks efficiently, enabling faster development cycles and cost-effective deployment of AI solutions for data analysis.

"Most helpful feature is easy navigation and low code for model creation. Any novice can easily understand the platform and create models easily. Support for various libraries for different languages makes it stand out! Great product compared to Google AutoML."

- Microsoft Cognitive Toolkit (Formerly CNTK), Anubhav I.

"Less control to customize the services to our requirements and buggy updates to CNTK SDKs which sometimes breaks the production code."

- Microsoft Cognitive Toolkit (Formerly CNTK), Chinmay B.

AIToolbox leverages a high-performance, modular artificial neural network architecture for scalable data processing. It simplifies complex tasks and supports tools and techniques such as TensorFlow, support vector machines, regression, exploratory data analysis, and principal component analysis, which your teams can use to generate forecasts and predictions. The framework helps businesses streamline workflows, optimize feature engineering, and reduce the computational overhead associated with traditional analytics, ultimately delivering cost-effective AI-powered insights.

"Aitoolbox offers many tutorials, article and guides that help learn about new technologies. Aitoolbox provides access to various ai and ml tools and libraries, making it easier for user to implement and experimental with new technologies.aitoolbox is designed to be user friendly with features that make it easy for users access and use the platform."

- AIToolbox Reviews, Hem G.

"AIToolbox encounters limitations within its specific context. Consequently, there exists a deficiency in general intuition, and the AI's precision is not always absolute. Emotions and security measures are absent from its functionalities."

- AIToolbox Review, Saurabh B.

Caffe offers a high-throughput, open-source deep learning framework for rapid prototyping and deployment. Its modular architecture supports diverse neural network topologies (CNNs, RNNs) and integrates with popular optimization algorithms (SGD, Adam) for efficient training on GPUs and CPUs. This empowers businesses to explore cost-effective AI solutions for data analysis and model development.

"The upsides of using Caffe are its speed, flexibility, and scalability. It’s incredibly fast and efficient, allowing you to quickly design, train, and deploy deep neural networks. It provides a wide range of useful tools and libraries, making it easier to create complex models and to customize existing ones. Finally, Caffe is very scalable, allowing you to easily scale up your models to large datasets or to multiple machines, making it an ideal choice for distributed training."

- Caffe Review, Ruchit S.

"Being in research department and doing more of deep learning work on images , I would require openCL which is still need to add more features. So I need to switch to other software for that some. Would be better if it has openCl features added."

- Caffe Review, Sonali S.

Synaptic.js is an open-source JavaScript neural network library for Node.js and the browser. It is architecture-free, so you can build, train, and test a range of artificial neural network architectures with simple function calls. Because it runs wherever JavaScript runs, trained networks can be deployed alongside the rest of your application.

"It is very easy to build a neural network in Javascript by making use of Synaptic.js. It includes built-in architectures like multilayer perceptron, Hopfield networks, etc. Also, there aren't many other libraries out there that allow you to build a second order network."

- Synaptic.js Review, Sameem S.

"Not suitable for a large neural network. It needs more work on the documentation; some of the links on the readme are broken."

- Synaptic.js, Chetan D.

ANNs are getting more sophisticated by the day. ANN-powered tools are now helping in early mental health diagnosis, medical imaging, and drone delivery. As ANNs become more complex and advanced, the need for human intervention in these systems will decrease. Even areas like design have started deploying AI solutions in the form of generative design.

Learn how the future of artificial intelligence, which is artificial general intelligence, will set the stage for vast industrial automation and AI globalization.

This article was originally published in 2020. It has been updated with new information.

Hardik Shah is a Tech Consultant at Simform, an application development services company. He leads large scale mobility programs that cover platforms, solutions, governance, standardization, and best practices. Connect with him to discuss the best practices of software methodologies.
