Growth requires change.

If your brand doesn't adapt to audience needs, that's reason enough for them to move to your competitors.

But how do you make the right changes? Any decision you make will impact your digital presence and brand recognition. Therefore, whatever changes you make must be positive and sustainable.

Instead of making random changes and hoping for the best, you should adopt a strategic and logical approach with the help of A/B testing.

A/B testing is the process of comparing two versions of a website or campaign to see which one appeals more to visitors. It helps brands choose the version that results in better engagement, user experience, and other metrics.

With A/B testing tools, it becomes easy to track which variation of your landing page or content encourages more visitors to convert and engage.

In simple terms, this approach is all about finding the best possible option using data and statistical analysis. So, rather than relying on guesswork and intuition, you utilize data to optimize your website and drive key business metrics.

The key to growing a successful business is identifying what’s working and what isn’t. A/B testing offers this, along with a better understanding of user behavior, audience pain points, and website performance.

An A/B test also lets you test changes on a smaller group within a controlled environment, minimizing the risk of shipping something that doesn't resonate with your target audience. It's a cost-effective and time-saving strategy.

Ideally, A/B testing should be integral to your marketing campaigns. It helps optimize the better-performing elements while understanding what needs to be changed or improved.

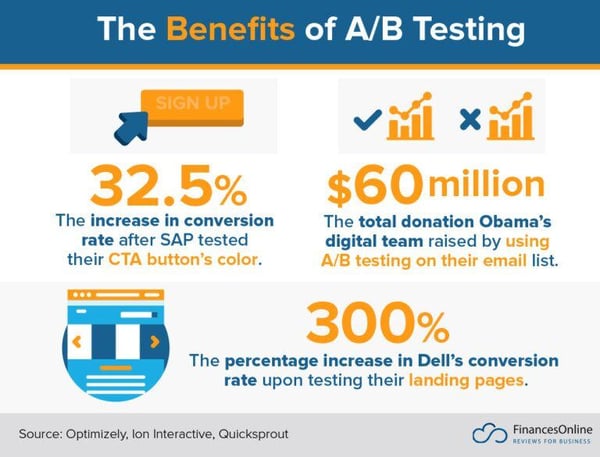

Source: Finances Online

While dealing with common conversion problems like cart abandonment and page drop-offs, businesses often overlook the core issue—bad user experience. This usually happens when visitors face problems that prevent them from achieving a particular goal on your website.

Regular A/B testing helps identify these pain points while allowing you to make calculated changes to improve user experience. Moreover, it identifies factors that most impact user experiences so you can further optimize key elements and improve your core conversion metrics.

In A/B testing, you have a “control,” which is the original version of a page, and a “variation,” which is the updated version. During the test, both versions are shown simultaneously to randomly selected visitors for a specific duration.

At the end of the test, the results are analyzed to determine which version performed better in terms of the previously defined goals.

Common conversion goals for A/B testing include increasing purchases, add-to-carts, form submissions, free trial sign-ups, and so on. A/B testing platforms randomly assign visitors to the control and variation. The version that performs better is declared the winner of the A/B test.
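The random assignment described above can be sketched in a few lines. This is a minimal illustration, not how any particular A/B testing platform works: the function name `assign_variant`, the experiment name `homepage-cta`, and the visitor IDs are all hypothetical. It hashes the visitor ID so that the same visitor always sees the same version, while traffic is split roughly evenly.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str,
                   variants=("control", "variation")) -> str:
    """Deterministically map a visitor to one variant of an experiment."""
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same audience independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into a bucket index (0 or 1 for two variants).
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical usage: assign 10,000 made-up visitors and count the split.
counts = {"control": 0, "variation": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-cta")] += 1
print(counts)
```

Hashing rather than calling a random number generator on every page view is a design choice: returning visitors get a consistent experience without any stored state.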

Because visitors are assigned at random and the results are analyzed against predefined goals at the end of the test, A/B testing lets you measure whether the impact of the changes you made in the variation is statistically significant.
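One common way to check significance for conversion rates is a two-proportion z-test. The sketch below is an assumption about how such a check could be done by hand; the article does not say which statistical method any given tool uses, and the visitor and conversion counts are invented for illustration.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) comparing conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: control converts 200 of 5,000, variation 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below a threshold chosen before the test (0.05 is a common convention) suggests the difference is unlikely to be due to chance alone.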

Also, once you determine a winner, this new version can be used as the “control” variation. You can then choose to continue the A/B testing process or directly implement the changes on the page.

Moreover, A/B testing is an essential part of conversion rate optimization (CRO) – a process where visitor behavior is analyzed to optimize the overall page experience and boost conversion rates. A conversion occurs when a visitor takes the desired action on your website or app.

The ultimate goal of A/B testing is to improve conversions, i.e., persuade more visitors to take the action you want them to take. This action could be anything from clicking a call-to-action (CTA) button or adding items to the cart to scrolling past the 50% mark of the page or viewing a video.
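To make "improving conversions" concrete, here is a small sketch of the two numbers most test reports revolve around: the conversion rate of each version and the relative lift of the variation over the control. The function names and the figures are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who took the desired action."""
    return conversions / visitors

def relative_lift(control_rate: float, variation_rate: float) -> float:
    """Relative improvement of the variation over the control."""
    return (variation_rate - control_rate) / control_rate

# Hypothetical numbers: 40 of 1,000 visitors convert on the control,
# 50 of 1,000 on the variation.
control = conversion_rate(40, 1000)
variation = conversion_rate(50, 1000)
print(f"control {control:.1%}, variation {variation:.1%}, "
      f"lift {relative_lift(control, variation):.0%}")
```

Note that a lift is relative: moving from 4% to 5% is one percentage point in absolute terms but a 25% relative increase.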

You can experience the benefits of A/B testing in many ways. Let's take a look at the different methods to conduct A/B tests.

Split URL testing is an experiment where two versions of the same web page, each hosted on its own URL, are tested against each other. In scenarios where you wish to test significant changes on an existing page, like a homepage redesign, you should opt for split URL testing.

In multivariate testing, the idea is to change multiple variables on the same page and test all possible combinations simultaneously to see which performs best. For example, using this method, you can create multiple variations of a form where changes to the headline, number of fields, and the CTA button design are tested simultaneously.
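The "all possible combinations" part is easy to underestimate. As a rough sketch, the form example above can be enumerated with `itertools.product`; the specific headlines, field counts, and button styles are made up for illustration.

```python
from itertools import product

# Hypothetical options for each element of the form.
headlines = ["Request a demo", "See it in action"]
field_counts = [3, 5]
cta_styles = ["solid", "outline"]

# Every combination of headline, field count, and CTA style is one variation.
variations = [
    {"headline": h, "fields": f, "cta_style": c}
    for h, f, c in product(headlines, field_counts, cta_styles)
]

print(len(variations))
for v in variations:
    print(v)
```

Two options for each of three elements already yield eight variations, and each one needs enough traffic to be evaluated, which is why this method demands far more visitors than a simple two-version test.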

In multipage testing, you change specific elements across several pages and determine which page performs better based on how your visitors interact with them.

Let’s say your sales funnel includes a home page, a product page, and a checkout page. With the help of multipage testing, you can create new versions of each page and test this new set of pages against the original sales funnel. Moreover, you can use this method to test how changes to recurring elements in these pages impact the entire funnel's conversions.

Regardless of the method, a well-defined A/B testing framework allows you to make data-driven decisions, improve website performance, and optimize your target audience's overall experience.

As you experiment with different changes on your website, ensure that your A/B testing platform is tracking important metrics and delivering useful insights for each test.

Here are the common goals tracked during A/B tests that you should focus on while monitoring the different tests on your website.

A/B testing helps improve user experience and discover untapped opportunities to boost website performance. It gives you the right insights to make data-backed decisions to boost marketing strategies further.

Each A/B test helps you better understand how the audience interacts with your website. It also helps you visualize user journeys and identify roadblocks that prevent users from taking a specific action.

Take WorkZone, for example, a US-based software company offering robust project management solutions to organizations. On its lead generation page, visitors were asked to complete a demo request form.

Source: VWO

To build trust and reputation, WorkZone added a “What Customers Say” section next to the form. However, with the help of A/B testing, they discovered that the brand logos added in this section were distracting visitors from filling out the form.

So, they decided to run a quick A/B test with a different variation: the color of all the brand logos was changed to black and white.

Source: VWO

This simple change in the new version generated a 34% increase in form submissions for WorkZone. Similarly, based on the results of your tests, you can also make smart, informed decisions that boost audience engagement and improve the conversion rate.

A/B testing helps you better understand the goals, intentions, preferences, and other crucial aspects related to your target audience. With apt insights, it's easy to tweak certain elements and test various data-backed hypotheses that solve customer pain points and encourage them to take the desired action.

For instance, Northmill is a popular fintech brand from Sweden that wanted to optimize its loan application page. The original version had an application form on the side where visitors could fill in their details and apply for a loan.

Source: VWO

The team at Northmill hypothesized that replacing the side form with a single CTA button would help visitors better understand the company’s offerings, leading to more people applying for the loan.

So, in the new version, the form was replaced with a green “Apply” button at the top-right corner of the page.

Source: VWO

After running the test for almost five months, this new version generated 7% higher conversions.

Besides improving user engagement, the data collected through A/B testing also tells why users quickly drop off or bounce off your website.

For example, Inside Buzz UK (now known as Target Jobs) noticed many visitors dropping off their home page without taking action.

Source: VWO

They decided to update the entire page and run an A/B test to find out which version performed better in terms of engagement. The new version had a simple design with a single CTA button and only included elements considered necessary for the visitors.

Source: VWO

The test results showed that the new variation had a lower bounce rate and a 17.8% increase in user engagement.

Every element in your conversion funnel should be optimized for your target audience. This includes images, headlines, CTA buttons, page design, etc.

When Hubstaff, a leading brand that offers workforce management solutions, wanted to optimize its landing page, it turned to A/B testing.

Source: VWO

With continuous experimentation, the team identified elements on their landing page that were not performing well. Based on this data, they redesigned the entire page and ran a “Split URL” test to compare both versions.

Source: VWO

At the end of the test, the new version saw a 49% increase in visitor-to-trial conversion and a 34% rise in the number of people sharing their emails.

So, A/B testing not only positively impacts conversion rates but also boosts your website's overall performance.

Now that you’ve seen the potential benefits, you can start running successful A/B tests on your website, right?

Well, not exactly.

Before you run any A/B test, consider a few important factors.

Although it seems like a simple idea that can be implemented immediately, A/B testing requires a lot of thinking, research, and analysis.

First, you must understand that A/B testing is not a one-off concept. It's a continuous process that requires proper planning, consistency, and structure.

Moreover, you can’t just make random changes to certain pages and expect positive results. A/B testing is more about creating highly optimized page experiences that can convert visitors and boost your CRO processes.

To efficiently manage these elements, you need a good A/B testing platform that can run continuous tests and provide actionable insights about customers, traffic, performance, and other important factors.

So, here’s how you can start your A/B testing journey:

To create a successful A/B testing strategy, you must first study and analyze your website. Start by understanding its current state, the pages performing well, the type of visitors converting, and the amount of traffic coming through different campaigns.

Tracking performance lets you identify pages that drive the highest traffic or elements with a good potential to convert visitors. This is a solid starting point for your A/B testing plan. You can then shortlist these pages and identify opportunities to optimize or improve their performance first.

Once you finalize the pages you wish to optimize, perform a detailed analysis of the traffic coming through these pages. The best A/B testing tools offer a complete overview of visitor data through features like on-page surveys, heatmaps, and session recordings.

Apart from collecting the usual type of data like age, region, and demographics, these tools gather crucial information like time spent on a page, scrolling behavior, etc. The visitor insights help you identify common pain points in their journey and work on finding optimal solutions.

Now that you’ve analyzed the website and identified problems visitors face, research and develop data-based hypotheses for these issues.

For example, you may observe that a large number of visitors are dropping off without submitting your form. You hypothesize that reducing the number of mandatory fields will result in more people submitting the form. Similarly, you can develop other data-backed hypotheses for this problem.

You can test one or all of these hypotheses against the form's existing version (control). This is the most important part of A/B testing.

Firstly, you need to choose a method for testing your hypotheses. After that, define specific goals for the A/B test based on conversion rate, number of visitors, duration of the test, and so on.

This will help you analyze the results and determine the better-performing version. Ensure that each test version runs simultaneously and for the same duration. Also, make sure that all versions' traffic is split equally (and randomly) whenever possible.
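A quick sanity check on the equal split is to compare the visitor counts each version actually received against the intended 50/50 allocation. This check, often called a sample ratio mismatch test, is not described in the article; the sketch below is one assumed way to do it with a chi-square goodness-of-fit test for two groups, and the counts are invented.

```python
import math

def split_check(visitors_a: int, visitors_b: int) -> float:
    """Return the p-value for the hypothesis that traffic was split 50/50."""
    expected = (visitors_a + visitors_b) / 2
    # Chi-square statistic with one degree of freedom.
    chi2 = ((visitors_a - expected) ** 2 + (visitors_b - expected) ** 2) / expected
    # Survival function of chi-square (1 df) expressed via erfc.
    return math.erfc(math.sqrt(chi2 / 2))

# Hypothetical counts: an even split versus a suspiciously uneven one.
print(split_check(5000, 5000))
print(split_check(5200, 4800))
```

A very small p-value here suggests something other than chance is skewing the allocation (for example, a redirect that fails for some visitors), so the test results should be treated with caution.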

Don't forget to actively monitor the performance of all versions and run the tests for a specific duration to get statistically accurate results.

After your test concludes, evaluate the results.

This is, undoubtedly, one of the most important steps of any A/B test as you finally get a chance to analyze the results based on the previously defined goals.

If your hypothesis is proved right, you can make the changes and replace the existing version with the winning variation. However, if the results fail to deliver a winner, gather relevant insights and continue testing other factors that may lead to positive results.

A/B testing is a continuous process that requires in-depth research and analysis while giving you a chance to boost conversion rates and improve the overall site performance.

Next, start identifying page or site elements with the highest potential to drive revenue.

Creating a strong A/B testing hypothesis can be quite challenging. Here are a few A/B testing ideas for experimentation and analysis.

These ideas will kickstart your A/B testing journey and take your conversion optimization campaigns to the next level.

Although a robust A/B testing framework can boost your marketing campaigns, you might encounter a few challenges during the process.

Most organizations struggle with the A/B testing process when formulating hypotheses, especially if they don't have reliable data. To come up with effective hypotheses, conduct thorough research and identify problem areas in your website.

The sample size of the test group significantly impacts an A/B test's results. Each A/B test should have a large sample size to get accurate and statistically significant test results. If the sample size is too small, the results of your A/B test might be unreliable.
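How large is "large"? A standard approximation for comparing two conversion rates gives a rough answer. The sketch below assumes a two-sided test at a 5% significance level with 80% power, which are common defaults rather than anything prescribed by the article; the baseline rate and minimum lift are hypothetical inputs you would replace with your own.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

# Hypothetical inputs: 4% baseline conversion, detect a 25% relative lift.
print(sample_size_per_variant(0.04, 0.25))
```

The smaller the lift you want to detect, the more visitors each version needs, so subtle changes on low-traffic pages can take a long time to validate.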

Analyzing the results correctly and making informed decisions is another key challenge. Misinterpretation often results in incorrect decisions that can hamper your business growth. So, carefully analyze the test results and remove any bias from external factors you didn't consider.

It's not always easy to make changes based solely on A/B testing. More often than not, various factors like bandwidth issues and resource limitations stand in the way. To successfully implement the changes, create a clear roadmap for your A/B testing program and prepare ahead of time.

Just because a few of your A/B tests failed doesn't mean you should give up. A/B testing requires constant analysis and planning. Determining why your A/B test failed will help you uncover problem areas and gain a better perspective of the audience.

Developing an A/B testing culture that blends seamlessly with your overall marketing strategy is crucial for your website's long-term growth and improvement. Although these challenges are common for any A/B testing program, you can gradually overcome them with some best practices.

A/B testing involves research, statistics, and analysis. But more importantly, it requires consistency.

Here are a few tips and general best practices to keep in mind when managing a successful and efficient A/B testing program.

Before implementing A/B testing on your website, define your goals, expectations, insights, opportunities, and other important aspects of your A/B testing framework. This also involves formulating hypotheses based on reliable data and actionable insights.

Just because Amazon has a bright, orange CTA button that works well for them doesn't mean you should make similar changes to your CTA button. One of the most common A/B testing mistakes is replicating successful test results of other websites on your pages. Instead, you should make calculated decisions based on your website's goals, audience, and traffic to achieve accurate results.

Deciding what to test on your website can be tricky. Based on research and analysis, you need to choose elements that significantly impact traffic and conversions. For example, start by looking at pages that register the highest traffic and identify key elements to optimize and improve conversions.

Running multiple tests simultaneously on the same page isn't a good idea. You may be unable to identify the elements that influenced your results among the tests. When you change only one variable at a time, you give yourself a better chance of understanding the results while avoiding skewed data.

What is the best duration for an A/B test?

Although there's no fixed number, you can determine the duration of a test based on the traffic, goals, and other important aspects of your website. You can use free A/B test duration calculators to better understand your test's ideal length. Running your A/B tests for sufficient time is crucial to ensure reliable and accurate results.

Suppose that your website usually sees a spike in traffic during the weekends. Running your test over multiple weekends can help account for this increase and avoid misinterpreting the test results.

As soon as the test concludes, you should focus on studying the results carefully. Apart from determining a “winner,” these results will also give you some useful insights that you can use to improve future tests and campaigns.

With the help of these best practices, you can give your A/B testing program a better chance of delivering reliable and effective results for your website.

Choosing the right A/B testing tool can make all the difference in optimizing user experiences, improving conversion rates, and making informed product decisions. The best platforms offer more than basic split testing. They provide advanced experimentation, personalization, and deep analytics to ensure every change is backed by data.

To qualify for inclusion in G2's A/B Testing Tools category, a product must:

Below are the top five A/B testing tools from G2’s Winter 2025 Grid® Report. Some reviews may be edited for clarity.

AB Tasty is built for marketers who want more than just simple A/B tests. It offers advanced experimentation, AI-driven insights, and dynamic personalization that help marketing teams refine user experiences without relying heavily on developers.

With its intuitive drag-and-drop editor and extensive segmentation capabilities, teams can launch tests quickly and see real-time results. Plus, built-in heatmaps and session recordings provide deeper behavioral insights, making it easier to optimize conversion campaigns.

"The flexibility of the platform and the superb support are excellent. It took me a little while to understand how the WYSIWYG worked, but that's also because of the nature of our website and how we've set it up, but when you know, you know. Setting up tests is super easy! Implementation/integration for us was a little tricky, and we ran into some troubles with the reporting, but we were helped along the way in all possible ways until it was solved. We've set AB Tasty up to test on various (landing) pages as frequently as possible, so we're using it weekly."

- AB Tasty Review, Linda A.

"If not a developer expert in javascript, the tool is not user-friendly enough to be autonomous in creating many AB tests. Especially the ones that require adding a new bloc based on a mockup or updating something that needs to be reflected on all pages, not just one (for example, changing the CTA color on all PLPs without manually doing it for all CTAs on 1 PLP)."

- AB Tasty Review, Julie P.

VWO is a favorite among product managers who need data-backed decisions. It goes beyond basic A/B testing with robust split testing, multivariate testing, and funnel analysis.

The platform excels in user behavior analysis with features like session recordings, form analytics, and heatmaps. These help product managers validate new features before full-scale deployment. It also integrates well with major analytics platforms, making data-driven experimentation easier.

"VWO provides a user-friendly approach to A/B testing and site insights. Bipin has been a helpful resource as our Customer Success Manager, always available to answer questions and solve our testing needs. He also collaborated with us when we needed test setup and troubleshooting assistance. The editor is easy to use, and we can implement tests fairly quickly without the need for advanced developer knowledge."

- VWO Review, Brielle C.

"Sometimes the platform can be buggy. Heatmaps and clickmaps often don't work or are a challenge to share internally - likely due to the security provisions we have on our browsers/devices. Personalization is not as easy to do at scale compared to other platforms; setting up custom variables requires some effort. Simple edits are easy in the WYSIWYG editor, but more advanced changes require CSS/HTML coding and can be difficult to implement without bugs in how they are displayed."

- VWO Review, Matthew G.

Bloomreach stands out for e-commerce businesses by combining A/B testing with AI-driven personalization. It helps online retailers optimize product listings, checkout flows, and promotional strategies based on real-time user data. Its ability to run tests across multiple channels, including web, mobile, and email, makes it an all-in-one solution for conversion rate optimization.

Predictive merchandising and AI-powered recommendations also give it an edge for e-commerce-specific use cases.

"Bloomreach offers a robust suite of tools that provide flexibility and scalability, making it a valuable platform for managing digital experiences. I particularly appreciate how it enables smooth integration across various systems and the degree of control it gives over user journeys. The platform's ability to cater to diverse business needs is a standout feature."

- Bloomreach Review, Daniel W.

"Just some things that don't work as expected or have the potential to be better. An example is building an email with a content block; the content block is not previewable within the builder, making the testing process far more complicated than necessary. Another example is that although the platform's whole thing automatically handles new data, etc., I accidentally set a list attribute to a string by mistake, breaking the whole attribute for all customers. There should have been a warning or error message, etc."

- Bloomreach Review, Tom H.

Netcore Customer Engagement and Experience Platform is the go-to choice for startups seeking an affordable yet feature-packed A/B testing solution. It offers powerful behavioral analytics, no-code experimentation, and automation to help small businesses optimize their growth strategies without needing a full-fledged data team.

With strong email, push notification, and in-app message testing, Netcore enables startups to refine customer engagement across multiple touchpoints.

"Netcore’s Customer Engagement and Experience Platform stands out for its strong feature set, ease of use, and a highly responsive team. Their sales and product teams build great personal connections and provide honest, to-the-point responses, making the overall experience smooth. The platform itself is robust, offering effective engagement solutions that compete with the best in the industry."

- Netcore Review, Ashwini D.

"The thing I dislike about Netcore is that it's a bit difficult to understand at first. There is no user guide video. If we want to create or use any analytics, we need to connect with PvP. If there is a tutorial video, it will be better for new users. And one more: it's regarding the WhatsApp chatbot. In the chat section, we can't review the old text. If it's like the WhatsApp theme, it's really easy to use."

- Netcore Review, Ganavi K.

LaunchDarkly isn’t just an A/B testing tool. It’s a feature flag management powerhouse. Designed for SaaS companies, it enables teams to roll out new features gradually, run feature experiments, and instantly revert changes without deploying new code. It’s particularly valuable for DevOps and engineering teams, offering robust integration with CI/CD pipelines.

With real-time feature control and user targeting, LaunchDarkly ensures that SaaS businesses can optimize product features with minimal risk.

"One of the biggest benefits for me has been the ability to easily toggle features on or off in real-time during periods of service degradation. When performance issues arise, I can disable non-essential features instantly, keeping critical parts of the service running smoothly while we troubleshoot. This has saved us from several potential outages. The integration process was overall smooth as well.

The ability to roll out features incrementally has allowed us to test new functionality in production without fully exposing it to all users. If something doesn’t go as planned, rolling back is as simple as flipping a switch — no redeployment needed. This has reduced the stress around releases, knowing that we always have a safety net."

- LaunchDarkly Review, Sahil C.

"One downside of LaunchDarkly is its complexity at scale, particularly when managing a large number of feature flags across multiple environments. It can be challenging to maintain flag hygiene, and the cost can increase significantly as the usage grows. Additionally, the UI might feel cluttered when dealing with numerous flags, making it difficult to find specific ones quickly."

- LaunchDarkly Review, Togi Kiran K.

Still in doubt? Find more answers below.

Enterprises benefit from Adobe Target, Kameleoon, and Optimizely Enterprise, which offer AI-driven personalization, predictive testing, and automated content delivery. These platforms integrate with Adobe Experience Cloud, Google Cloud AI, and BigQuery to provide deep insights into audience behavior and engagement.

A/B testing compares two or more distinct variations of a single element (e.g., two headlines). Multivariate testing, on the other hand, tests multiple elements simultaneously (e.g., headline, CTA button, and image all at once) to see which combination works best. A/B testing is simpler and requires less traffic, while multivariate testing is more complex but provides deeper insights.

Marketing teams often use Optimizely, VWO, and AB Tasty for A/B testing landing pages, ad creatives, email campaigns, and content personalization. These tools integrate with Adobe Analytics, Google Tag Manager, and HubSpot, providing data-driven insights to improve marketing performance.

A/B testing for mobile apps, using tools like Firebase A/B Testing, Apptimize, and Optimizely, helps optimize onboarding flows, push notification strategies, and in-app features. These tools connect with Adobe Mobile Analytics, Amplitude, and Google Firebase, allowing app developers to track retention, churn rates, and engagement metrics.

Digital agencies prefer Optimizely, Unbounce, and AB Tasty because they offer client-friendly dashboards, drag-and-drop visual editors, and seamless multi-account management. These tools integrate smoothly with Adobe, Google, and Omniconvert, enabling agencies to deliver customized testing strategies while tracking performance across multiple brands.

Enterprises rely on Adobe Target, Optimizely Enterprise, and Kameleoon for cross-platform A/B testing, AI-driven personalization, and advanced statistical modeling. These solutions integrate with Adobe, Google, and Amplitude, enabling businesses to run large-scale experiments simultaneously across websites, mobile apps, and marketing campaigns.

Most A/B testing platforms integrate with analytics tools like Google Analytics, Adobe Analytics, Amplitude, and Mixpanel. These integrations help track experiment performance, user segments, and statistical significance directly within analytics dashboards, making analyzing and acting on test results easier.

A/B testing is a powerful concept that can help optimize your marketing efforts and drive key business metrics. Although there are quite a few factors that one must consider while running different tests, the core idea of A/B testing is to improve user experience by optimizing key conversion metrics.

Want to build the best possible version of your website? Learn how to evaluate consumer responses to multiple variables and track engagement using multivariate testing.

Ashley Bhalerao is a Content Marketer at VWO. He loves creating engaging content around topics that can help organizations grow their business and drive revenue. Besides writing, he also loves dancing, watching movies, and exploring different types of delicious street food.

