October 4, 2023

by Jorge Peñalva / October 4, 2023

If you’re part of a leadership team, you’re probably tasked with one of the most important decisions of the last decade: how to implement AI in your business. What are the biggest challenges that AI can solve?

Once you identify those challenges, what’s your AI strategy? How do you choose strategic partners or vendors when everything changes so fast?

I'm the CEO of Lang.ai, and in partnership with GTM Fund, we've built the first framework to implement AI for GTM teams. Lang.ai is an AI Platform for Customer Experience. GTM Fund's community is made up of over 300 C-suite and VP-level GTM operators.

The number one question on just about everyone's mind right now is: how can I implement AI to grow more efficiently?

When Max Altschuler, GP at GTM Fund, answered that question, he shared, “AI is not a silver bullet. No technology is a silver bullet. If your GTM motion is not working today, it’s certainly not going to work with AI. You’ll likely just go faster in the wrong direction. It happens with any new major breakthrough in technology like mobile, blockchain, and now AI. People have a tendency to get distracted by the technology itself and lose track of the underlying problems that they’re really trying to fix.

"A few years ago, each of your teams would have run out to buy the latest AI point solution because of FOMO. Now, I would urge teams to go back to the basics. Get your leadership team together, re-evaluate each juncture in your GTM process from customer discovery to upsell, and re-imagine a better way to engage your customers using these new advancements in AI.

"Map that new world out, use a framework like the one below to evaluate which AI option is right for your organization, conduct some smaller tests, iterate based on the data you get, and then roll it out across a single business unit. After that, it goes to the entire organization.

"Without a holistic strategy like this, I actually think that AI has the ability to do more harm to your business than good. There’s no doubt in my mind that AI will help us rewrite the current GTM playbook, but it’s early days. This is one of those situations where companies need to slow down in order to speed up."

Matthew Miller, principal analyst at G2 focused on AI, agrees. His research on almost 200 categories with generative AI features bears this out. Despite the bells and whistles of newfangled technology, the needle has hardly moved when it comes to how well the software fulfills the requirements of software users. Determining needs should come first, and only then should you try to figure out how to use the best software to achieve the best results.

If you’re on a GTM team, such as sales, marketing, product, customer experience, or customer success, you can use this framework to make the right decisions when it comes to implementing AI.

Currently, three primary options to implement AI in a company are available. Let’s detail each.

Large cloud providers, like AWS, Google, or Microsoft, all provide services to implement generative AI in a secure way in the cloud. Microsoft offers only OpenAI's models. Google provides its PaLM 2 model, and Amazon has multiple options, including Amazon Bedrock.

On the other hand, large language model (LLM) providers are the new players on the scene for this new AI wave. They help you run generative AI in an enterprise environment with their own models (Anthropic and OpenAI) or open-source models (Hugging Face and H2O.ai). Depending on your choice, you either host an open-source model yourself or use a model hosted by the provider.

Differentiator of cloud/LLM providers: Engineers can make tweaks and have varying degrees of control over the underlying models being used.

Vertical leaders are software platforms that have grown in a certain vertical, or persona, such as sales, customer support, CRM, or finance. They typically specialize in a specific business function or area. Therefore, they have the most comprehensive dataset with regard to that function, built over years of expertise. Some of them have already launched AI models trained on all the historical data from their customers.

Some examples of vertical leaders with new AI tools:

Some other players, like Copy.ai and Jasper.ai, have become vertical leaders with a new product in the market because they were able to nail the timing in the new AI wave.

Differentiator: Vertical leaders such as Outreach, Gong, Zendesk, and Copy.ai have access to the largest datasets in a specific vertical or business function and can fine-tune the best model without the need for engineers on your side.

Enterprise AI startups are companies focused on safely implementing AI for enterprise-specific use cases, with an emphasis on privacy and security. Enterprises want to know that their data isn't being used to train models; these startups cater to that need.

Some examples of Enterprise AI startups include:

Differentiator: Quick delivery of bespoke models tailored to the customer’s data, ensuring data privacy and preventing customer data from being used to train models, all without the need for engineering resources on the customer side.

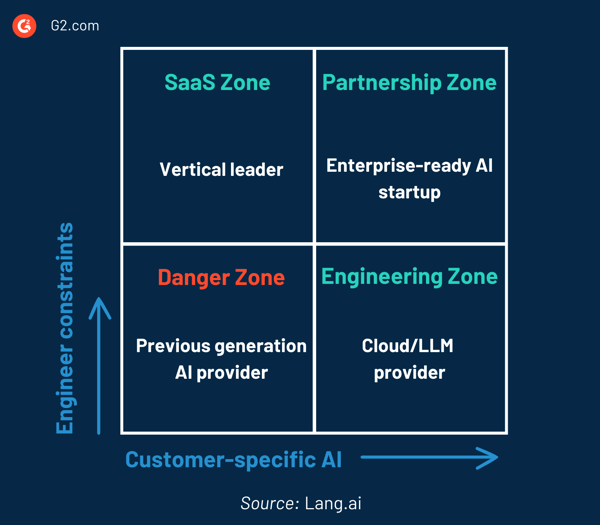

With all these choices, you can see that setting up AI is a difficult decision for GTM teams. We’ve created this framework to make it easier to choose which type of vendor works for your company and your specific AI use case.

Below we’ll cover how to use this framework. But before we dive into the details, it’s important to understand what the different axes mean.

Engineer constraints: The constraints that exist in your organization in terms of the engineers that work on this issue. High constraints mean you can’t dedicate engineers to this problem.

Customer-specific AI: The need to customize the AI to your own data and the use case you’re trying to solve. High customer-specific AI means you need a high level of customization.

The engineering zone is best for problems that are a core operation for the company. Companies are normally willing to dedicate internal engineering resources. They will have a need for customization and privacy, as it’s how they differentiate from their competitors.

In this case, you use LLMs to build your own AI models. You keep data-privacy risk to a minimum by hosting the model yourself, and you get fast maintenance by dedicating an engineering team to it.

Examples of uses for the engineering zone:

The SaaS zone is best for problems that aren’t part of the core operation of the company and for which you can’t invest engineering resources. At the same time, the data that’s part of these problems isn’t critical or high-risk.

To solve these types of issues, you can work with a SaaS provider that has a “megamodel” trained on all customer data, including your own. The benefit here is that the provider has data about other companies, and you don’t have to invest engineering resources – you just contract the software with the AI features monthly or yearly.

Examples of use cases for the SaaS zone:

The partnership zone is the best for processes that may not be the main focus of the company, so you don’t have engineering availability. These procedures may have specific company needs (because of privacy, internal processes, or complexities) that require customization and not just generic models. By partnering with an enterprise-ready startup, you get the power of fast execution while keeping data private and saving resources.

It also applies when:

Examples of use cases for the partnership zone:

At the same time, they can’t dedicate engineers to their brand or marketing teams. Partnering with a private, customer-specific startup with AI for marketing would be the best move for these brands.

The danger zone is where companies can find themselves if they don’t adapt to the exponential change of AI that’s happened in the past year. Being in the danger zone means you’re investing time and money in engineers to create a model that you don’t own. This model isn’t customer-specific, so your data may be used across multiple clients.

This used to be common as machine learning (ML) models required a lot of training and fine-tuning to solve a problem, and providers needed huge amounts of data to be successful. For instance, it was common to pay for AI providers that had an in-house team of ML engineers training the algorithms, but the data and the model belonged to the service-provider, not to the company that was buying the AI software.

With LLMs, it doesn’t make sense to be in the danger zone from an AI strategy perspective. If you are, change providers or push them to deliver AI models in a way that doesn’t require you to pay for engineering resources.

You should be out of this zone for any AI process in your company.
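Read together, the two axes and four zones work like a small lookup table. The Python sketch below is only an illustration of that reading, not part of the framework itself: the names `Zone` and `pick_zone` are hypothetical, and reducing each axis to a simple high/low flag is an assumption made to keep the example short.

```python
from enum import Enum


class Zone(Enum):
    ENGINEERING = "engineering zone"
    SAAS = "SaaS zone"
    PARTNERSHIP = "partnership zone"
    DANGER = "danger zone"


def pick_zone(high_engineer_constraints: bool, high_customer_specific_ai: bool) -> Zone:
    """Map the framework's two axes to a zone (hypothetical helper).

    high_engineer_constraints: True if you can't dedicate engineers to the problem.
    high_customer_specific_ai: True if the AI must be customized to your own data.
    """
    if high_engineer_constraints:
        # No engineers available: buy or partner instead of building.
        return Zone.PARTNERSHIP if high_customer_specific_ai else Zone.SAAS
    # Engineers available: worth it only when the model is customer-specific.
    return Zone.ENGINEERING if high_customer_specific_ai else Zone.DANGER


if __name__ == "__main__":
    # Example: a non-core process with private data and no spare engineers.
    print(pick_zone(high_engineer_constraints=True, high_customer_specific_ai=True).value)
```

In practice both axes are a matter of degree rather than a yes/no answer, so treat the result as a starting point for the conversation with your leadership team.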

AI and the ecosystem of problems and companies surrounding it are evolving exponentially, so while we tried to summarize everything in a simple framework, there are other variables that are also relevant in order to make decisions, such as:

Even though generative AI commoditizes a lot of aspects of AI, building a solution is different from implementing a technology. A common question asked of AI providers these days is: “Why is this different from what I can do with ChatGPT/OpenAI?” The difference doesn’t necessarily come from the technology. The true advantage comes when your AI vendor is thinking 24/7 about the problem you’re trying to solve and therefore has the best solution or product.

Customers often push to implement AI right away, but it’s worth taking a step back to understand the problem you’re trying to solve and the best approach to it before investing thousands or millions of dollars.


Jorge Peñalva is the CEO of Lang.ai, an AI Platform for Customer Experience teams to automate workflows and drive product decisions. Lang.ai is a Series A company with customers such as Ramp, Tinder, and Hims. As a two-time founder in the AI space, Jorge's expertise lies within driving value from unstructured data in Enterprise companies and he's seen the evolution of AI technology in the last 10 years. In his spare time, Jorge can be seen running in the trails of Marin County, playing basketball, or having a croissant in his favorite bakery.
