July 3, 2024

by Rebecca Reynoso / July 3, 2024

The history of artificial intelligence may feel like a dense and impenetrable subject for people who aren’t well-versed in computer science and its subsets.

Despite how mystifying and untouchable artificial intelligence may seem, it’s a lot easier to understand than you might think once it’s broken down.

In layman’s terms, AI is the idea that machines can interpret, mine, and learn from external data in a way that functionally imitates cognitive practices normally attributed to humans. Artificial intelligence is based on the notion that human thought processes can be both replicated and mechanized.

There have been countless innovations in the domain of artificial intelligence, and hardware and software tools are still being improved to incorporate more human qualities and simulation. Most of the innovations can be practically observed with the right data and artificial intelligence software, which perceives real-time data and bolsters business decision-making.

The idea behind artificial intelligence is often traced back to eminent mathematicians and Greek philosophers who were fascinated by the prospect of a mechanical future. Initially, any device that ran on electricity, fuel, or other energy resources was said to be "automated," as it didn't need manual support. Later thinkers pictured the same turn of events for "software automation," also known as "artificial intelligence" or the "fifth generation of computers."

The history of artificial intelligence dates back to antiquity, with philosophers mulling over the idea of artificial beings, mechanical men, and other automatons. A much later milestone came from Lady Ada Lovelace, often regarded as the first programmer, who wrote what is widely considered the first computer program in the 1840s for Charles Babbage's proposed Analytical Engine. Together, Babbage and Lovelace marked the onset of digital automation.

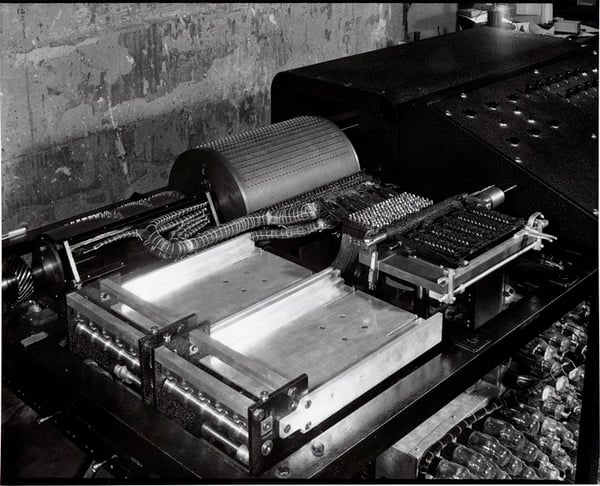

Thanks to early thinkers, artificial intelligence became increasingly tangible throughout the 1700s and beyond. Philosophers contemplated how human thinking could be artificially mechanized and manipulated by intelligent non-human machines. The thought processes that fueled interest in AI originated when classical philosophers, mathematicians, and logicians considered the mechanical manipulation of symbols, eventually leading to the invention of electronic digital computers such as the Atanasoff-Berry Computer (ABC), completed in the early 1940s. This specific invention inspired scientists to move forward with the idea of creating an “electronic brain,” or an artificially intelligent being.

Nearly a decade passed before icons in AI shaped the understanding of the field we have today. In 1950, Alan Turing, a mathematician, among other things, proposed a test of a machine’s ability to imitate human conversation to a degree that was indistinguishable from a human’s. Later that decade, the field of AI research was founded during a summer conference at Dartmouth College in 1956, for which John McCarthy, a computer and cognitive scientist, coined the term “artificial intelligence.”

From the 1950s forward, many scientists, programmers, logicians, and theorists helped solidify the modern understanding of artificial intelligence. With each new decade came innovations and findings that changed people’s fundamental knowledge of the field and how historical advancements have catapulted AI from being an unattainable fantasy to a tangible reality for current and future generations.

It’s unsurprising that artificial intelligence grew rapidly post-1900, but what is surprising is how many people thought about AI hundreds of years before there was even a word to describe what they were thinking about.

Let's see how artificial intelligence has unfolded over the years.

Between 380 BC and the late 1600s, various mathematicians, theologians, philosophers, professors, and authors mused about mechanical techniques, calculating machines, and numeral systems, which eventually led to the concept of mechanized “human” thought in non-human beings.

Early 1700s: Depictions of all-knowing machines akin to computers were more widely discussed in popular literature. Jonathan Swift’s novel “Gulliver’s Travels” mentioned a device called the engine, which is one of the earliest references to modern-day technology, specifically a computer. This device’s intended purpose was to improve knowledge and mechanical operations to a point where even the least talented person would seem to be skilled – all with the assistance and knowledge of a non-human mind (mimicking artificial intelligence).

1872: Author Samuel Butler’s novel “Erewhon” toyed with the idea that at an indeterminate point in the future, machines would have the potential to possess consciousness.

Once the 1900s hit, innovation in artificial intelligence accelerated significantly.

1921: Karel Čapek, a Czech playwright, released his science fiction play “Rossum’s Universal Robots” (English translation). His play explored the concept of factory-made artificial people, whom he called robots – the first known reference to the word. From this point onward, people took the “robot” idea and incorporated it into their research, art, and discoveries.

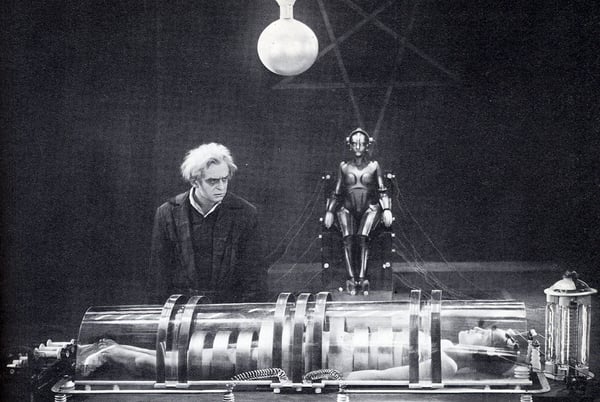

1927: The sci-fi film Metropolis, directed by Fritz Lang, featured a robotic girl who was physically indistinguishable from the human counterpart from which it took its likeness. The artificially intelligent robot-girl then attacks the town, wreaking havoc on a futuristic Berlin. This film holds significance because it is one of the earliest on-screen depictions of a robot and thus lent inspiration to other famous non-human characters, such as C-3PO in Star Wars.

1929: Japanese biologist and professor Makoto Nishimura created Gakutensoku, the first robot built in Japan. Gakutensoku translates to “learning from the laws of nature,” implying that the robot’s artificially intelligent mind could derive knowledge from people and nature. Some of its features included moving its head and hands and changing its facial expressions.

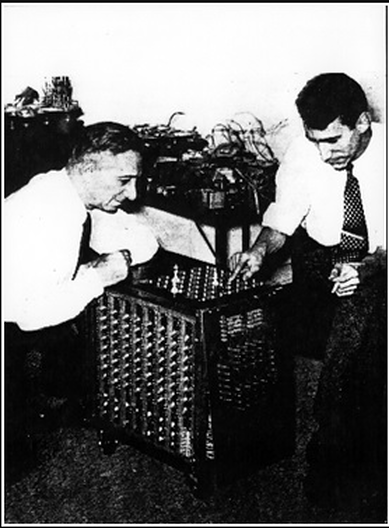

1939: John Vincent Atanasoff (physicist and inventor), alongside his graduate student assistant Clifford Berry, created the Atanasoff-Berry Computer (ABC) with a grant of $650 at Iowa State University. The ABC weighed over 700 pounds and could solve up to 29 simultaneous linear equations.

1949: Computer scientist Edmund Berkeley’s book “Giant Brains: Or Machines That Think” noted that machines had become increasingly capable of handling large amounts of information with speed and skill. He went on to compare machines to a human brain if it were made of “hardware and wire instead of flesh and nerves,” likening the machine's ability to that of the human mind and stating that “a machine, therefore, can think.”

The 1950s proved to be a time when many advances in artificial intelligence came to fruition, with an upswing in research-based findings in AI by various computer scientists, among others.

1950: Claude Shannon, “the father of information theory,” published “Programming a Computer for Playing Chess,” the first article to discuss the development of a computer program for chess.

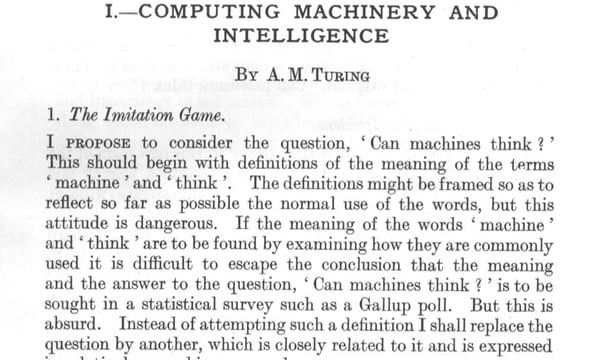

1950: Alan Turing published “Computing Machinery and Intelligence,” which proposed the idea of The Imitation Game – a question that considered if machines can think. This proposal later became The Turing Test, which measured machine (artificial) intelligence. Turing’s development tested a machine’s ability to think as a human would. The Turing Test became an important component in the philosophy of artificial intelligence, which discusses intelligence, consciousness, and ability in machines.

1952: Arthur Samuel, a computer scientist, developed a checkers-playing computer program – the first to independently learn how to play a game.

1955: John McCarthy, together with Marvin Minsky, Nathaniel Rochester, and Claude Shannon, wrote a proposal for a workshop on “artificial intelligence.” In 1956, when the workshop took place, the term officially entered use, and McCarthy is credited with coining it.

1955: Allen Newell (researcher), Herbert Simon (economist), and Cliff Shaw (programmer) co-authored Logic Theorist, the first artificial intelligence computer program.

1958: McCarthy developed Lisp, which became one of the most popular programming languages for artificial intelligence research and is still in use today.

1959: Samuel coined the term “machine learning” when speaking about programming a computer to play a game of checkers better than the human who wrote its program.

Innovation in artificial intelligence grew rapidly through the 1960s. The creation of new programming languages, robots and automatons, research studies, and films depicting artificially intelligent beings increased in popularity. This heavily highlighted the importance of AI in the second half of the 20th century.

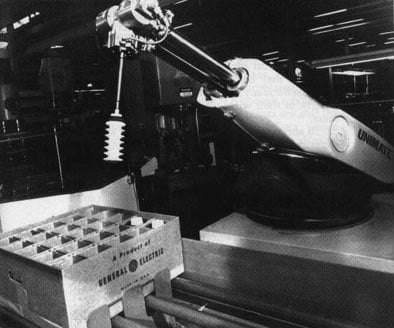

1961: Unimate, an industrial robot invented by George Devol in the 1950s, became the first industrial robot to work on a General Motors assembly line in New Jersey. Its responsibilities included transporting die castings from the assembly line and welding the parts onto cars, a task deemed dangerous for humans.

1961: James Slagle, a computer scientist and professor, developed SAINT (Symbolic Automatic INTegrator), a heuristic problem-solving program whose focus was symbolic integration in freshman calculus.

1964: Daniel Bobrow, a computer scientist, created STUDENT, an early AI program written in Lisp that solved algebra word problems. STUDENT is cited as an early milestone of AI natural language processing.

1965: Joseph Weizenbaum, a computer scientist and professor, developed ELIZA, an interactive computer program that could functionally converse in English with a person. Weizenbaum’s goal was to demonstrate how communication between an artificially intelligent mind and a human mind was “superficial,” but he discovered that many people attributed anthropomorphic characteristics to ELIZA.

1966: Charles Rosen, with the help of 11 others, developed Shakey the Robot. It was the first general-purpose mobile robot, also known as the “first electronic person.”

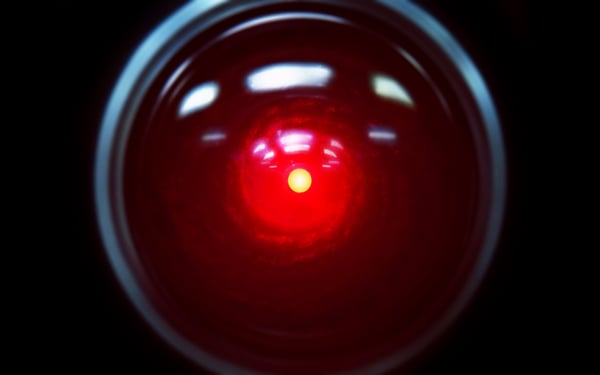

1968: The sci-fi film 2001: A Space Odyssey, directed by Stanley Kubrick, is released. It features HAL (Heuristically programmed Algorithmic computer), a sentient computer. HAL controls the spacecraft’s systems and interacts with the ship’s crew, conversing with them as if HAL were human until a malfunction changes HAL’s interactions in a negative manner.

1968: Terry Winograd, professor of computer science, created SHRDLU, an early natural language computer program.

Like the 1960s, the 1970s saw accelerated advancements, particularly in robotics and automation. However, artificial intelligence in the 1970s faced challenges, such as reduced government support for AI research.

1970: WABOT-1, the first anthropomorphic robot, was built in Japan at Waseda University. Its features included moveable limbs, ability to see, and ability to converse.

1973: James Lighthill, an applied mathematician, reported on the state of artificial intelligence research to the British Science Research Council, stating: “in no part of the field have discoveries made so far produced the major impact that was then promised,” which led to significantly reduced support for AI research from the British government.

1977: Director George Lucas’ film Star Wars is released. The film features C-3PO, a humanoid robot that is designed as a protocol droid and is “fluent in over six million forms of communication.” As a companion to C-3PO, the film also features R2-D2 – a small astromech droid who is incapable of human speech (the inverse of C-3PO); instead, R2-D2 communicates with electronic beeps. Its functions include small repairs and co-piloting starfighters.

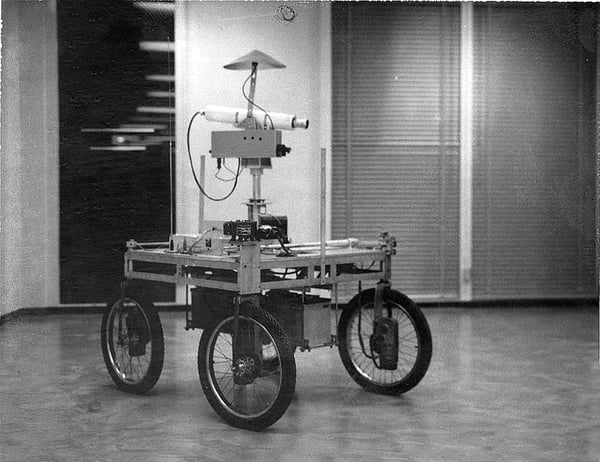

1979: The Stanford Cart, a remote-controlled, TV-equipped mobile robot, was created by then-mechanical engineering grad student James L. Adams in 1961. In 1979, Hans Moravec, a then-PhD student, added a “slider,” or mechanical swivel, that moved the TV camera from side to side. The cart successfully crossed a chair-filled room without human interference in approximately five hours, making it one of the earliest examples of an autonomous vehicle.

The rapid growth of artificial intelligence continued through the 1980s. Despite advancements and excitement about AI, caution surrounded an inevitable “AI Winter,” a period of reduced funding and interest in AI.

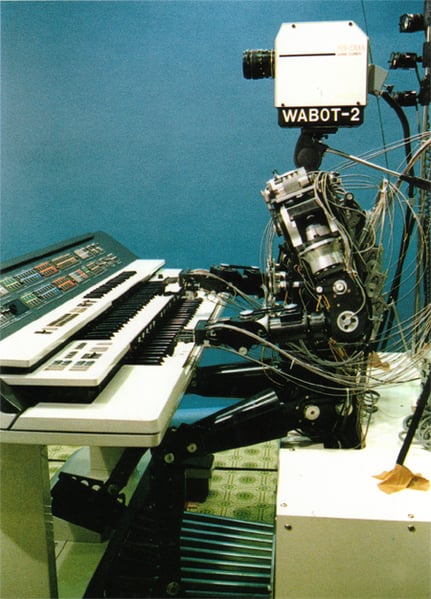

1980: WABOT-2 was built at Waseda University. This iteration of the WABOT allowed the humanoid to communicate with people as well as read musical scores and play music on an electronic organ.

1981: The Japanese Ministry of International Trade and Industry allocated $850 million to the Fifth Generation Computer project, whose goal was to develop computers that could converse, translate languages, interpret pictures, and express human-like reasoning.

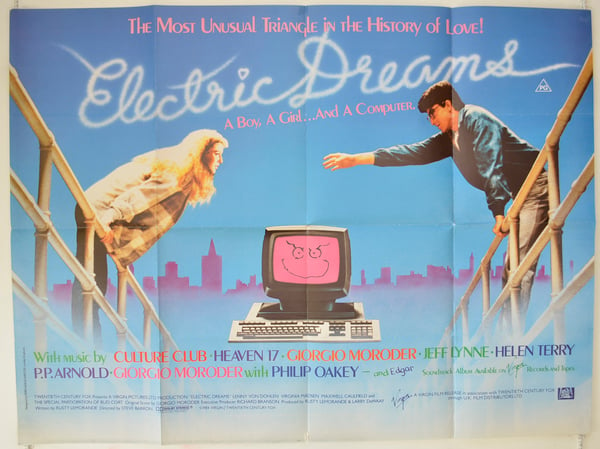

1984: The film Electric Dreams, directed by Steve Barron, is released. The plot revolves around a love triangle between a man, a woman, and a sentient personal computer called “Edgar.”

1984: At a meeting of the Association for the Advancement of Artificial Intelligence (AAAI), Roger Schank (AI theorist) and Marvin Minsky (cognitive scientist) warned of a coming AI winter, a period in which interest in and funding for artificial intelligence research would decrease. Their warning came true within three years’ time.

1986: Ernst Dickmanns directed the construction of a driverless Mercedes-Benz van equipped with cameras and sensors. The van could drive up to 55 mph on a road with no other obstacles or human drivers.

1988: Computer scientist and philosopher Judea Pearl published “Probabilistic Reasoning in Intelligent Systems.” Pearl is also credited with inventing Bayesian networks, “probabilistic graphical models” that represent sets of variables and their dependencies via a directed acyclic graph (DAG).
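
To make the idea of a Bayesian network a little more concrete, here is a minimal Python sketch. It is not taken from Pearl’s book; the three variables (rain, sprinkler, wet grass) and every probability value are made-up assumptions chosen only for illustration. The graph has two parent nodes, `rain` and `sprinkler`, each pointing to the child node `wet`, and the code answers one question by brute-force enumeration: how likely is rain, given that the grass is wet?

```python
from itertools import product

# Hypothetical probabilities, invented purely for illustration.
P_RAIN = {True: 0.2, False: 0.8}          # P(rain)
P_SPRINKLER = {True: 0.1, False: 0.9}     # P(sprinkler)
P_WET_GIVEN = {                           # P(wet = True | rain, sprinkler)
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}

def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """Joint probability, factored along the DAG: rain -> wet <- sprinkler."""
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return P_RAIN[rain] * P_SPRINKLER[sprinkler] * (p_wet if wet else 1.0 - p_wet)

def prob_rain_given_wet() -> float:
    """P(rain | wet) by summing the joint over the unobserved variables."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence

posterior = prob_rain_given_wet()
print(round(posterior, 2))  # about 0.74 with the numbers assumed above
```

Seeing wet grass raises the estimated chance of rain from the assumed 20% to roughly 74%, which is the kind of reasoning under uncertainty these networks were designed for. Real systems use more efficient inference than full enumeration.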

1988: Rollo Carpenter, programmer and inventor of two chatbots, Jabberwacky and Cleverbot (released in the 1990s), developed Jabberwacky to "simulate natural human chat in an interesting, entertaining and humorous manner." This is an example of AI via a chatbot communicating with people.

The end of the millennium was on the horizon, but this anticipation only helped artificial intelligence in its continued stages of growth.

1995: Computer scientist Richard Wallace developed the chatbot A.L.I.C.E (Artificial Linguistic Internet Computer Entity), inspired by Weizenbaum's ELIZA. What differentiated A.L.I.C.E. from ELIZA was the addition of natural language sample data collection.

1997: Computer scientists Sepp Hochreiter and Jürgen Schmidhuber developed Long Short-Term Memory (LSTM), a type of recurrent neural network (RNN) architecture used for handwriting and speech recognition.

1997: Deep Blue, a chess-playing computer developed by IBM, became the first system to win a chess game and match against a reigning world champion.

1998: Dave Hampton and Caleb Chung invented Furby, the first “pet” toy robot for children.

1999: In line with Furby, Sony introduced AIBO (Artificial Intelligence RoBOt), a $2,000 robotic pet dog crafted to “learn” by interacting with its environment, owners, and other AIBOs. Its features included the ability to understand and respond to 100+ voice commands and communicate with its human owner.

The new millennium was underway, and after the fears of Y2K died down, AI continued trending upward. As expected, more artificially intelligent beings were created, as well as creative media (film, specifically) about the concept of artificial intelligence and where it might be headed.

2000: The Y2K problem, also known as the year 2000 problem, was a class of computer bugs related to the formatting and storage of electronic calendar data beginning on 01/01/2000. Because much of the software in use had been written in the 1900s, many systems stored only the final two digits of the year and assumed the first two were always “19.” When the calendar rolled over to 2000, a stored “00” could be misread as 1900 – a challenge for technology and those who used it.
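
As a rough illustration of the two-digit year issue, here is a small Python sketch. The function names and the pivot value of 50 are assumptions made up for this example; they do not come from any particular legacy system. The first function does date arithmetic on two-digit years, and the second shows “windowing,” one common style of fix, which guesses the century from the two digits.

```python
def years_elapsed_two_digit(start_yy: int, end_yy: int) -> int:
    """Naive interval arithmetic on two-digit years (hypothetical legacy logic)."""
    return end_yy - start_yy

def expand_year(yy: int, pivot: int = 50) -> int:
    """Windowing: two-digit years below the pivot are read as 20xx, the rest as 19xx."""
    return 2000 + yy if yy < pivot else 1900 + yy

# An account opened in 1985 ("85"), checked in 1999 ("99") and again in 2000 ("00").
print(years_elapsed_two_digit(85, 99))    # 14
print(years_elapsed_two_digit(85, 0))     # -85, the Y2K-style error
print(expand_year(0) - expand_year(85))   # 15 once the years are expanded
```

Windowing only postpones the ambiguity, which is one reason many systems were instead rewritten to store all four digits.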

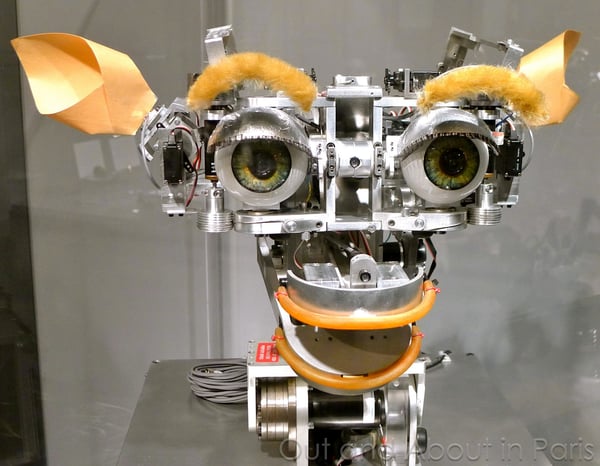

2000: Professor Cynthia Breazeal developed Kismet, a robot that could recognize and simulate emotions with its face. It was structured like a human face with eyes, lips, eyelids, and eyebrows.

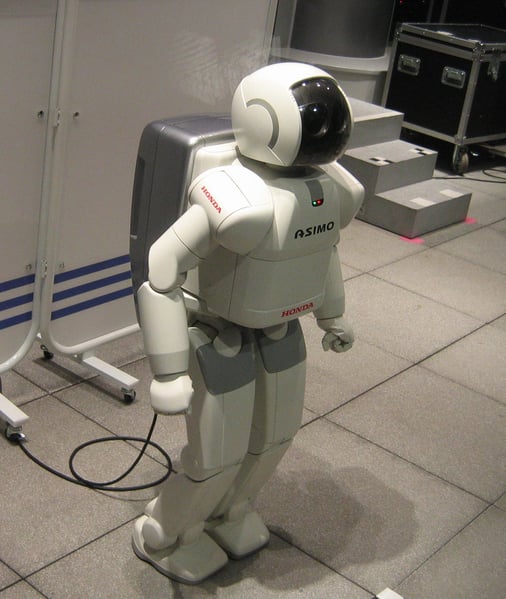

2000: Honda released ASIMO, an artificially intelligent humanoid robot.

2001: Sci-fi film A.I. Artificial Intelligence, directed by Steven Spielberg, is released. The movie is set in a futuristic, dystopian society and follows David, an advanced humanoid child that is programmed with anthropomorphic feelings, including the ability to love.

2002: iRobot released Roomba, an autonomous robot vacuum that cleans while avoiding obstacles.

2004: NASA's robotic exploration rovers Spirit and Opportunity navigated Mars’ surface without human intervention.

2004: Sci-fi film I, Robot, directed by Alex Proyas, is released. The film is set in the year 2035, when humanoid robots serve humankind while one individual is vehemently anti-robot, given the outcome of a personal tragedy (determined by a robot).

2006: Oren Etzioni (computer science professor), Michele Banko, and Michael Cafarella (computer scientists), coined the term “machine reading,” defining it as unsupervised autonomous understanding of text.

2007: Computer science professor Fei-Fei Li and colleagues assembled ImageNet, a database of annotated images whose purpose is to aid in object recognition software research.

2009: Google secretly began developing a driverless car. By 2012, it had passed Nevada’s self-driving test.

The 2010s were immensely important for AI innovation. From 2010 onward, artificial intelligence has become embedded in our day-to-day existence. We use smartphones that have voice assistants and computers that have “intelligence” functions most of us take for granted. AI is no longer a pipe dream and hasn’t been for some time.

2010: ImageNet launched the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), their annual AI object recognition competition.

2010: Microsoft launched Kinect for Xbox 360, the first gaming device that tracked human body movement using a 3D camera and infrared detection.

2011: Watson, a natural language question-answering computer created by IBM, defeated two former Jeopardy! champions, Ken Jennings and Brad Rutter, in a televised game.

2011: Apple released Siri, a virtual assistant on the Apple iOS operating system. Siri uses a natural-language user interface to infer, observe, answer, and recommend things to its human user. It adapts to voice recognition and projects an “individualized experience” per user.

2012: Jeff Dean and Andrew Ng (Google researchers) trained a large neural network running on 16,000 processors to recognize images of cats, without giving it any labels or background information, by showing it 10 million unlabeled images from YouTube videos.

2013: A research team from Carnegie Mellon University released Never Ending Image Learner (NEIL), a semantic machine learning system that could compare and analyze image relationships.

2014: Microsoft released Cortana, their version of a virtual assistant similar to Siri on iOS.

2014: Amazon created Amazon Alexa, a home assistant that developed into smart speakers that function as personal assistants.

2015: Elon Musk, Stephen Hawking, and Steve Wozniak, among 3,000 others, signed an open letter calling for a ban on the development and use of autonomous weapons (for purposes of war).

2015-2017: Google DeepMind’s AlphaGo, a computer program that plays the board game Go, defeated various (human) champions.

2016: A humanoid robot named Sophia is created by Hanson Robotics. She is known as the first “robot citizen.” What distinguishes Sophia from previous humanoids is her likeness to an actual human being, with her ability to see (image recognition), make facial expressions, and communicate through AI.

2016: Google released Google Home, a smart speaker that uses AI to act as a “personal assistant” to help users remember tasks, create appointments, and search for information by voice.

2017: The Facebook Artificial Intelligence Research lab trained two “dialog agents” (chatbots) to communicate with each other in order to learn how to negotiate. However, as the chatbots conversed, they diverged from human language (programmed in English) and invented their own language to communicate with one another – exhibiting artificial intelligence to a great degree.

2018: A language processing AI from Alibaba (Chinese tech group) outscored humans on a Stanford reading and comprehension test. The Alibaba model scored “82.44 against 82.30 on a set of 100,000 questions” – a narrow defeat for the humans, but a defeat nonetheless.

2018: Google developed BERT (Bidirectional Encoder Representations from Transformers), a bidirectional language model that can be used on a variety of natural language tasks using transfer learning.

2018: Samsung introduced Bixby, a virtual assistant. Bixby’s functions include Voice, where the user can speak to Bixby and ask for answers, recommendations, and suggestions; Vision, where Bixby’s “seeing” ability is built into the camera app and can see what the user sees (i.e., object identification, search, purchase, translation, landmark recognition); and Home, where Bixby uses app-based information to help and interact with the user (e.g., weather and fitness applications).

Artificial intelligence advancements are occurring at an unprecedented rate. That being said, we can expect that the trends from the past decade will continue swinging upward in the coming years.

To keep up with the world of tech, we have to keep pace with innovations in artificial intelligence. From humanoid robots like Sophia to home speaker assistants like Alexa, AI is advancing at an accelerated rate. Someday, humans will have artificially intelligent companions beyond toys like AIBO or Furby; someday, AI and humankind might coexist in a fashion where humans and humanoids are indistinguishable from one another. That being said, AI is unlikely to replace humans entirely for at least another hundred years.

And someday?

Someday might be sooner than we think.

We already have a foot in the door. Learn all about artificial intelligence and implement some of the new-age strategies to achieve your goals.

This article was originally published in 2021. It has been updated with new information.

Rebecca Reynoso is the former Sr. Editor and Guest Post Program Manager at G2. She holds two degrees in English, a BA from the University of Illinois-Chicago and an MA from DePaul University. Prior to working in tech, Rebecca taught English composition at a few colleges and universities in Chicago. Outside of G2, Rebecca freelance edits sales blogs and writes tech content. She has been editing professionally since 2013 and is a member of the American Copy Editors Society (ACES).
