January 16, 2024

by Elena Prokopets / January 16, 2024

The healthcare industry rests on one important pillar: data.

Without the collective knowledge synthesized and shared by generations of healthcare professionals, we would never have become so efficient at treating diseases.

Healthcare professionals today have no shortage of data per se as hospitals have become increasingly digitalized. However, in its raw state, medical data has little to no value, especially when it cannot be effectively accessed by analytics tools.

This article will introduce you to the process of data integration in healthcare and provide the best practices for transforming extensive data sets into practical insights for decision-making.

Data integration is the process of combining various sources of information and making them accessible to more business applications.

By combining data from multiple data streams — electronic healthcare records (EHR) software, remote patient monitoring systems, and wearable medical devices — healthcare companies can obtain a 360-degree view of their operations and make more informed decisions.

A recent survey by Google Cloud found that better data interoperability empowers healthcare service providers to deliver more personalized care, explore new opportunities for preventive care, and achieve operational savings.

Source: Google Cloud

Healthcare data integration is becoming even more important with the adoption of big data analytics. By integrating previously disparate datasets, healthcare practitioners can:

In other words, medical data integration opens new opportunities to deliver better patient outcomes and improve overall healthcare delivery.

Integrating healthcare data may seem straightforward at first. However, in reality, it is far from simple. 68% of healthcare professionals say technical limitations pose a challenge to obtaining data for decision-making.

Indeed, the healthcare industry has a complex tech stack, with legacy mainframe systems often operating alongside cloud-native healthcare applications. High data volumes, a great degree of data variety, and poor system interoperability hinder hospitals' ability to profit from big data analytics.

Below are the main reasons why healthcare companies struggle to operationalize available big data.

Digital transformation in the healthcare industry is underway, but most institutions are slightly behind the curve.

Medical providers still use medical equipment running on legacy operating systems. A large fraction also operates a backlog of legacy software for front- and back-end processes.

Source: kaspersky.com

Outdated software systems often lack native integrations with more modern solutions (e.g., open APIs) or process data in legacy formats, which makes it harder to connect them to newly acquired technologies.

Over the past decade, big data volumes in healthcare grew by 568%.

Real-time insights are readily available to healthcare professionals through EHR/EMR systems, connected medical equipment, remote patient monitoring solutions, and digital therapeutics applications.

The challenge, however, is that about 80% of all generated medical data is unstructured (e.g., medical images, audio notes, and PDF reports). Unstructured data cannot reside in the traditional row-column databases usually used for data storage. Likewise, it cannot be queried with self-service analytical tools.

To become useful, unstructured data must be transformed into an appropriate format, labeled, anonymized (if necessary), and securely uploaded to a data lake, where it can be queried with different data analytics engines.

To ensure compliance, organizations must also maintain clear data lineage and demonstrate system auditability, which is challenging without a proper data management strategy.

The Health Insurance Portability and Accountability Act (HIPAA) sets forth clear requirements for patient data protection.

Healthcare providers and their business associates, including software vendors, must implement organizational and technological measures to prevent the disclosure of electronic protected health information (ePHI).

This regulatory requirement makes implementing healthcare data integrations more complex. Sensitive patient data must be excluded from most analytics use cases.

Also, healthcare institutions must always consider which data assets can be exchanged internally and shared with authorized third parties (e.g., clinical research partners). Poorly architected data pipelines can lead to accidental data disclosures (and substantial regulatory penalties) or, in the worst-case scenario, public data breaches.
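One way to keep direct identifiers out of analytics pipelines is to strip them and replace the patient ID with a keyed pseudonym before the data leaves the source system. The sketch below is illustrative only: the field names, the `DIRECT_IDENTIFIERS` set, and the `deidentify` helper are assumptions made for this example, and this step alone does not amount to HIPAA-compliant de-identification.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as direct identifiers in this example.
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "email", "address"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Return a stable keyed hash so records can be joined without the real ID."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and swap the patient ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize(str(record["patient_id"]), secret_key)
    return cleaned


record = {
    "patient_id": "12345",
    "name": "Jane Doe",
    "phone": "555-0100",
    "diagnosis_code": "E11.9",
    "admitted": "2024-01-05",
}
# Assumption: in practice the key would come from a secrets manager, not source code.
safe_record = deidentify(record, secret_key=b"example-key-store-in-a-vault")
```

Because the pseudonym is keyed and deterministic, the same patient maps to the same value across datasets, which keeps joins possible while the original ID stays in the source system.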

Hospitals have increased spending on technology since the pandemic began, adopting telehealth platforms, remote monitoring solutions, and patient portals.

Healthcare executives plan to further invest in third-party technologies, including new automation tools and virtual healthcare solutions, as they seek to improve back-end operations and data analytics capabilities in 2024.

Source: healthleadersmedia.com

That said, a larger technology stack often magnifies system interoperability issues. Software vendors don’t always follow the same standards in data formatting, storage, or electronic exchange methods.

Healthcare providers increasingly cite a lack of cross-platform interoperability and poor EMR integration as the top pain points with their existing tech stacks, overshadowing technology costs.

Source: Bain & Company

The 21st Century Cures Act has made some attempts to establish shared industry standards, but its impacts are still limited. At present, organizations address the problem by developing custom data integrations and healthcare system connectors when no native option is available.

Data integrations in healthcare require specific technical skills, which combine general engineering knowledge with healthcare domain acumen.

Such talent is hard to find.

Healthcare industry professionals name “lack of specific skills and talents” as their primary barrier to digital transformation.

Healthcare IT teams are more used to operating on-premises technologies and have much less experience with cloud infrastructure, for example. To create data integrations between legacy on-premises systems and newly acquired cloud software, IT teams also require knowledge of API development and cloud-native data architectures, which many also lack.

The data integration process in healthcare has its inherent complexities, but it is feasible when you’ve chosen the right strategy and implementation framework.

Below are the six recommended best practices.

Data discovery is the process of identifying and classifying different data assets and then establishing their storage location, lineage, and access permissions.

With healthcare data being spread across multiple cloud and on-premises locations, organizations often lose track of different assets, resulting in data silos and higher storage costs.

Attempting data integrations without conducting data discovery first is like driving without a map: You may get to your destination eventually, but there will be a lot of costly detours.

Specialized data discovery tools help:

Effectively, your task is to create a virtual map of your data estate to understand where the data rests and how it’s consumed by different applications and users. As part of the process, you create and assign sensitivity labels for different classes of data.

Sensitivity labels should specify allowed use cases, incorporate data retention policies, and establish appropriate identity and access management controls.

A well-organized data catalog helps you easily locate new datasets for self-service BI tools and ad-hoc healthcare analytics projects.
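To make the idea of a catalog with sensitivity labels more concrete, here is a minimal sketch. Every name in it (`SensitivityLabel`, `CatalogEntry`, `find_datasets`, `past_retention`, the dataset names, and the location strings) is hypothetical, and a real data discovery tool would populate such records automatically rather than by hand.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass(frozen=True)
class SensitivityLabel:
    name: str                     # e.g. "phi" or "deidentified"
    allowed_use_cases: frozenset  # what the data may be used for
    retention_days: int           # how long the data may be kept
    allowed_roles: frozenset      # who may access it


@dataclass
class CatalogEntry:
    dataset: str
    location: str
    created: date
    label: SensitivityLabel
    upstream: list = field(default_factory=list)  # simple lineage: source datasets


def find_datasets(catalog, use_case):
    """List datasets whose label permits the given use case."""
    return [e.dataset for e in catalog if use_case in e.label.allowed_use_cases]


def past_retention(entry, today):
    """True when the dataset has outlived its retention period."""
    return today > entry.created + timedelta(days=entry.label.retention_days)


phi = SensitivityLabel("phi", frozenset({"treatment"}), 2190, frozenset({"clinician"}))
deid = SensitivityLabel(
    "deidentified", frozenset({"analytics", "research"}), 3650,
    frozenset({"analyst", "clinician"}),
)
catalog = [
    CatalogEntry("ehr_encounters", "onprem://ehr-db/encounters", date(2020, 1, 1), phi),
    CatalogEntry("encounters_deid", "lake://curated/encounters_deid",
                 date(2023, 6, 1), deid, upstream=["ehr_encounters"]),
]
analytics_ready = find_datasets(catalog, "analytics")
```

With entries like these, a BI team can ask the catalog which datasets are cleared for analytics instead of querying source systems directly.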

A significant part of data discovery is documenting your data landscape. As mentioned earlier, healthcare data can come in structured and unstructured form.

Structured data is easier to integrate as long as the two systems use the same data formats (which isn’t always the case). There are no unified data standards in healthcare, and structured data is commonly stored in different formats (e.g., HDF, SDA, FHIR, and HL7 v2).

The lack of unified standards prevents healthcare organizations from exchanging prescription information with commercial laboratories, outpatient care facilities, or even pharmacies. Lack of interoperability also affects the speed and efficiency of reimbursements from insurance partners.

For these reasons, healthcare developers often need to think early on about the types of data transformations that will be required for improving system interoperability.
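As an illustration of such a transformation, the sketch below maps a single HL7 v2 `PID` segment to a FHIR-style `Patient` dictionary. It is deliberately simplified and rests on assumptions: the sample message is made up, the function `pid_to_fhir_patient` is hypothetical, and escape sequences, repeating fields, and validation are ignored. A production integration would typically rely on a dedicated HL7 parser and a FHIR validator.

```python
# Map HL7 v2 administrative sex codes to FHIR gender values.
GENDER_MAP = {"M": "male", "F": "female", "O": "other", "U": "unknown"}


def pid_to_fhir_patient(pid_segment: str) -> dict:
    """Convert a pipe-delimited PID segment into a FHIR-style Patient dict."""
    fields = pid_segment.split("|")
    if fields[0] != "PID":
        raise ValueError("expected a PID segment")

    identifier = fields[3].split("^")[0]   # PID-3: patient identifier list
    name_parts = fields[5].split("^")      # PID-5: family^given
    dob = fields[7]                        # PID-7: date of birth, YYYYMMDD
    sex = fields[8] if len(fields) > 8 else ""  # PID-8: administrative sex

    patient = {
        "resourceType": "Patient",
        "identifier": [{"value": identifier}],
        "name": [{"family": name_parts[0], "given": name_parts[1:2]}],
        "gender": GENDER_MAP.get(sex, "unknown"),
    }
    if len(dob) >= 8:
        patient["birthDate"] = f"{dob[:4]}-{dob[4:6]}-{dob[6:8]}"
    return patient


# Made-up sample segment for illustration.
patient = pid_to_fhir_patient("PID|1||12345^^^HOSP^MR||DOE^JOHN||19800101|M")
```

Even this small mapping shows why format differences matter: dates, names, and coded values all need explicit conversion rules before two systems can exchange a record.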

The two common approaches to data transformation are extract, transform, load (ETL) and extract, load, transform (ELT).

ETL works best for structured data that can be represented as tables with rows and columns. Data gets transformed from one structured format to another and then loaded into the data warehouse (DWH).

ELT, in turn, works better for unstructured data formats (images, audio, PDF) as it allows you to first load the data into the DWH or data lake and then apply further transformations to make it usable for other applications.
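The difference between the two approaches is mostly about where the transformation runs. The sketch below contrasts them using an in-memory SQLite database as a stand-in for a DWH or data lake. The table names, sample readings, and the `to_celsius` helper are assumptions made for illustration.

```python
import sqlite3

# Raw temperature readings in inconsistent units and formatting.
raw_rows = [("p1", " 98.6F "), ("p2", "37.0C")]


def to_celsius(reading: str) -> float:
    """Normalize a reading such as '98.6F' or '37.0C' to degrees Celsius."""
    reading = reading.strip().upper()
    value, unit = float(reading[:-1]), reading[-1]
    return round((value - 32) * 5 / 9, 1) if unit == "F" else round(value, 1)


conn = sqlite3.connect(":memory:")

# ETL: transform in application code first, then load only the clean result.
conn.execute("CREATE TABLE temps_etl (patient_id TEXT, celsius REAL)")
conn.executemany(
    "INSERT INTO temps_etl VALUES (?, ?)",
    [(pid, to_celsius(reading)) for pid, reading in raw_rows],
)

# ELT: load the raw data as is, then transform inside the data store.
conn.execute("CREATE TABLE temps_raw (patient_id TEXT, reading TEXT)")
conn.executemany("INSERT INTO temps_raw VALUES (?, ?)", raw_rows)
conn.create_function("to_celsius", 1, to_celsius)
conn.execute(
    "CREATE TABLE temps_elt AS "
    "SELECT patient_id, to_celsius(reading) AS celsius FROM temps_raw"
)

etl = conn.execute("SELECT * FROM temps_etl ORDER BY patient_id").fetchall()
elt = conn.execute("SELECT * FROM temps_elt ORDER BY patient_id").fetchall()
```

Both paths end with the same clean table, but only the ELT path keeps the raw readings in the store, so they can be re-transformed later if requirements change.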

A common strategy for overcoming interoperability issues is creating a centralized cloud-based data repository for hosting raw and transformed data and then distributing it to connected big data analytic platforms and applications.

HIPAA allows the use of both public and private clouds for storing healthcare data as long as the underlying infrastructure is properly secured.

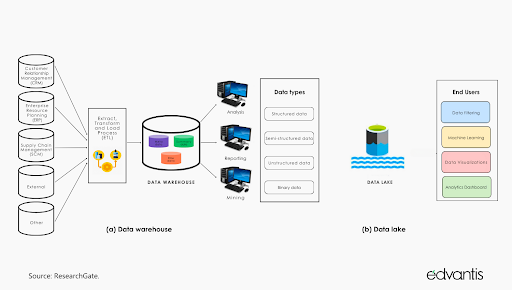

The two common types of cloud data storage are data warehouses (DWHs) and data lakes.

Source: ResearchGate

A data warehouse (DWH) is a centralized repository for storing structured data. It’s a large-scale repository that aggregates data from multiple sources, transforms it into a standardized format, and stores it in a way that makes it easy to retrieve and analyze.

Data warehouses typically use extract, transform, and load (ETL) processes to move data from source systems to the warehouse and the online analytical processing (OLAP) method for subsequent analysis.

Some DWH platforms are well suited for running complex SQL queries to perform multi-dimensional analysis of the stored data. Modern cloud solutions also include native integrations with popular self-service BI apps, analytics services, and reporting tools. Many also include native tools for data cleansing, data quality management, and data governance.

The downsides of DWHs include limited database scalability potential (as most solutions are SQL-based) and higher operating costs due to all the transformation efforts and licensing.

A data lake is another type of cloud repository that stores raw data in its native format until it is needed for analysis or other purposes. Data lake solutions can host structured, semi-structured, and unstructured data at the same time.

Data lakes are designed to support big data analytics, machine learning, and other advanced analytics applications that require access to large amounts of raw data.

Unlike DWHs, data lakes offer instant data accessibility. You can start querying the lake immediately after new data is ingested — a characteristic that supports real-time big data analytics or advanced machine learning scenarios. Cloud data lakes are also highly scalable and more cost effective than DWH solutions, making them a more attractive option for long-term data storage.
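The "store raw, interpret later" idea can be sketched with plain files. In the example below, a temporary directory stands in for a cloud data lake, and the `ingest` and `query` helpers, the `wearables` source name, and the sample payloads are all hypothetical. Real data lakes use object storage and dedicated query engines, so treat this only as an illustration of schema-on-read.

```python
import json
import tempfile
from pathlib import Path


def ingest(lake: Path, source: str, payload: dict) -> Path:
    """Write the payload unchanged into a per-source folder of the lake."""
    folder = lake / source
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{len(list(folder.iterdir())):06d}.json"
    path.write_text(json.dumps(payload))
    return path


def query(lake: Path, source: str, predicate):
    """Read raw files and apply structure only at query time."""
    for path in sorted((lake / source).glob("*.json")):
        record = json.loads(path.read_text())
        if predicate(record):
            yield record


lake_dir = Path(tempfile.mkdtemp())
# The two records have different shapes; no schema is enforced on write.
ingest(lake_dir, "wearables", {"patient": "p1", "heart_rate": 72})
ingest(lake_dir, "wearables", {"patient": "p2", "heart_rate": 131, "device": "watch"})

high = list(query(lake_dir, "wearables", lambda r: r.get("heart_rate", 0) > 120))
```

New records become queryable as soon as they are written, since no upfront transformation step stands between ingestion and analysis.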

In summary, DWHs work better when you want to provide more healthcare data for business intelligence tools. A data lake, in turn, is a better architecture for supporting ad hoc data science and complex machine learning projects.

Legacy software in healthcare organizations, such as on-premises ERPs and old mainframe databases, is incompatible with modern cloud-native applications, IoT devices, and other systems that require their data.

One issue is the incompatibility of data formats. The other is the inability of such apps to handle the call volumes that newer SaaS apps generate.

The optimal approach to making data accessible from legacy healthcare software is to use a layer of application programming interface (API) abstraction.

Adding an API connectivity layer can isolate client-facing applications from backend services, hosting and provisioning the required data to other applications.
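A minimal sketch of such an abstraction layer follows. It assumes a hypothetical legacy scheduling backend that returns fixed-width text records. The classes `LegacySchedulingSystem` and `AppointmentApi`, the record layout, and the sample values are invented for illustration and do not describe any particular product.

```python
from dataclasses import asdict, dataclass


@dataclass
class Appointment:
    patient_id: str
    date: str
    department: str


class LegacySchedulingSystem:
    """Stand-in for a legacy backend that returns fixed-width text records."""

    def fetch(self, patient_id: str) -> str:
        # Layout: 8 chars patient ID, 8 chars date (YYYYMMDD), then department.
        return f"{patient_id:<8}20240116CARDIOLOGY "


class AppointmentApi:
    """API layer: clients get clean dicts and never see the legacy format."""

    def __init__(self, backend: LegacySchedulingSystem):
        self._backend = backend

    def get_appointment(self, patient_id: str) -> dict:
        raw = self._backend.fetch(patient_id)
        appointment = Appointment(
            patient_id=raw[0:8].strip(),
            date=f"{raw[8:12]}-{raw[12:14]}-{raw[14:16]}",
            department=raw[16:].strip().title(),
        )
        return asdict(appointment)


api = AppointmentApi(LegacySchedulingSystem())
response = api.get_appointment("P001")
```

Because clients depend only on `AppointmentApi`, the legacy backend can later be replaced without changing the applications that consume the data.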

API management platforms also help implement optimal policies (e.g., rate limiting and throttling) to protect legacy systems from receiving too many requests (API calls) and experiencing downtime as a result.
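Rate limiting is often implemented with a token bucket, which allows short bursts while capping the sustained request rate. The sketch below is one possible implementation, not the mechanism of any specific API management platform. The `TokenBucket` class and its parameters are assumptions, and a fake clock is used so the behavior is easy to follow.

```python
import time


class TokenBucket:
    """Allow up to `capacity` burst requests, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may pass through to the legacy system."""
        now = self.clock()
        # Refill tokens for the elapsed time, without exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Fake clock (a mutable list) so the example does not depend on real time.
fake_now = [0.0]
bucket = TokenBucket(rate_per_sec=1, capacity=2, clock=lambda: fake_now[0])

burst = [bucket.allow() for _ in range(3)]  # third call exceeds the burst size
fake_now[0] = 1.0                           # one second later, one token is back
after_wait = bucket.allow()
```

Requests that are rejected would typically receive an HTTP 429 response from the API layer, so the legacy system never sees the excess traffic.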

Overall, APIs are more cost effective than re-architecting or replacing legacy systems when building new data integrations. Moreover, robust API management platforms help organizations stream data from newer systems like medical wearable devices, connected hospital equipment, or remote patient monitoring solutions.

A data pipeline is a codified series of steps you perform when moving data from a source (e.g., an EHR app) to a destination (e.g., a DWH). A typical data pipeline includes an orchestrated sequence of steps for data ingestion, processing, storage, and access, all performed automatically, often within seconds.

Source: Google Cloud

Well-architected data pipelines play a key role in promoting data integrity and security. They help ensure secure and rapid data transportation between different systems, prevent data duplication, and perform data enrichment.
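The stages described above can be sketched as a chain of small functions. This is a toy example under stated assumptions: the stage names, the `encounter_id` key, the sample rows, and the in-memory `warehouse` list are hypothetical, and a real pipeline would run on an orchestration tool with proper error handling.

```python
from typing import Iterable, Iterator


def ingest(rows: Iterable[dict]) -> Iterator[dict]:
    """Ingestion: read records from a source (here, an in-memory list)."""
    yield from rows


def deduplicate(rows: Iterable[dict], key: str = "encounter_id") -> Iterator[dict]:
    """Processing: drop records whose key was already seen."""
    seen = set()
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            yield row


def enrich(rows: Iterable[dict], pharmacy: dict) -> Iterator[dict]:
    """Processing: attach pharmacy data to each encounter."""
    for row in rows:
        yield {**row, "prescriptions": pharmacy.get(row["patient_id"], [])}


def store(rows: Iterable[dict], sink: list) -> int:
    """Storage: write records to the destination and return the count."""
    count = 0
    for row in rows:
        sink.append(row)
        count += 1
    return count


ehr_rows = [
    {"encounter_id": "e1", "patient_id": "p1"},
    {"encounter_id": "e1", "patient_id": "p1"},  # duplicate from a retry
    {"encounter_id": "e2", "patient_id": "p2"},
]
pharmacy_data = {"p1": ["metformin"]}
warehouse: list = []

loaded = store(enrich(deduplicate(ingest(ehr_rows)), pharmacy_data), warehouse)
```

Because each stage is a generator, records flow through one at a time, and individual stages can be tested or replaced independently.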

Some 80% of healthcare professionals said they want to enrich their clinical data with data from other sources (e.g., other EHR systems, claims data, patient-reported outcomes, and pharmacy data) to get a 360-degree view of each patient. A data pipeline architecture helps enable these types of data integrations.

Data governance is a collection of processes, policies, and technologies to manage data availability, usability, integrity, and security. Its primary goal is to create a transparent, auditable, and unified overview of all data processes in your organization.

Data governance also promotes ethical and secure data use, which helps healthcare providers comply with applicable regulations. New data integrations (e.g., unprotected open APIs) can become vulnerabilities without proper oversight, increasing the risks of data breaches or cyber exploits.

Global organizations like OECD and AHIMA have issued recommendations on implementing health data governance on the process level. Once the processes are set right, incorporate technology to support your efforts.

Use data discovery services to maintain an up-to-date view of your data landscape. Automate metadata collection and management to establish ownership of data assets and document usage.

Implement identity and access management (IAM) solutions to automatically provision access to new data integrations and data sets to the right users and implement granular controls for usage permissions. Finally, ensure you have proper security solutions to monitor your applications and infrastructure.
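As a rough illustration of granular, auditable access control, the sketch below checks a permission against a role and records every decision. The roles, permission strings, and the `check_access` helper are hypothetical. A real deployment would delegate this to an IAM service and write to a tamper-resistant audit store.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "clinician": {"read:clinical", "write:clinical"},
    "analyst": {"read:deidentified"},
}

audit_log: list = []


def check_access(user: str, role: str, permission: str) -> bool:
    """Decide whether the role grants the permission and log the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


analyst_reads_phi = check_access("sam", "analyst", "read:clinical")
analyst_reads_deid = check_access("sam", "analyst", "read:deidentified")
```

Logging denied requests alongside granted ones gives auditors a complete trail of who attempted to access which data.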

In healthcare, data integration can make a real-world difference. By having access to high-fidelity data, healthcare providers can expedite their diagnostic processes, leading to faster intervention and faster recovery.

Moreover, integrating data can accelerate the approval process for new drugs, which can then become available to patients who need them. Patient outcomes also improve as healthcare providers gain a more comprehensive understanding of patients' medical histories, allowing for personalized treatment and prevention plans.

Although the data integration process requires a substantial initial investment, it saves your organization money in the long run. With modern, auditable systems, you don’t have to worry as much about compliance, cybersecurity risks, and unplanned downtime. Not to mention the positive impact of greater data accessibility on operational decision-making.

Ready to take your healthcare integration knowledge to the next level? Explore the ways data analytics revolutionizes healthcare.

Edited by Shanti S Nair

Elena Prokopets is a B2B writer at Edvantis and a content strategist who helps software companies and their technology partners create content that ranks well and drives industry conversations.
