The world of recruitment has undergone a significant transformation in recent years thanks to the rapid advancements in artificial intelligence (AI) technology.

AI has revolutionized many aspects of the recruitment process, offering innovative tools and solutions that automate workflows, enhance decision-making, and improve the candidate experience.

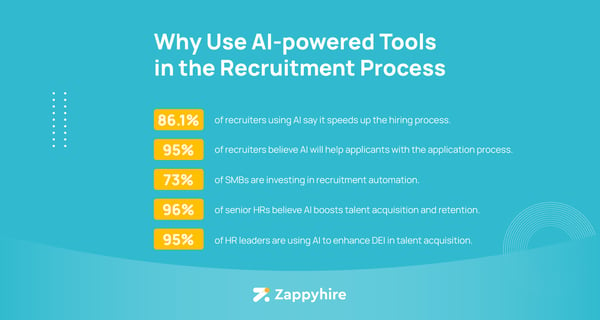

The statistics on AI's impact on recruitment are impressive.

A staggering 96% of senior HR professionals believe that AI will greatly enhance talent acquisition and retention. Furthermore, 86.1% of recruiters using AI confirm that it speeds up the hiring process, which demonstrates its efficiency and time-saving capabilities.

AI adoption in recruitment is widespread, with at least 73% of companies investing in recruitment automation to optimize their talent acquisition efforts. This trend is reinforced by the 85% of recruiters who find AI useful in their recruitment practices.

However, as we embrace this transformative technology, we also have to address the ethics of AI in recruitment. While AI offers numerous advantages, it also poses challenges and potential pitfalls that must be carefully navigated.

In this blog, we’ll explore the intricacies of AI in recruitment, consider its potential, and highlight the importance of ethical considerations in its implementation.

Source: Zappyhire

Before delving into the ethical implications, let's first establish a clear definition and scope of AI in recruitment.

AI in recruitment refers to the use of machine learning (ML) algorithms, natural language processing (NLP), and other AI techniques to automate or augment various stages of the hiring process. It’s also called recruitment automation software.

In the context of employment, this could be anything from an algorithm that recommends candidates based on your specific requirements (e.g., "I want someone who has worked at companies like Google or Amazon") all the way up to video interviewing software or chatbots that screen candidates for you by asking them questions about their past experience and skill set.

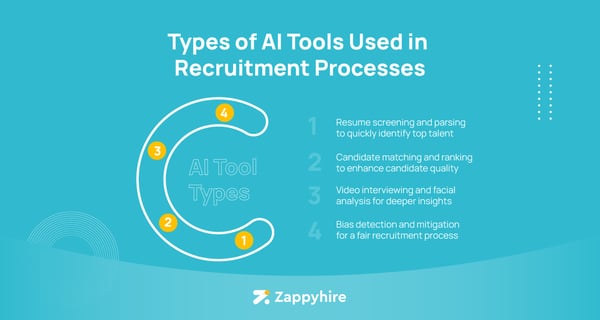

AI-powered recruiting software, commonly referred to as "HR tech" or "talent tech," is becoming increasingly common in HR departments. Let’s take a look at some of these tools.

From résumé screening and candidate matching to video interviewing and bias detection, AI tools have the capacity to automate time-consuming recruitment tasks and optimize the overall hiring experience for everyone, including the candidates.

One of the initial stages in recruitment involves reviewing a vast number of résumés. AI-powered résumé screening and parsing tools can quickly analyze résumés, extract relevant information, and identify top candidates based on your predefined criteria.

This reduces your burden, allowing you to focus on more strategic aspects of talent acquisition.
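To make the idea of screening against predefined criteria concrete, here is a minimal sketch. It assumes a plain keyword match on résumé text; the function name `screen_resume`, the sample résumé, and the skill list are all hypothetical, and real screening tools rely on far richer parsing than this.

```python
import re


def screen_resume(resume_text, required_skills):
    """Report which required skills appear in the résumé text.

    Matching is case-insensitive and on whole terms, so "java" does not
    match "JavaScript".
    """
    matched = []
    for skill in required_skills:
        # Lookarounds act as word boundaries that also work for terms like "C++".
        pattern = r"(?<!\w)" + re.escape(skill) + r"(?!\w)"
        if re.search(pattern, resume_text, flags=re.IGNORECASE):
            matched.append(skill)
    missing = [s for s in required_skills if s not in matched]
    coverage = len(matched) / len(required_skills) if required_skills else 0.0
    return {"matched": matched, "missing": missing, "coverage": coverage}


# Hypothetical résumé text and criteria, for illustration only.
resume = "Five years of Python and SQL experience; built dashboards in Tableau."
result = screen_resume(resume, ["python", "sql", "java"])
print(result["matched"])  # ['python', 'sql']
print(result["missing"])  # ['java']
```

A match like this only says a term is present, not how well the candidate knows it, which is one reason a recruiter should still read the shortlisted résumés.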

AI-based candidate matching and ranking tools utilize algorithms that consider various factors, such as skills, experience, and cultural fit, to identify the most suitable candidates for each role.

This saves time and enhances the quality of candidates you’ll meet.
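As a rough illustration of ranking, the sketch below scores candidates with a weighted sum of two measurable factors. The weights, the ten-year cap on experience, and the `Candidate` fields are assumptions made up for this example, not how any particular product works. Cultural fit is left out on purpose because it is hard to quantify without introducing bias.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    skills_match: float      # share of required skills matched, 0.0 to 1.0
    years_experience: float


# Hypothetical weights and cap; a real system would need to justify these.
RANKING_WEIGHTS = {"skills": 0.7, "experience": 0.3}
MAX_YEARS = 10


def score(candidate):
    """Weighted sum of skills match and capped, normalized experience."""
    experience = min(candidate.years_experience, MAX_YEARS) / MAX_YEARS
    return (RANKING_WEIGHTS["skills"] * candidate.skills_match
            + RANKING_WEIGHTS["experience"] * experience)


def rank(candidates):
    """Return candidates ordered from highest to lowest score."""
    return sorted(candidates, key=score, reverse=True)


pool = [
    Candidate("A", skills_match=0.9, years_experience=2),
    Candidate("B", skills_match=0.6, years_experience=12),
    Candidate("C", skills_match=0.8, years_experience=5),
]
print([c.name for c in rank(pool)])  # ['B', 'C', 'A']
```

Notice how the order depends entirely on the chosen weights: with more weight on skills, candidate A would move up. That sensitivity is worth keeping in mind when you read a ranked list.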

Video interviewing has gained popularity in recent years, offering convenience for candidates and recruiters.

AI-powered video interviewing tools go beyond mere video conferencing by analyzing facial expressions, tone of voice, and body language to provide deeper insights into a candidate's suitability for a role.

However, it’s important to balance the benefits of such analysis with privacy concerns and potential bias.

AI can help reduce bias in recruitment by limiting the role of human subjectivity in decision-making. ML algorithms can detect and reduce bias in job descriptions, candidate evaluations, and selection processes.

However, while AI is on the path to reducing bias in recruiting, humans still influence it, and eliminating bias completely remains a distant goal. Using AI ethically in recruiting means promoting fairness and inclusivity and striving for a diverse workforce, which is still a work in progress for AI.


Algorithmic bias is a critical concern in AI recruitment systems as it can perpetuate inequalities and lead to discriminatory outcomes. Examine the sources and manifestations of bias so you can address it effectively.

Let's talk about two key aspects of bias in AI recruitment systems: biased training data and the different manifestations of bias.

One of the primary sources of bias in AI recruitment systems is biased training data.

AI algorithms learn from historical data, which reflect existing societal biases and inequalities. If the training data predominantly represents a particular demographic or exhibits unfair patterns, the AI system may perpetuate those biases in its decision-making processes.

For example, if a dataset used for training an AI system comprises primarily résumés from a certain demographic, the algorithm may inadvertently favor candidates from that demographic, leading to the exclusion of other qualified individuals. Make sure you’re working with diverse and representative training data to mitigate bias.
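One simple way to check whether training data is representative is to compare each group's share of the data with its share of a reference population, such as your applicant pool. The sketch below assumes records are plain dictionaries; the field name `source`, the reference shares, and the ten-point tolerance are hypothetical values chosen for illustration.

```python
from collections import Counter


def group_shares(records, key):
    """Share of records belonging to each group, keyed by the given field."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, reference, tolerance=0.10):
    """Groups whose share of the data falls more than `tolerance` below
    their share of the reference population."""
    return sorted(
        group for group, expected in reference.items()
        if shares.get(group, 0.0) < expected - tolerance
    )


# Hypothetical training records and applicant-pool shares.
training = (
    [{"source": "university_a"}] * 6
    + [{"source": "university_b"}]
    + [{"source": "bootcamp"}]
)
applicant_pool = {"university_a": 0.4, "university_b": 0.3, "bootcamp": 0.3}

shares = group_shares(training, "source")
print(underrepresented(shares, applicant_pool))  # ['bootcamp', 'university_b']
```

A check like this will not prove the data is fair, but it can point to gaps worth filling before a model learns from them.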

You have to be aware of the various ways bias manifests in recruitment systems so you can address them effectively. Let's explore two common manifestations: educational and geographic biases, and language and keyword biases.

AI systems trained on biased data may exhibit educational and geographic biases. Just like in the example above, if the training data predominantly consists of candidates from prestigious universities or specific geographical regions, the AI system may inadvertently favor candidates with similar educational backgrounds or from certain areas. This can result in the exclusion of other qualified candidates from alternative educational paths or other locations.

Unintentional exclusions based on educational and geographic biases hinder diversity and limit your potential talent pool. Ensure that your AI systems consider a broad range of educational backgrounds and geographic locations to prevent discrimination.

Language and keyword biases are two more manifestations of bias in AI recruitment systems. AI algorithms may learn to associate certain words or phrases with desirable or undesirable candidate attributes, which can lead to unconscious discrimination.

For example, if certain keywords or phrases are associated with gender, age, or race in the training data, the AI system may inadvertently favor or penalize candidates based on these factors.

Addressing language and keyword biases requires careful scrutiny of the training data and algorithmic design. Do everything you can to guarantee your AI system does not discriminate based on protected characteristics and that language-based evaluations are objective.
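Part of that scrutiny can be automated. As a minimal sketch, the snippet below flags terms in a job description that appear on a watch list. The `CODED_TERMS` list is a made-up example for illustration, not a validated lexicon; you would need to build and review your own list with people who know your context.

```python
import re

# Hypothetical watch list; the terms and categories are illustrative only.
CODED_TERMS = {
    "gender": ["rockstar", "ninja", "dominant"],
    "age": ["digital native", "recent graduate", "young"],
}


def flag_coded_terms(text):
    """Return watch-list terms found in the text, grouped by category."""
    lowered = text.lower()
    hits = {}
    for category, terms in CODED_TERMS.items():
        found = [
            term for term in terms
            if re.search(r"\b" + re.escape(term) + r"\b", lowered)
        ]
        if found:
            hits[category] = found
    return hits


posting = "We need a young, energetic coding ninja who is a digital native."
print(flag_coded_terms(posting))
# {'gender': ['ninja'], 'age': ['digital native', 'young']}
```

The output is a prompt for a human editor to rewrite the posting, not an automatic verdict on whether the wording is discriminatory.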

To reduce bias in AI recruitment systems, adopt best practices such as diverse and representative training data, regular bias audits, and evaluations of AI systems, along with enhancing transparency and explainability.

Organizations promote fairness, inclusivity, and equal opportunities in the recruitment process by actively identifying and addressing biases.

Using AI for recruitment isn’t inherently unethical, but it can lead to unintentional bias. Some studies suggest that AI-powered recruiting tools are more effective than traditional ones, but while they may seem more efficient than human recruiters at first glance, they also have drawbacks.

A significant worry is that since AI tools rely on data sets that reflect existing societal biases, they will also perpetuate those biases in their decision-making process.

Bias can arise from skewed training data, algorithms, or interpretations of the output.

Let’s say an AI-powered recruitment tool is trained on historical data from a tech company. The company has a long history of hiring candidates from reputable universities. This tendency is embedded in the historical data.

This bias may be unintentionally maintained when the AI tool evaluates candidates. As trained, the algorithm prioritizes candidates from the predefined universities in its database and overlooks other qualified candidates with relevant skills and experience.

The bias arises from the skewed training data and manifests itself in the form of favoritism. Although designed to improve the hiring process, the AI algorithm inadvertently perpetuates existing biases, diverging from fair and inclusive candidate evaluation.

AI systems are complex and difficult to interpret, making it challenging for candidates and recruiters to understand why certain decisions are made. Lack of transparency erodes trust in the recruitment process and raises concerns about fairness and accountability.

To address transparency challenges, provide clear explanations about the ways AI algorithms work, the factors that influence decision-making, and the criteria they use to evaluate candidates. Open communication and transparency empower candidates to understand and trust the AI-driven recruitment process. In fact, 48% of job seekers say that not getting proper feedback is one of the most frustrating aspects of applying for a job.

You have to collect and store sensitive candidate data when you use AI to recruit talent. This raises concerns about privacy and data protection. You must ensure you have the informed consent of each candidate and that their information is securely stored and protected from unauthorized access or misuse. Compliance with relevant data protection regulations, such as GDPR, is vital to safeguarding candidate privacy.

Adopt robust data security measures to protect candidate information. This includes implementing encryption protocols, access controls, and regular security audits. Additionally, you have to establish clear policies on data retention and guarantee that candidate data is only used for recruitment purposes and not shared with third parties without consent.
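Two of these ideas are easy to sketch in code: replacing a direct identifier with a keyed hash before data reaches analytics, and checking records against a retention window. Note that keyed hashing is pseudonymization, not encryption, and it is shown here as one possible complement to the measures above. The 180-day window, the function names, and the demo key are assumptions for illustration; a real key belongs in a secrets manager, and your retention period should come from your legal team.

```python
import hashlib
import hmac
from datetime import date, timedelta

RETENTION_DAYS = 180  # hypothetical policy value


def pseudonymize(email, secret_key):
    """Replace an email with a keyed SHA-256 digest so records can still be
    linked without exposing the address itself."""
    normalized = email.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def is_expired(collected_on, today, retention_days=RETENTION_DAYS):
    """True when a record is older than the retention window."""
    return today - collected_on > timedelta(days=retention_days)


demo_key = b"demo-key-do-not-use-in-production"  # placeholder only
token = pseudonymize("Jane.Doe@example.com", demo_key)
print(len(token))                                      # 64
print(is_expired(date(2024, 1, 1), date(2024, 8, 1)))  # True
print(is_expired(date(2024, 6, 1), date(2024, 8, 1)))  # False
```

Records flagged as expired would then be deleted or anonymized by whatever process your data retention policy defines.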

When used properly, recruitment software brings tons of benefits to your process. In fact, the integration of AI in recruitment has been most helpful in sourcing candidates, with 58% of recruiters finding AI valuable in this respect, followed closely by screening candidates at 56% and nurturing candidates at 55%.

The positive perception of AI extends beyond recruiters, as 80% of executives believe that AI has the potential to improve productivity and performance within their organizations.

Even in its early adoption phase, AI-powered recruiting software showcased remarkable results. Early adopters experienced a significant reduction in cost per screen, with a staggering 75% decrease.

Turnover rates also saw a notable decline of 35%. These findings, observed as far back as 2017, provide clear evidence of the positive effects of AI implementation in the recruitment process.

Now, let’s take a look at some best practices to ensure fairness, accuracy, and transparency in your recruiting process.

AI algorithms learn from the data they’re trained on. To prevent biases from being perpetuated, make sure your training data is representative of the candidate pool. Actively address underrepresentation and collect data from diverse sources to create a more inclusive and fair AI recruitment system.

To maintain the integrity of AI recruitment systems, set up regular audits and evaluations to detect any potential biases. These evaluations help identify and address systemic biases to improve the overall fairness of the hiring process. By continuously monitoring and evaluating AI systems, you confirm that they align with ethical standards and provide unbiased outcomes.
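One common starting point for such an audit is to compare selection rates across groups. The sketch below uses the "four-fifths" rule of thumb, under which a group whose selection rate is below 80% of the highest group's rate is flagged for closer review. This technique is brought in here as an illustration and is not the only or definitive audit method; the group names and counts are invented.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, total applicants)."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def adverse_impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Groups whose ratio falls below the threshold (0.8 = four-fifths)."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes: (advanced by the AI tool, total applicants).
audit = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(flag_groups(audit))  # ['group_b']
```

A flag is a signal to investigate, not proof of discrimination; small sample sizes in particular can produce misleading ratios.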

Employ interpretable AI models and algorithms that provide clear explanations for the decisions they make. By communicating the role of AI in the recruitment process and the factors considered in decision-making, you help candidates and recruiters understand and trust the technology.

When candidates receive notifications or feedback based on AI evaluations, the reasoning behind those decisions should be explained in a way that is understandable and meaningful to them. This transparency helps candidates navigate the recruitment process and builds trust in the AI system.
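With an interpretable model, producing that kind of explanation can be straightforward. The sketch below assumes a simple linear score, where each factor's contribution is its weight times its value, and lists the factors from most to least influential. The weights, factor names, and wording of the message are hypothetical.

```python
# Hypothetical weights for a simple, interpretable linear score.
SCORE_WEIGHTS = {
    "skills_match": 0.6,
    "relevant_experience": 0.3,
    "assessment_score": 0.1,
}


def explain(features):
    """Return the total score and each factor's contribution, largest first."""
    contributions = {
        name: SCORE_WEIGHTS[name] * value for name, value in features.items()
    }
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return total, ranked


def format_explanation(features):
    """Turn the contributions into a short, readable message."""
    total, ranked = explain(features)
    lines = [f"Overall score: {total:.2f}"]
    for name, contribution in ranked:
        label = name.replace("_", " ")
        lines.append(f"- {label}: {contribution:+.2f}")
    return "\n".join(lines)


# Factor values are on a 0.0 to 1.0 scale in this example.
candidate = {"skills_match": 0.9, "relevant_experience": 0.4, "assessment_score": 0.5}
print(format_explanation(candidate))
```

More complex models need dedicated explanation techniques, which is one argument for preferring simpler models when the decision affects someone's job prospects.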

Since AI relies on candidate data, you have to prioritize privacy and data protection. Ensure compliance with relevant data protection regulations, such as GDPR or the California Consumer Privacy Act (CCPA).

Implement robust security measures to protect candidate information from unauthorized access, use, or breaches. By safeguarding privacy, you can establish trust and confidence in the use of AI in recruitment.

To foster responsible AI implementation in recruitment, establish clear guidelines for AI usage and decision-making. Designate accountable individuals or teams responsible for the AI recruitment system's performance and adherence to ethical practices.

Regular monitoring and governance of AI systems will help ensure accountability, mitigate potential risks, and promote ethical conduct throughout the recruitment process.

While AI can enhance efficiency in recruitment processes, you have to strike a balance between AI efficiency and human judgment. AI should be seen as a tool to support and augment our decision-making, not as a replacement for it. Incorporate human oversight and review to ensure that AI-based choices align with organizational values and ethics.

Human judgment brings essential qualities like empathy, intuition, and context understanding to the recruitment process. In fact, a little human know-how combined with an AI system is all it takes to ensure a quick, data-driven recruitment process.

A substantial 68% of recruiters believe that using AI in the recruitment process can effectively remove unintentional bias to work toward an objective assessment of candidates.


AI brings automation and data-driven insights to the table, but you have to recognize the value of human decision-making and incorporate it effectively.

“Embracing the power of human/AI collaboration in the recruitment process is the key to unlocking a new era of talent acquisition.”

Jyothis KS

Co-founder, Zappyhire

A staunch proponent of “human-first” decision-making, Jyothis reiterates, “Together, we can combine the insights and capabilities of artificial intelligence with the human touch to discover hidden potential, make unbiased decisions, and build diverse and exceptional teams.”

Let's explore some important aspects to keep in mind.

AI’s ability to automate repetitive tasks, analyze vast amounts of data, identify patterns, and provide data-driven insights empowers you to make more informed choices while saving valuable time.

However, AI is not a substitute for human judgment. You have to incorporate human oversight and review to ensure fairness, mitigate biases, and interpret complex contexts that AI algorithms may not fully grasp. The human touch allows for a deeper understanding of candidates because we consider subjective factors and provide the necessary empathy that AI may lack.

Here's how you can strike the right balance between AI technology and human judgment.

Incorporate a collaborative workflow where AI technology and human expertise go hand in hand. Give your human recruiters the task of reviewing AI recommendations and decisions to certify alignment with organizational values, ethical standards, and legal requirements.
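A collaborative workflow like this can be sketched as a routing rule in which the AI only ever suggests and a recruiter always makes the final call. In the example below, the threshold, the labels, and the `decide` function are hypothetical; the point is that no candidate is rejected without a human looking at the case, and that disagreements with the AI are recorded.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    candidate_id: str
    ai_score: float  # 0.0 to 1.0, produced by some upstream model


ADVANCE_THRESHOLD = 0.75  # hypothetical cut-off


def route(recommendation):
    """The AI may suggest advancing a candidate but never rejects one:
    everything below the threshold goes to a recruiter for review."""
    if recommendation.ai_score >= ADVANCE_THRESHOLD:
        return "advance"
    return "needs_review"


def decide(recommendation, recruiter_decision):
    """Record the AI suggestion next to the recruiter's final decision and
    note when the recruiter overrode an 'advance' suggestion."""
    suggestion = route(recommendation)
    return {
        "candidate_id": recommendation.candidate_id,
        "ai_suggestion": suggestion,
        "final_decision": recruiter_decision,
        "overridden": suggestion == "advance" and recruiter_decision != "advance",
    }


record = decide(Recommendation("c-101", 0.82), recruiter_decision="reject")
print(record["ai_suggestion"], record["final_decision"], record["overridden"])
# advance reject True
```

Keeping a log of overrides gives you material for the regular evaluations described in this post: frequent overrides in one direction suggest the model or the threshold needs another look.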

Foster a culture of continuous learning and improvement by regularly evaluating the performance of AI systems. This lets you identify and fix any potential biases and enhance the accuracy and fairness of AI-generated suggestions.

Define clear guidelines and policies for the usage of AI in your recruitment processes. Specify the roles and responsibilities of AI technology, recruiters, and stakeholders involved. This clarity ensures that AI is used ethically and in line with organizational goals.

To oversee AI recruitment systems and keep them in line with ethical practices, the team members responsible should have a deep understanding of AI technology, its limitations, and its potential risks.

As the recruitment landscape changes, you have to learn how your company will carefully and insightfully navigate the intersection of AI and human judgment. By drawing from the best of both worlds, you can elevate your recruitment practices and positively impact the candidates you engage with, which also boosts your employer branding.

Ultimately, successfully integrating AI and human judgment sets the stage for a more efficient, inclusive, and effective recruitment process.

Smart hiring involves using smart technology. See how recruitment chatbots simplify communicating with potential candidates and raise your bar in a competitive job market.

Driven by curiosity, Varshini Ravi was a wandering freelance content writer working for a myriad of industries before finding a home at Zappyhire. A total bookworm, if her nose is not buried in a book, you can find her keeping up with global pop culture in strange corners of the Internet.
