November 19, 2019

by Dhaval Sarvaiya / November 19, 2019


Facebook. Apple. Gucci. Toyota. Adidas. YouTube. Starbucks. IKEA. The list goes on.

The list of brands that have launched augmented reality projects keeps growing. AR blends the physical and digital worlds to create an experience on a screen only a few inches wide. That makes it fascinating to businesses around the world that are vying for customer attention.

Augmented reality feels like technology straight out of the Star Wars series. It superimposes digital media on real-world surfaces without demanding heavy hardware or software.

Although we don’t know much about how the technologies in Star Wars work, we do know that AR is powered by three major technologies. Together, they collect real-world images, process them and overlay digital media on them, be it text or imagery.

The three are SLAM, depth tracking and image processing. Together, they make it possible to superimpose digital media on physical spaces at the right dimensions and in the right location. They do not work as standalone technologies; instead, they supply data to one another to make AR function.

SLAM (Simultaneous Localization and Mapping) makes it possible to render virtual images over real-world spaces and objects. It maps the physical space or object while tracking the device’s own position within it, combining camera input with motion sensors such as the gyroscope and accelerometer. Its algorithm then uses this map to render the virtual image at the right dimensions on the space or object. Most augmented reality APIs and SDKs available today come with built-in SLAM capabilities.

Depth tracking measures the distance between the AR device’s camera sensor and an object or surface. It works much the way a camera focuses on the desired object and blurs out the rest of its surroundings.
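Depth can be measured in several ways, for example with time-of-flight sensors or with a pair of cameras. As a minimal sketch of the two-camera (stereo) approach, which is an assumption here since the article does not say which method any particular device uses, the distance to a point follows from how far that point shifts between the left and right images: depth = focal length × baseline ÷ disparity. The function name and all numbers are hypothetical.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate depth in metres with the pinhole stereo relation Z = f * B / d.

    focal_length_px: focal length in pixels (assumed known from camera calibration)
    baseline_m: distance between the two camera centres, in metres
    disparity_px: horizontal shift of the same point between left and right images
    """
    if disparity_px <= 0:
        # No positive shift: the match failed or the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: 700 px focal length, 6 cm baseline, 21 px disparity.
distance_m = stereo_depth(700.0, 0.06, 21.0)  # about 2 metres
```

Closer objects shift more between the two images, so a larger disparity means a smaller depth.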

Once SLAM and depth tracking are complete, the AR program processes the image as required and projects it on the user’s screen. The screen could belong to a dedicated device (like Microsoft HoloLens) or to any other device running the AR application.

The image is captured through the lens of the user’s device and processed in the backend by the AR application. SLAM and depth tracking make it possible to render the image at the right dimensions and in the right location.
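Projecting digital media on the screen ultimately means blending rendered pixels into each camera frame. A minimal sketch of that final step, assuming the overlay has already been rendered at the right position and comes with a per-pixel opacity (alpha) mask, uses standard "over" alpha compositing. Frames are plain nested lists of RGB tuples purely for illustration (real apps typically do this on the GPU), and the frame contents are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def composite_pixel(camera: RGB, overlay: RGB, alpha: float) -> RGB:
    """Blend one overlay pixel over one camera pixel ("over" operator).

    alpha is the overlay's opacity: 0.0 keeps the camera pixel, 1.0 replaces it.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    r, g, b = (round(alpha * o + (1.0 - alpha) * c) for c, o in zip(camera, overlay))
    return (r, g, b)


def composite_frame(
    camera_frame: Sequence[Sequence[RGB]],
    overlay_frame: Sequence[Sequence[RGB]],
    alpha_mask: Sequence[Sequence[float]],
) -> List[List[RGB]]:
    """Composite equally sized frames (lists of rows of pixels) pixel by pixel."""
    return [
        [composite_pixel(c, o, a) for c, o, a in zip(cam_row, ov_row, a_row)]
        for cam_row, ov_row, a_row in zip(camera_frame, overlay_frame, alpha_mask)
    ]


# A hypothetical 1x2 frame: the first pixel is untouched, the second is half-covered.
camera = [[(100, 100, 100), (100, 100, 100)]]
overlay = [[(255, 0, 0), (200, 0, 0)]]
mask = [[0.0, 0.5]]
result = composite_frame(camera, overlay, mask)
```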

Let’s take a restaurant locator app as an example. When the user points their device at a restaurant, the AR app fetches the location details, matches them with additional details such as star ratings, reviews, directions and seat availability, and finally places this information on the screen. SLAM and depth tracking make it possible to place the digital information on the physical object, as viewed through the screen, at the right dimensions.


Apart from these three technologies (SLAM, depth tracking and image processing), several other subset technologies help make AR work. Chief among them are two approaches to detecting objects: trigger-based and view-based augmentation. Each of them has several subsets.

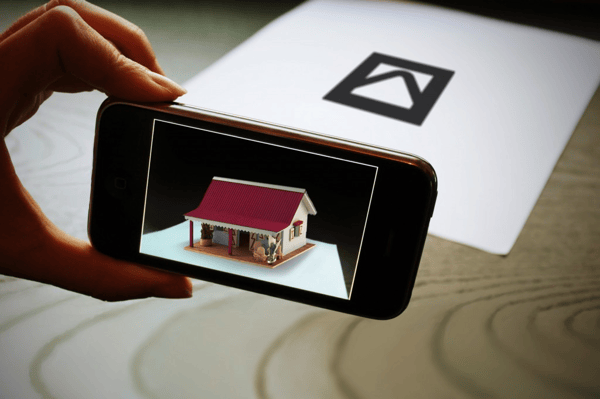

Trigger-based augmentation relies on triggers such as AR markers, symbols, icons or GPS locations to activate the augmentation. When the AR device is pointed at a trigger such as an AR marker, the AR app processes the 3D image and projects it on the user’s device.

Trigger-based AR can work with the help of:

- Marker-based augmentation
- Location-based augmentation
- Dynamic augmentation

Marker-based augmentation works by scanning and recognizing AR markers. AR markers are printed markers with specific designs. They look more or less like barcodes and enable the AR app to create digitally enhanced 360-degree images on the AR device.
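Real marker systems (OpenCV's ArUco module, for instance) also locate the marker's square border in the camera image, correct for perspective and tolerate some bit errors. The sketch below covers only the final recognition step, assuming the marker's cells have already been sampled into a grid of 0s and 1s: look the pattern up in a dictionary of known markers, trying all four orientations because the camera may see the marker rotated. The pattern, the dictionary and the content ID are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Grid = Tuple[Tuple[int, ...], ...]


def rotate_clockwise(grid: Grid) -> Grid:
    """Rotate a square grid of bits 90 degrees clockwise."""
    return tuple(tuple(row) for row in zip(*grid[::-1]))


def identify_marker(
    sampled: Sequence[Sequence[int]], dictionary: Dict[Grid, str]
) -> Optional[Tuple[str, int]]:
    """Match a sampled bit grid against known marker patterns.

    Each 90-degree rotation is tried in turn. Returns
    (content_id, clockwise_quarter_turns_needed) or None if nothing matches.
    """
    grid: Grid = tuple(tuple(row) for row in sampled)
    for turns in range(4):
        if grid in dictionary:
            return dictionary[grid], turns
        grid = rotate_clockwise(grid)
    return None


# Hypothetical 4x4 pattern (deliberately not rotationally symmetric) and content ID.
DEMO_MARKER: Grid = (
    (1, 0, 0, 0),
    (1, 1, 0, 0),
    (0, 1, 1, 0),
    (0, 0, 0, 1),
)
MARKERS = {DEMO_MARKER: "demo-3d-model"}

# Simulate the camera seeing the marker turned three quarter-turns clockwise.
seen = rotate_clockwise(rotate_clockwise(rotate_clockwise(DEMO_MARKER)))
match = identify_marker(seen, MARKERS)
```

The returned rotation tells the app how the marker is oriented, which it can use to orient the 3D content it renders on top.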

Nike’s SNKRS app is an example of marker-based augmentation. For one campaign, Nike designed the marker in the form of a special Nike logo, which users could scan to view premium sneakers on sale. The markers were distributed in newspapers, on graffiti walls, on posters and so on. The app helped Nike combat the menace of bot-driven reselling.

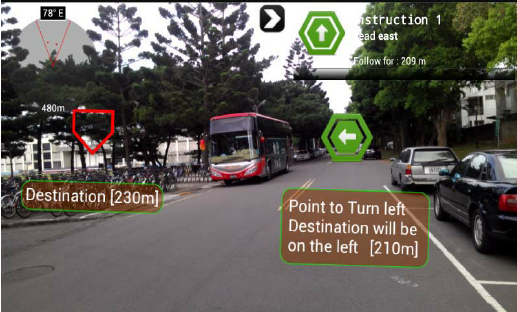

In location-based augmentation, the AR app picks up the device’s real-time location and combines it with dynamic information fetched from cloud servers or from the app’s backend. Most map and navigation apps with AR features, as well as vehicle parking assistants, work on this principle.
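To place a label over the right spot, a location-based app needs to know which direction a place lies in relative to where the camera is pointing. A minimal sketch, assuming the app already has the device's GPS fix and compass heading plus the place's coordinates from its backend: compute the distance and bearing with the haversine and forward-azimuth formulas, then map the angle difference onto the screen width. The function names, field of view and coordinates are hypothetical, and the linear angle-to-pixel mapping is a simplification.

```python
import math
from typing import Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def distance_and_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> Tuple[float, float]:
    """Return (great-circle distance in metres, initial bearing in degrees from true north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    # Haversine formula for the distance.
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    # Initial bearing (forward azimuth), normalised to [0, 360).
    y = math.sin(d_lambda) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(d_lambda)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def label_screen_x(bearing_deg: float, heading_deg: float, fov_deg: float, width_px: int) -> Optional[float]:
    """Horizontal pixel position for a place's label, or None if it is outside the camera view.

    Maps angle to pixels linearly, a simplification that ignores the lens projection.
    """
    # Signed angle from the camera's heading to the place, in [-180, 180).
    offset = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    return (offset / fov_deg + 0.5) * width_px


# Hypothetical positions: the user at (0, 0), a restaurant about 111 m due east.
dist_m, bearing = distance_and_bearing(0.0, 0.0, 0.0, 0.001)
x = label_screen_x(bearing, heading_deg=75.0, fov_deg=60.0, width_px=1080)
```

With the phone facing 75 degrees and the restaurant at a bearing of 90 degrees, the label lands right of centre; the wrap-around in `offset` keeps headings near north (for example 355 versus 5 degrees) working.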

BMW’s heads-up display is a perfect example of location-based augmentation at work.

According to BMW’s blog:

“Whenever a navigation manoeuvre needs to be performed, such as turning at an intersection, the system presents the information in such a way that it appears to blend with the road itself. The driver can keep his eye on the road throughout, and intuitively drives in the right direction.”

Dynamic augmentation is the most responsive form of augmented reality. It leverages the motion-tracking sensors in the AR device to detect images from the real world and superimposes digital media on them.

Sephora’s AR mirror is a perfect example of dynamic augmentation at work. The app works like a real-world mirror, reflecting the user’s face on the screen. Using on-screen markers and pickers, the user can try on various Sephora products to see how they would look in real life. This helps Sephora reduce the amount of makeup used up on trials, and it saves users the time and effort of applying and removing makeup.

In view-based augmentation, the AR app detects dynamic surfaces (such as buildings, desktop surfaces and natural surroundings). The app connects this view to its backend to match reference points and projects related information on the screen.

View-based augmentation works with the help of:

- Superimposition-based augmentation
- Generic digital augmentation

Let’s take a deep dive into how each of these works.

Superimposition-based augmentation works by detecting static objects that have already been fed into the AR application’s database. The app uses optical sensors to detect the object and overlays digital information on it.

For example, Hyundai has an AR-based owner’s manual that lets users point their AR device at the engine. The app then displays the name of each component and how to perform basic maintenance on it, all without the user having to consult a hundred-page owner’s manual.
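Once the app has recognised the engine and worked out where it sits relative to the camera, each label has to land on the right part of the screen. A minimal sketch of that step, assuming each component's 3D position in camera coordinates is already known (estimating that pose is the hard part and is not shown), uses the standard pinhole camera projection u = fx·x/z + cx, v = fy·y/z + cy. The intrinsics, part names and coordinates are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x (roughly the image centre)
    cy: float  # principal point y


def project(point: Point3, k: Intrinsics) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-space point (x right, y down, z forward, metres) to pixels."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, so there is nothing to draw
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def place_labels(parts: Dict[str, Point3], k: Intrinsics) -> Dict[str, Tuple[float, float]]:
    """Screen position for each recognised part's label, skipping parts behind the camera."""
    labels = {}
    for name, point in parts.items():
        pixel = project(point, k)
        if pixel is not None:
            labels[name] = pixel
    return labels


# Hypothetical camera intrinsics and part positions (already expressed in camera space).
camera = Intrinsics(fx=800.0, fy=800.0, cx=640.0, cy=360.0)
engine_parts = {"oil dipstick": (0.1, -0.05, 0.5), "coolant cap": (-0.2, 0.0, 0.8)}
labels = place_labels(engine_parts, camera)
```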

Generic digital augmentation gives developers and artists the freedom to create anything they wish within the immersive experience of AR. It allows 3D objects to be rendered and placed in actual spaces.

IKEA’s AR furniture shopping app is a perfect example of generic digital augmentation.
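Placing a virtual sofa on a real floor usually starts with a hit test: the app casts a ray from the camera through the point the user taps and finds where it meets a surface that SLAM has detected. AR SDKs generally provide this as a built-in query. As a minimal sketch of the geometry underneath, assuming the floor has already been detected as a plane and the tap has already been turned into a ray, the core is a ray-plane intersection. The scene values are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3, plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Point where a ray meets a plane, or None if it never does (parallel or behind the origin)."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # the ray runs parallel to the plane
    to_plane = (plane_point[0] - origin[0], plane_point[1] - origin[1], plane_point[2] - origin[2])
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # the plane is behind the camera along this ray
    return (origin[0] + t * direction[0], origin[1] + t * direction[1], origin[2] + t * direction[2])


# Hypothetical scene in y-up world coordinates: phone held 1.5 m above a detected floor,
# user taps a point whose camera ray points 45 degrees downward and forward (-z).
camera_position = (0.0, 1.5, 0.0)
tap_ray = (0.0, -1.0, -1.0)
floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)
anchor = ray_plane_hit(camera_position, tap_ray, floor_point, floor_normal)
```

The returned point is where the 3D model would be anchored; the app would then keep rendering the model at that spot as the camera moves.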

Augmented reality is a one-of-a-kind immersive technology. Its ability to render digital information atop physical objects and surfaces has positioned it as a futuristic technology. It holds great promise for e-commerce, education, engineering, marketing, customer service and several other sectors where information overload is becoming a problem.

But there is no one-size-fits-all AR technology. The right one has to be chosen based on the requirements of the end users.

Dhaval Sarvaiya is a Co-Founder of Intelivita, an enterprise web and mobile app development company based in the UK and India. He helps enterprises and startups overcome their digital transformation and mobility challenges with the might of on-demand solutions powered by cutting-edge technologies such as augmented reality, virtual reality, IoT, and native mobile apps.
