When you need an answer and need it fast, wouldn’t it be nice to ask everyone in the world who could help find an answer?

While yes, this would be great, it would also likely take an obscene amount of time and be pretty expensive. Instead, it’s better to gather your data by asking a select number of people with the information you need.

This method is known as data sampling.

For some help with data sampling, use statistical analysis software, which can not only assist in determining a sample size and analyzing the data but also in coming up with various conclusions and hypotheses once sampling is complete.

What is data sampling?

Data sampling is a common statistics technique for analyzing patterns and trends in a subset of data representative of a larger data set being examined. Using representative samples, data scientists and analysts can quickly build models while maintaining accuracy and deciding the amount and frequency of data collection.

Data sampling is a complex form of statistical analysis that can go very wrong if not done correctly. It can also require extensive research before sampling can begin.

Types of sampling

Various sampling methods can be used to extract samples from data, with the most effective approach depending on the dataset and context. These data sampling methods are generally categorized as probability sampling and non-probability sampling.

Probability sampling

In probability sampling, every aspect of the population has an equal chance of being selected to be studied and analyzed. These methods typically provide the best chance of creating a sample that’s as representative as possible.

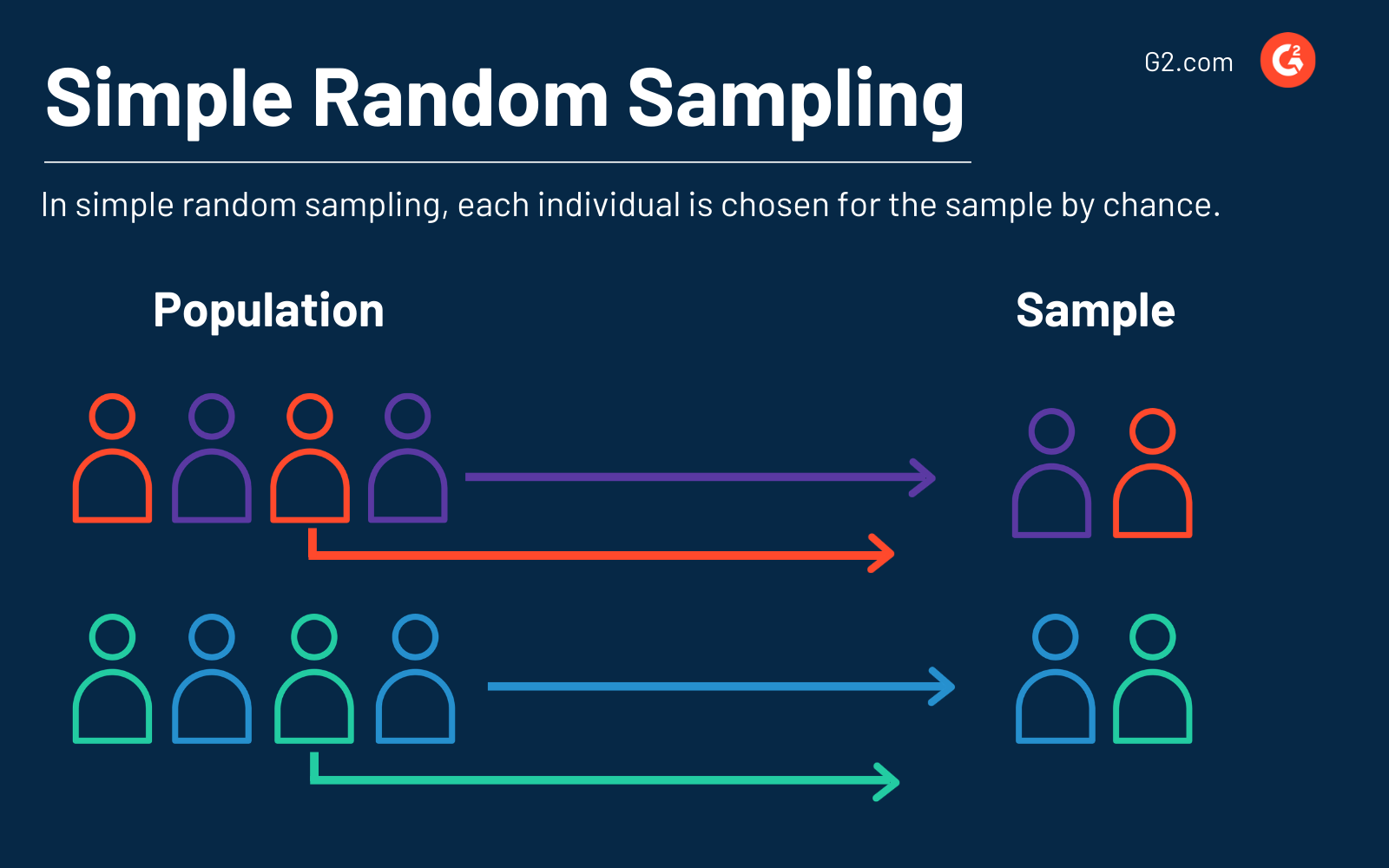

1. Simple random sampling

As the name suggests, The simple method of data sampling is random. Each individual is chosen by chance, and each member of the population or group has an equal chance of being selected.

Those going this route may even use software to choose randomly since it’s used when there is no prior information about the target population.

For example, say your business has a marketing team of 50 people and needs 10 of them on a new project about to launch. Each team member has an equal chance of being selected, with a probability of 5%.

An advantage of using simple random sampling is that it is the most direct way to perform probability sampling. On the other hand, those using simple random sampling may find that those selected don’t have the characteristics they want to study.

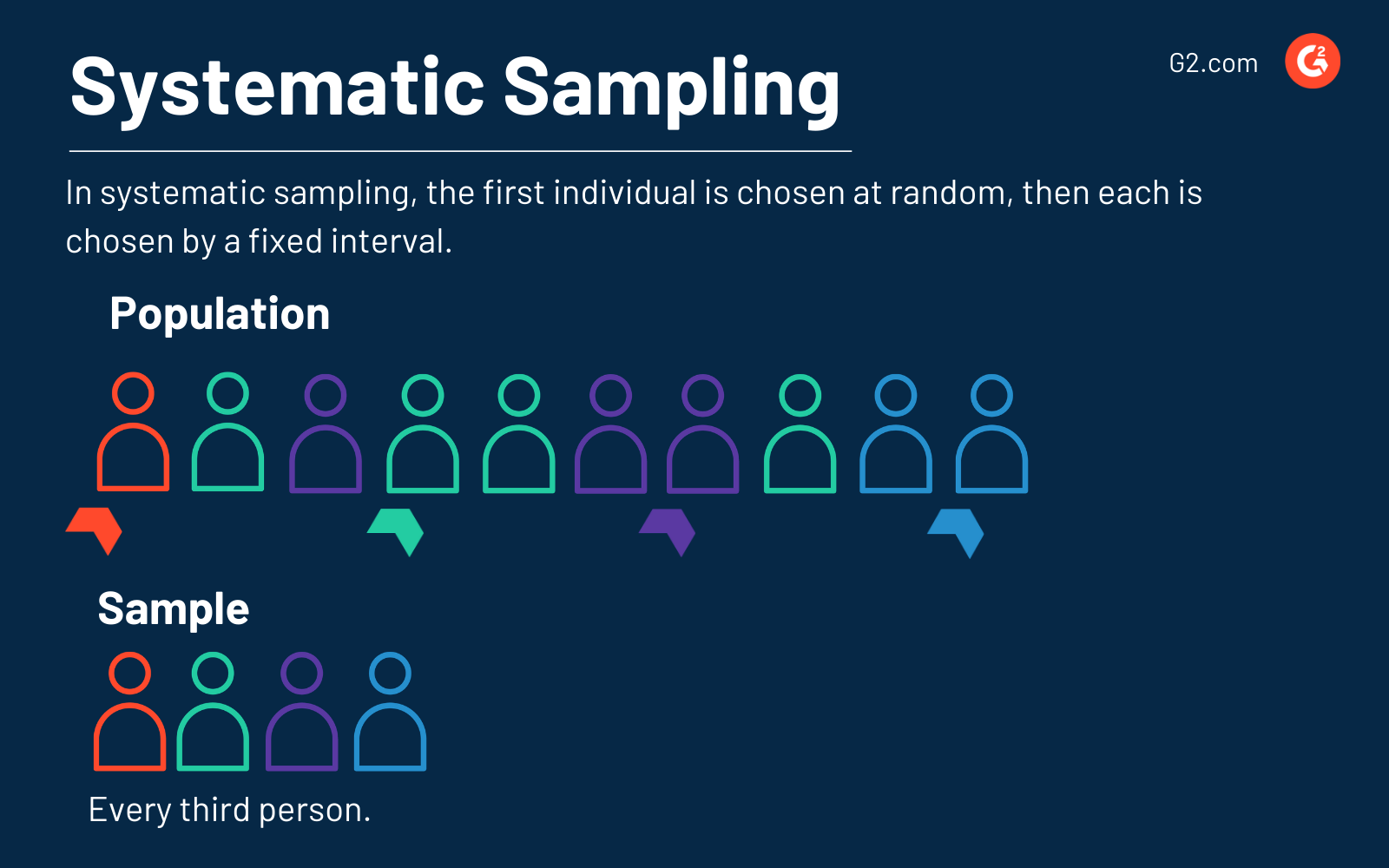

2. Systematic sampling

Systematic sampling is a little more complicated. In this method, the first individual is selected randomly, while others are selected using a “fixed sampling interval”. Therefore, a sample is created by setting an interval that derives data from the larger population.

An example of systematic data sampling would be choosing the first individual randomly and then choosing every third person for the sample.

Some clear advantages to using systematic sampling are that it’s easy to execute and understand, you have full control of the process, and there’s a low risk of data contamination.

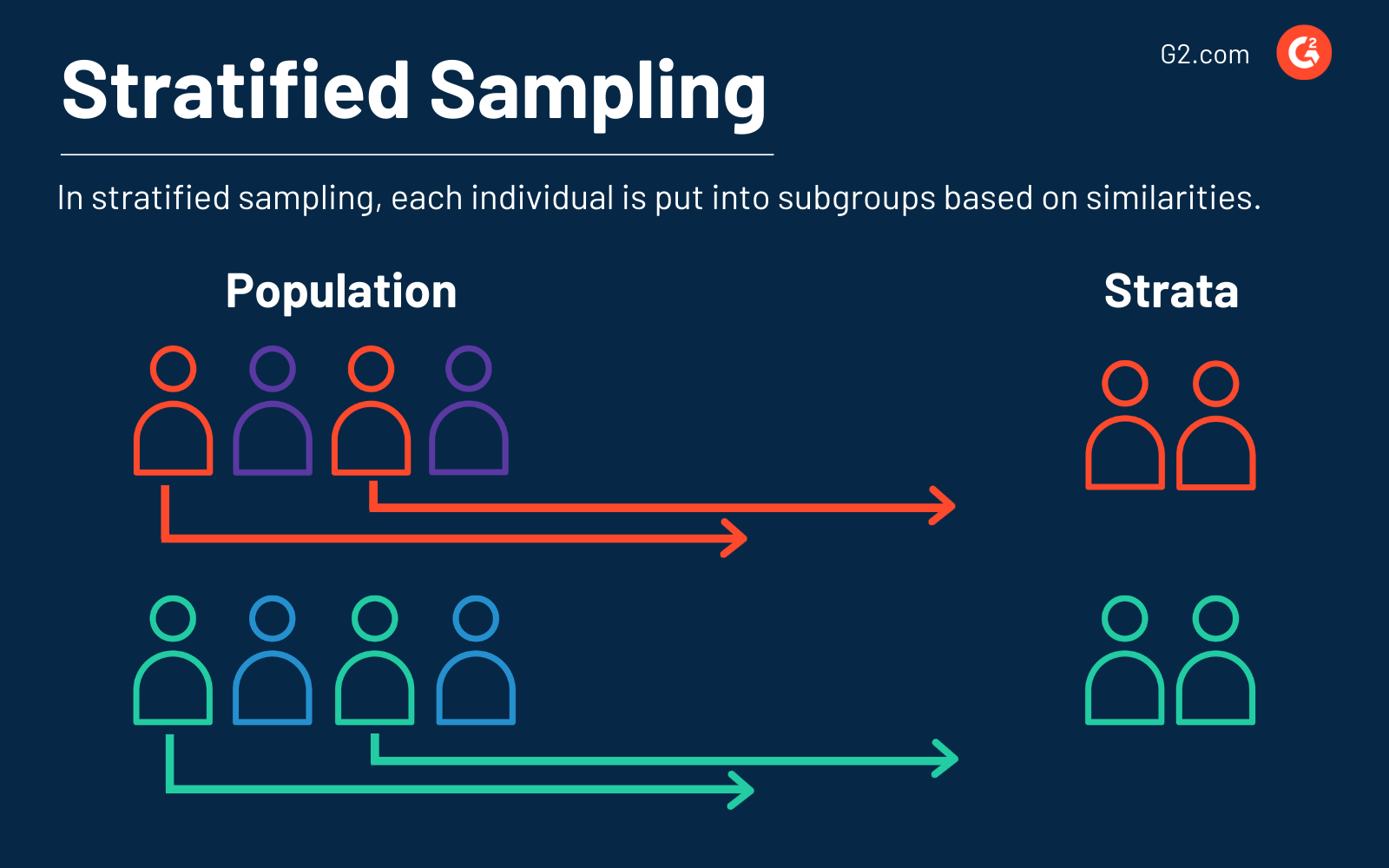

3. Stratified sampling

Stratified sampling is a method in which elements of the population are divided into small subgroups, called strata, based on their similarities or a common factor. Samples are then randomly collected from each subgroup.

This method requires prior information about the population to determine the common factor before creating the strata. These similarities can be anything from hair color to the year they graduated from college, the type of dog they have, and food allergies.

An advantage of stratified sampling is that this method can provide greater precision than other methods. Because of this, you can choose to test a smaller sample.

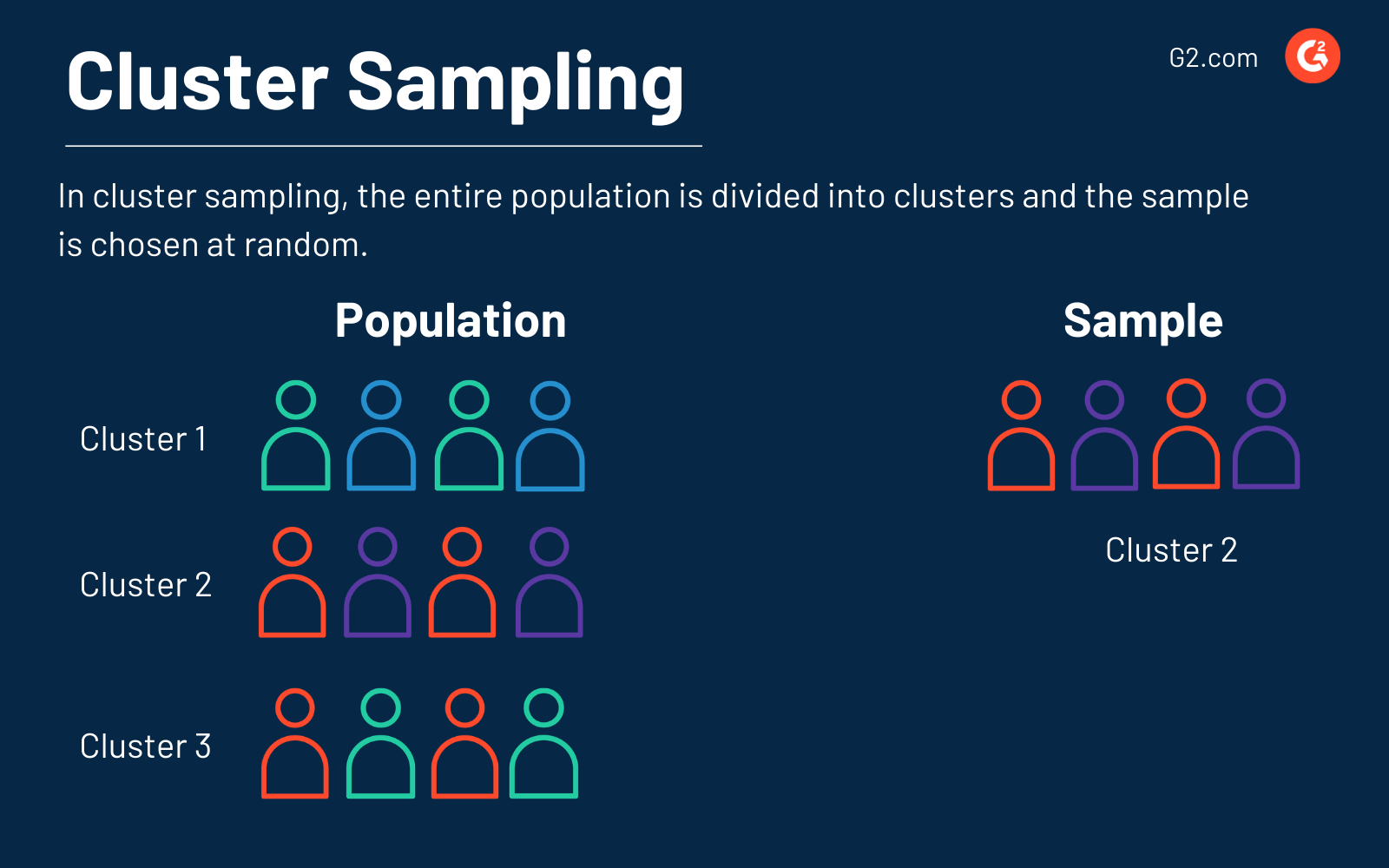

4. Cluster sampling

The clustering method divides the entire population or large data set into clusters or sections based on a defining factor. Then, the clusters are randomly selected to be included in the sample and analyzed.

Let's say each cluster is based on which Chicago neighborhood the individuals live in. These individuals are clustered by Wrigleyville, Lincoln Park, River North, Wicker Park, Lakeview, and Fulton Market. Then, the sample of individuals is randomly chosen to be represented by those living in Wicker Park.

This sampling method is also quick and less expensive and allows for a large sample of data to be studied. Cluster sampling, which is specifically designed for large populations, can also allow for many data points from a complete demographic or community.

5. Multistage sampling

Multistage sampling is a more complicated form of cluster sampling. Essentially, this method divides the larger population into many clusters. The second-stage clusters are then broken down further based on a secondary factor. Then, those clusters are sampled and analyzed.

The “staging” in multistage sampling continues as multiple subsets are identified, clustered, and analyzed.

Non-probability sampling

The data sampling methods in the non-probability category have elements that don’t have an equal chance of being selected to be included in the sample, meaning they don’t rely on randomization. These techniques rely on the ability of the data scientist, data analyst, or whoever is selecting to choose the elements for a sample.

Because of this, these methods risk producing a non-representational sample, which is a group that doesn’t truly represent the sample. This could result in a generalized conclusion.

1. Convenience sampling

In convenience sampling, sometimes called accidental or availability sampling, data is collected from an easily accessible and available group. Individuals are selected based on their availability and willingness to participate in the sample.

This data sampling method is typically used when the availability of a sample is rare and expensive. It’s also prone to bias since the sample may not always represent the specific characteristics needed to be studied.

Let’s go back to the example we used for simple random sampling. You still need 10 marketing team members to assist with a specific project. Instead of randomly selecting team members, you select the 10 who are most willing to help.

This method has the advantage of being easy to carry out at a relatively low cost promptly. It also allows for gathering useful data and information from a less formal list, like the methods used in probability sampling. Convenience sampling is the preferred method for pilot studies and hypothesis generation.

2. Quota sampling

When the quota method is used in data sampling, items are chosen based on predetermined characteristics. The data sampling researcher ensures equal representation within the sample for all subgroups within the data set or population.

Quota sampling depends on the preset standard. For example, the population being analyzed is 75% women and 25% men. Since the sample should reflect the same percentage of women and men, only 25% of the women will be chosen to be in the sample to match the 25% of men.

Quota sampling is ideal for those considering population proportions while remaining cost-effective. Once characters are determined, quota sampling is also easy to administer.

3. Judgment sampling

Judgment sampling, also known as selective sampling, is based on the assessment of experts in the field when choosing who to ask to be included in the sample.

In this case, let’s say you are selecting from a group of women aged 30-35, and the experts decide that only the women with a college degree will be best suited to be included in the sample. This would be judgment sampling.

Judgment sampling takes less time than other methods, and since there’s a smaller data set, researchers should conduct interviews and other hands-on collection techniques to ensure the right type of focus group. Since judgment sampling means researchers can go directly to the target population, there’s an increased relevance of the entirety of the sample.

4. Snowball sampling

The snowball sampling, sometimes called referral sampling or chain referral sampling, is used when the population is rare and unknown.

This is typically done by selecting one or a small group of individuals based on specific criteria. The person(s) selected are then used to find more individuals to be analyzed.

Consider a highly sensitive situation or topic, like contracting a contagious disease. These individuals may not openly discuss their situation or participate in surveys to share information regarding the disease.

Since not all people with this disease will respond to questions asked, the researcher can choose to contact people they know, or those with the disease may contact others they know who also have it to collect the information needed.

This method is called snowballing because, since existing people are asked to nominate people to be in the sample, the same increases in size like a rolling snowball.

Snowball sampling allows a researcher to reach a specific population that would be difficult to sample using other methods while keeping costs down. Due to the smaller sample size, it also requires little planning and a smaller workforce.

Data resampling

Once you have a data sample, this can be used to estimate the population. However, since this only gives you a single estimate, there isn’t any variability or certainty in the estimate. Because of this, some researchers estimate the population multiple times from one data sample, which is called data resampling.

Each new estimate is referred to as a subsample since it’s from the original data sample. Each sample that estimates the population from resampling is its own statistical tool to quantify its accuracy.

Want to learn more about Data Visualization Tools? Explore Data Visualization products.

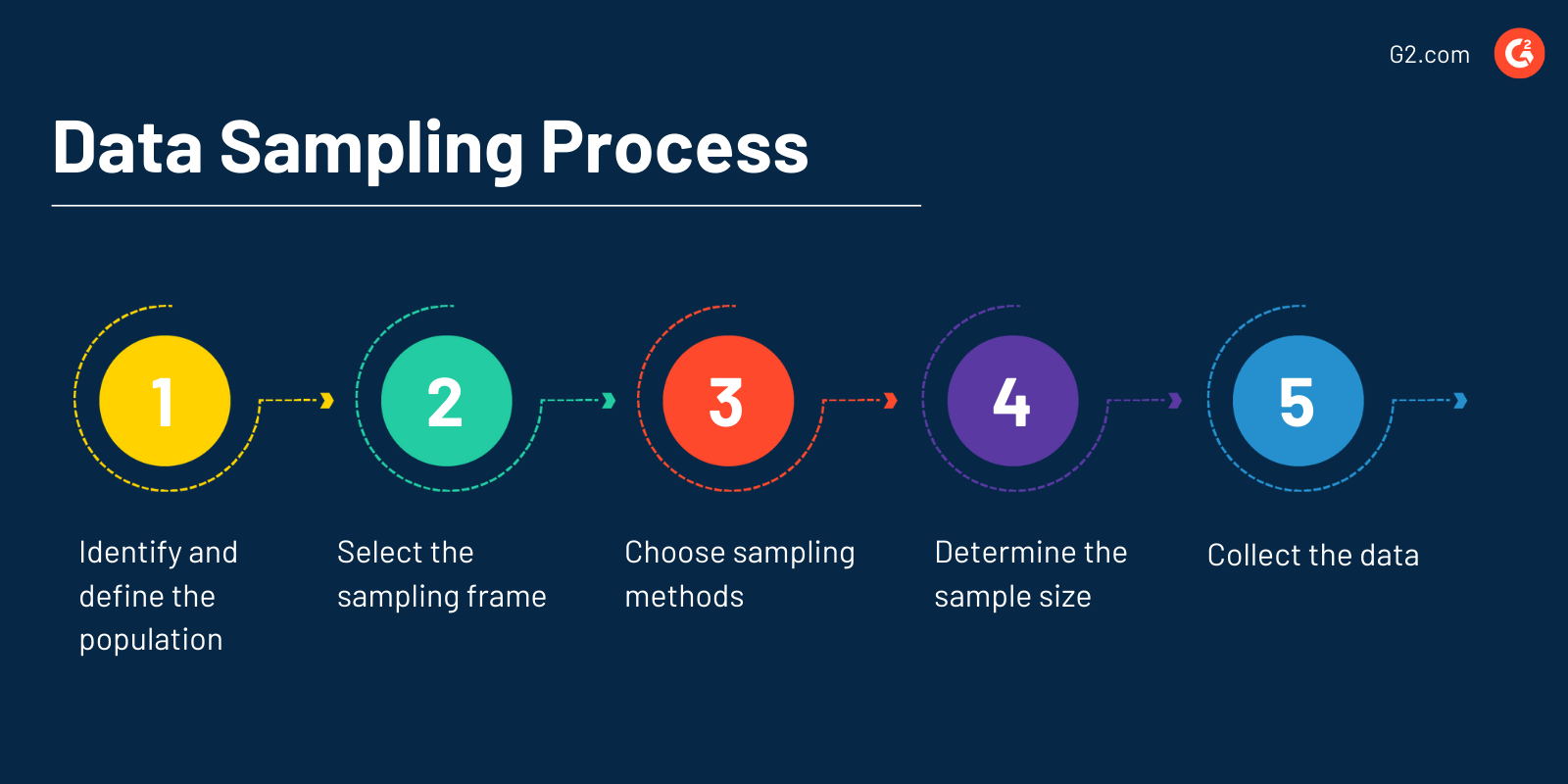

Data sampling process

The overall process of data sampling is a statistical analysis method that helps to draw conclusions about populations from samples.

The first step in data sampling is identifying and defining the population you want to analyze. This can be done by conducting surveys, opinion polls, observations, focus groups, questionnaires, or interviews.

This step can also be referred to as data collection. Parameters need to be set, whether it’s decided only to survey women between the ages of 18 and 35 or men who graduated from college in 2010 in the state of Vermont.

Next, select the sampling frame, which is the list of items or people forming a population in which the sample is taken. For example, a sampling frame could be the names of people who live in a specific town for a survey on family size in that town.

Then, a sampling method will be chosen. Depending on the dataset's characteristics and research goals, you can choose any of the data sampling methods mentioned in the previous section.

The fourth step is to determine the sample size to analyze. In data sampling, the sample size is the exact number of samples that will be measured for an observation to be made.

Let’s say your population will be men who graduated from college in 2010 in the state of Vermont, and that number is 40,000, then the sample size will be 40,000. The larger the sample size, the more accurate the conclusion will be.

Finally, it’s time to collect data from the sample. Based on the data, you’ll either make a decision, conclusion, or actionable plan.

Common data sampling errors

When sampling data, those involved must make statistical conclusions about the population from a series of observations.

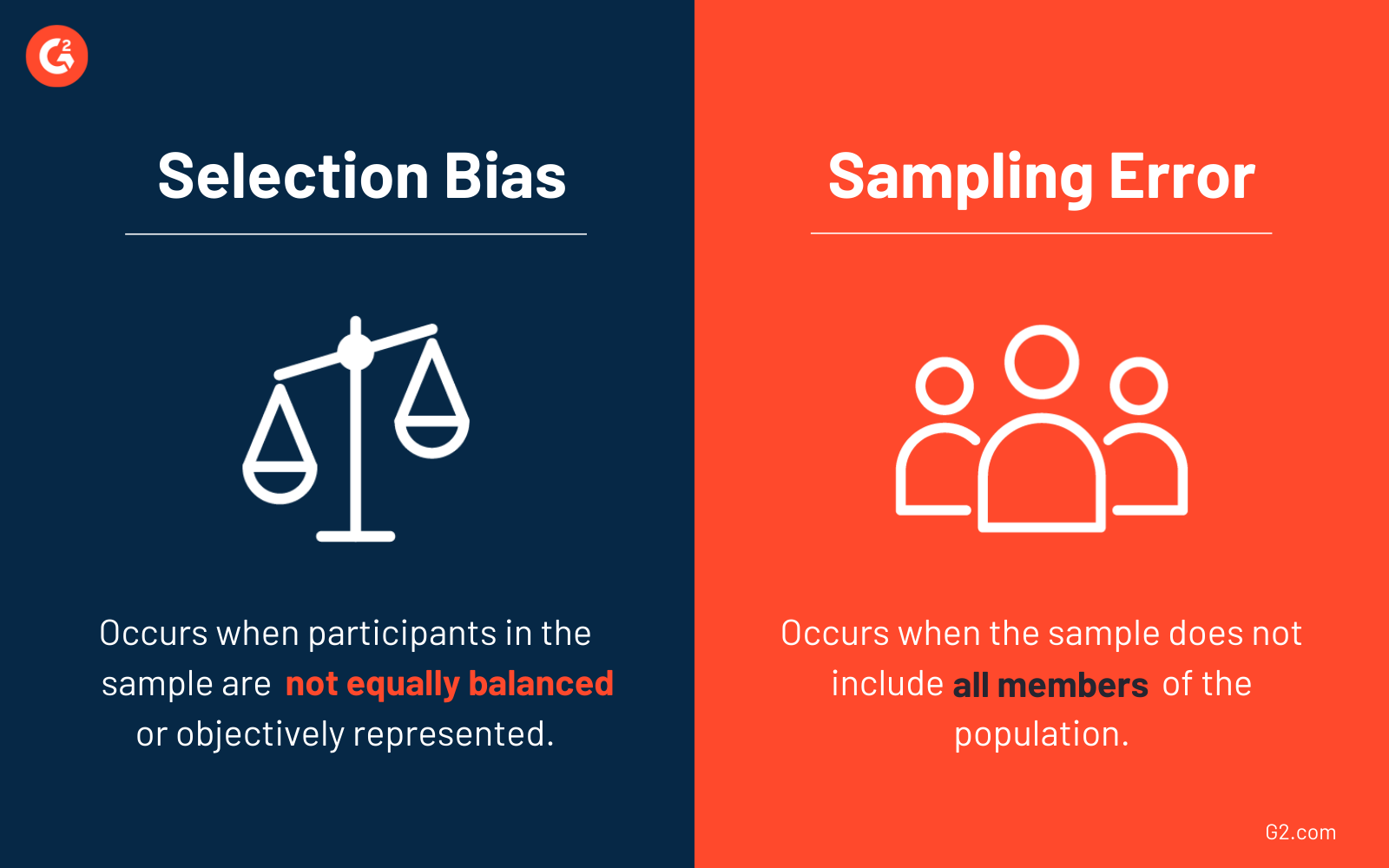

Because these observations often come from estimations or generalizations, errors are bound to occur. The three main types of errors that occur when performing data sampling are:

- Selection bias: The bias that’s introduced by the selection of individuals to be part of the sample that isn’t random. Therefore, the sample cannot represent the population that is looking to be analyzed.

-

Sampling error: The statistical error occurs when the researcher doesn’t select a sample that represents the entire data population. When this happens, the results found in the sample don’t represent the results that would have been obtained from the entire population.

The only way to 100% eliminate the chance of a sampling error is to test 100% of the population. Of course, this is usually impossible. However, the larger the sample size in your data, the less extreme the margin of error will be.

- Non-response error: This error occurs when selected individuals do not participate in a survey or study. It arises from factors like lack of interest, difficulty reaching participants, or survey fatigue and affects the accuracy of the data collected.

Advantages of sampling data

There’s a reason why data sampling is so popular, as there are many advantages.

For starters, it’s useful when the data set that needs to be examined is too large to be analyzed as a whole. An example of this is big data analytics, which examines raw, massive data sets in an attempt to uncover trends.

In these cases, identifying and analyzing a representative sample of data is more efficient and cost-effective than surveying the entire population or data set. In addition to being low-cost, analyzing a sample of data takes less time than analyzing the entire population of data.

It’s also a great option if your business has limited resources. Studying the entire data population would require time, money, and varying equipment. If supplies are limited, data sampling is an appropriate strategy to consider.

Challenges of data sampling

Some challenges or drawbacks of data sampling could arise during the process. An important factor to consider is the size of the required sample and the possibility of experiencing a sampling error, in addition to sample bias.

When delving into data sampling, a small sample could reveal the most important information needed from a data set. However, in other cases, using a large sample can increase the likelihood of accurately representing the dataset as a whole—even if the increased size of the sample may interfere with manipulating and interpreting that data.

Because of this, some may have difficulty selecting a truly representative sample for more reliable and accurate results.

There’s no such thing as a free sample

At least, not when it comes to your data. No matter which method you choose, it will take time and effort.

Narrow down the size of the population you want to analyze, roll up your sleeves, and get started. The solid numbers your business needs to make data-driven decisions are just a sample away!

You have your data, sample, and analysis. Want a clearer view? Explore data visualization tools for better insights.

This article was originally published in 2020. It has been updated with new information.

Mara Calvello

Mara Calvello is a Content and Communications Manager at G2. She received her Bachelor of Arts degree from Elmhurst College (now Elmhurst University). Mara writes customer marketing content, while also focusing on social media and communications for G2. She previously wrote content to support our G2 Tea newsletter, as well as categories on artificial intelligence, natural language understanding (NLU), AI code generation, synthetic data, and more. In her spare time, she's out exploring with her rescue dog Zeke or enjoying a good book.